Université YORK University

ATKINSON FACULTY OF LIBERAL AND PROFESSIONAL STUDIES

SCHOOL OF ANALYTIC STUDIES & INFORMATION TECHNOLOGY

SCIENCE AND TECHNOLOGY STUDIES

NATS 1800 6.0 SCIENCE AND EVERYDAY PHENOMENA

Lecture 22: Artificial Intelligence

Topics

-

In 1936 Alan Turing (1912 - 1954) and, independently, Alonzo Church (1903 - 1995) published papers on the limits of mechanical computation. Church worked with a formalism known as the lambda calculus; Turing outlined the construction of a formal model of a digital computer, now known as the Turing Machine, and proved that, under certain fairly general assumptions, certain Turing machines are universal, in the sense that they can simulate any other Turing machine. Modern digital computers are, apart from their finite memory, actual implementations of universal Turing machines. Turing, however, also showed that there are problems such machines cannot solve, and he became deeply interested in the ultimate limits of computers. In particular, he asked: "Can machines think?"

Robot Walking vs Human Skeleton Walking

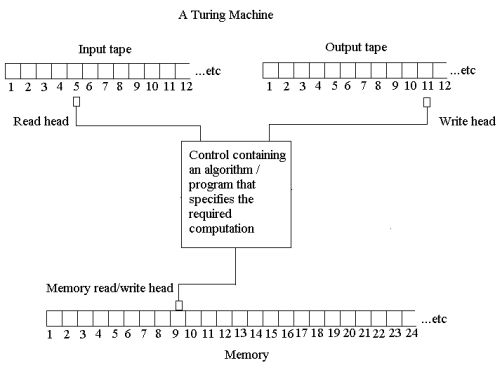

Essentially, a Turing machine can be imagined as a device that can read (scan) a tape of potentially infinite length, divided into squares, each carrying one symbol. These symbols could be 1's and 0's, or anything else. The machine can read one symbol at a time, erase and replace it if necessary, and move in either direction along the tape, always one square at a time. The machine can be in any one of a number of internal states, and it can change from one state to another, depending both on the symbol read off the tape and on certain rules stored in the machine. If you think of the overall effect of this process, a Turing machine's input is a series (a string) of symbols and its output is another series or string of symbols.

A Turing Machine
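The read, write and move cycle just described can be made concrete in a few lines of code. The following is a minimal Python sketch, not Turing's own formulation: the blank symbol `_`, the state names, and the example rule table (a hypothetical machine that adds 1 to a binary number) are all illustrative assumptions. Each rule says: in this state, reading this symbol, write a symbol, move one square left or right, and switch to a new state.

```python
from collections import defaultdict

BLANK = "_"  # assumed symbol for an empty square

# Hypothetical example machine: adds 1 to a binary number.
# Each rule maps (state, symbol read) -> (symbol to write, move, next state),
# where move is -1 (one square left) or +1 (one square right).
INCREMENT = {
    ("right", "0"): ("0", +1, "right"),      # scan right to the end of the number
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),  # past the end: step back, start adding
    ("carry", "1"): ("0", -1, "carry"),      # 1 + 1 = 0, carry 1 to the left
    ("carry", "0"): ("1", -1, "done"),       # 0 + 1 = 1, no more carry
    ("carry", BLANK): ("1", -1, "done"),     # carry past the leftmost digit
}


def run_turing_machine(rules, tape_input, start, halt, max_steps=10_000):
    """Run a one-tape Turing machine and return the non-blank part of the tape."""
    # The tape is unbounded in both directions: unvisited squares read as BLANK.
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    head, state, steps = 0, start, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
        steps += 1
    used = [i for i, s in tape.items() if s != BLANK]
    if not used:
        return ""
    return "".join(tape[i] for i in range(min(used), max(used) + 1))


print(run_turing_machine(INCREMENT, "1011", "right", "done"))  # 1100
```

With the input 1011 (eleven) the machine halts with 1100 (twelve) on the tape. The `max_steps` guard is a practical addition, not part of Turing's model: Turing showed that no general procedure can decide in advance whether an arbitrary machine will ever halt, so a simulator must be prepared to give up.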

As an aside, a physical implementation of the Turing machine has been carried out recently by Ehud Shapiro of the Computer Science and Applied Mathematics Department at the Weizmann Institute of Science (Israel). "Shapiro's mechanical computer has been built to resemble the biomolecular machines of the living cell, such as ribosomes. Ultimately, this computer may serve as a model in constructing a programmable computer of subcellular size, that may be able to operate in the human body and interact with the body's biochemical environment, thus having far-reaching biological and pharmaceutical applications." [ from Computing Device To Serve As Basis For Biological Computer ]

The classic paper in which Turing asked "Can machines think?" is entitled Computing Machinery and Intelligence. It was originally published in Mind, 59, 433-460 (1950): "I propose to consider the question, 'Can machines think?' This should begin with definitions of the meaning of the terms 'machine' and 'think'. The definitions might be framed so as to reflect so far as possible the normal use of the words, but this attitude is dangerous. If the meaning of the words 'machine' and 'think' are to be found by examining how they are commonly used it is difficult to escape the conclusion that the meaning and the answer to the question, 'Can machines think?' is to be sought in a statistical survey such as a Gallup poll. But this is absurd. Instead of attempting such a definition I shall replace the question by another, which is closely related to it and is expressed in relatively unambiguous words."

The 'other' question is very simple: "The new form of the problem can be described in terms of a game which we call the 'imitation game.' It is played with three people, a man (A), a woman (B), and an interrogator (C) who may be of either sex. The interrogator stays in a room apart from the other two. The object of the game for the interrogator is to determine which of the other two is the man and which is the woman. He knows them by labels X and Y, and at the end of the game he says either 'X is A and Y is B' or 'X is B and Y is A'. The interrogator is allowed to put questions to A and B thus: C: Will X please tell me the length of his or her hair? Now suppose X is actually A, then A must answer. It is A's object in the game to try and cause C to make the wrong identification. His answer might therefore be 'My hair is shingled, and the longest strands are about nine inches long.' In order that tones of voice may not help the interrogator the answers should be written, or better still, typewritten. The ideal arrangement is to have a teleprinter communicating between the two rooms. Alternatively the question and answers can be repeated by an intermediary. The object of the game for the third player (B) is to help the interrogator. The best strategy for her is probably to give truthful answers. She can add such things as 'I am the woman, don't listen to him!' to her answers, but it will avail nothing as the man can make similar remarks. We now ask the question, 'What will happen when a machine takes the part of A in this game?' Will the interrogator decide wrongly as often when the game is played like this as he does when the game is played between a man and a woman? These questions replace our original, 'Can machines think?'"

The 'imitation game' has since become known as the Turing Test. Of course Turing was not the only one to ask questions about the ultimate limitations of computers. As Alan Ross Anderson writes, "The development of electronic computers in recent years has given a new twist to questions about the relations between 'mental' and 'mechanical' events, and stimulated an extraordinary amount of discussion. Since 1950 more than 1000 papers have been published on the question as to whether 'machines' can 'think'."

[ from Alan Ross Anderson, ed., Minds and Machines, Prentice-Hall, 1964, p. 1 ]

For example, in The Computer and the Brain (New Haven & London: Yale University Press, 1958), written for the 1956 Silliman Lectures, John von Neumann addressed head-on the question of the relationship between computers and the human central nervous system. Incidentally, this book offers a very good introduction to the architecture of computers. Also of great interest is a package of articles by J C R Licklider, one of the principal forces behind the development of the Internet. One of the articles, "Man-Computer Symbiosis," although published in 1960, is full of remarkably accurate predictions about the future of computers and of artificial intelligence.

Returning now to Turing's article: his conclusion was essentially that, if the interrogator cannot tell the machine and the human apart, why shouldn't we accept that the machine is also intelligent? Turing was well aware of the objections that could be raised against his proposal, and a substantial portion of the article is devoted to anticipating and answering them. The jury is still out on this issue. Notice that the Loebner Prize has been set up to reward the first computer which (who?) passes the Turing test.

-

There is of course another concept that should be clarified before we can truly discuss the Turing test (even though Turing claims to bypass this hurdle), and that is "intelligence". What is intelligence? Or, more precisely, what is human intelligence? A nice overview of the concept of intelligence is presented by Bill Huitt. It now seems clear that intelligence has many facets.

Read Who Owns Intelligence? by Howard Gardner, which appeared in the February 1999 issue of The Atlantic Monthly [ go to the Library to read it ].

In fact, there is no agreed-upon definition of intelligence. The history of this concept is as varied and controversial as it can be. Howard Gardner's article offers a glimpse of this tortuous history. Add the relatively recent and abundant literature on 'animal intelligence,' and the even more recent serious speculation on 'alien intelligence,' and you will have a sense of the difficulties we face. Perhaps all we can say at this moment is that "intelligence is the cognitive ability of an individual to learn from experience, to reason well, to remember important information, and to cope with the demands of daily living." [ R Sternberg, as quoted in Bill Huitt's article ].

In other words, intelligence is not one thing, but a cluster of related but distinct abilities of the brain. The belief that intelligence is simply whatever an IQ test measures has been largely discredited.

Since, however, we are interested here in the possibility of artificially emulating or simulating intelligence by means of computers, let's get back to Turing's classic 1950 article. As we saw earlier, instead of asking what intelligence is, and whether computers are or can become intelligent, Turing suggested that any machine whose behavior is indistinguishable from that of an intelligent creature is, for all intents and purposes, intelligent. Besides avoiding the problem of 'defining' intelligence, this approach has the advantage of being very familiar to all of us. Each one of us takes his or her own intelligence for granted and, on observing similar behavior in others, assumes that they are intelligent too. This of course is not as unproblematic as it sounds. Philosophers have been debating the more general problem of 'other minds' for a very long time. How do I know that other people's mental states and operations are essentially the same as mine?

One of the arguments against Turing's proposal (which was raised by Turing himself) is the so-called 'argument from consciousness', which Turing illustrates with a passage from Professor Geoffrey Jefferson's 1949 Lister Oration: "Not until a machine can write a sonnet or compose a concerto because of thoughts and emotions felt, and not by the chance fall of symbols, could we agree that machine equals brain—that is, not only write it but know that it had written it." This is a common objection against the possibility of artificial intelligence. A related formulation is 'Lady Lovelace's objection.' In an 1842 memoir concerning Babbage's machine, she had written: "The Analytical Engine has no pretensions to originate anything. It can do whatever we know how to order it to perform."

While it is perhaps true that today's computers don't exhibit signs of creative behavior, the question raised by Ada Lovelace should be considered in a broader context: are computers intrinsically unable to be creative? The answers given to this and related questions vary widely, and span the whole spectrum from 'yes' to 'no.'

For recent musings on the Turing Test, read Hello, Are You Human?, at the end of which you will also find a few interesting links. You may also wish to take part in the Turing Game, a research project at the Georgia Institute of Technology. "The goal of the Turing Game is to better understand the nature of online interaction."

In his books The Emperor's New Mind: Concerning Computers, Minds, and the Laws of Physics (Oxford University Press 1989), and Shadows of the Mind: A Search for the Missing Science of Consciousness (Oxford University Press 1994), Roger Penrose argues that many questions concerning artificial intelligence, physics, and the philosophy of mind are deeply related, and he offers a long argument that human thought cannot be computed.

Psyche, an interdisciplinary journal of research on consciousness, held a Symposium on Roger Penrose's Shadows of the Mind. You may want to read some of the contributions.

You may also be interested in What Can't the Computer Do?, where John Maynard Smith reviews Penrose's first book. The review appeared in the March 15, 1990 issue of the New York Review of Books.

A different approach is taken by MIT's Steven Pinker; see Organs Of Computation: A Talk With Steven Pinker, which appeared in EDGE.

-

A very interesting way to examine in greater detail the issues raised by the Turing test was developed by John Searle, an American philosopher. The Chinese Room, as this argument is called, claims to refute Turing's idea.

John Nugent offers a good overview, and Stevan Harnad presents a fairly comprehensive review of the issue in Minds, Machines and Searle.

A more difficult but more complete article appears in the Internet Encyclopedia of Philosophy, where you will also find a good bibliography.

It is interesting to note that, in his Monadology, Leibniz "had asked the reader to imagine what would happen if you magnified the insides of the head more and more until it was so large you could walk right through it like a mill" (in the sense of a place for grinding flour). The 17th paragraph of the Monadology begins:

"Moreover, it must be confessed that perception and that which depends upon it are inexplicable on mechanical grounds, that is to say, by means of figures and motions. And supposing there were a machine, so constructed as to think, feel, and have perception it might be conceived as increased in size, while keeping the same proportions, so that one might go into it as into a mill. That being so, we should, on examining its interior, find only parts which work one upon another, and never anything by which to explain a perception." [ as quoted in Michael Arbib's article Warren Mcculloch's Search for the Logic of the Nervous System, which appeared in Perspectives in Biology and Medicine, v043.2, Winter 2000. ]

Here is a succinct description of the Chinese Room: "In the Chinese room thought experiment, a person who understands no Chinese sits in a room into which written Chinese characters are passed. In the room there is also a book containing a complex set of rules (established ahead of time) to manipulate these characters, and pass other characters out of the room. This would be done on a rote basis, e.g. 'When you see character X, write character Y.' The idea is that a Chinese-speaking interviewer would pass questions written in Chinese into the room, and the corresponding answers would come out of the room appearing from the outside as if there were a native Chinese speaker in the room. It is Searle's belief that such a system could indeed pass a Turing Test, yet the person who manipulated the symbols would obviously not understand Chinese any better than he did before entering the room."

And here is how Searle himself puts it: "Imagine that a bunch of computer programmers have written a program that will enable a computer to simulate the understanding of Chinese … Imagine that you are locked in a room, and in this room are several baskets full of Chinese symbols. Imagine that you … do not understand a word of Chinese, but that you are given a rule book in English for manipulating these Chinese symbols. The rules specify the manipulations of the symbols purely formally, in terms of their syntax, not their semantics … Now suppose that some other Chinese symbols are passed into the room, and that you are given further rules for passing back Chinese symbols out of the room. Suppose that unknown to you the symbols passed into the room are called 'questions' by the people outside of the room, and the symbols you pass back out of the room are called 'answers to the questions.' Suppose, furthermore, that the programmers are so good at designing the programs and that you are so good at manipulating the symbols, that very soon your answers are indistinguishable from those of a native Chinese speaker … Now the point of the story is simply this: by virtue of implementing a formal computer program from the point of view of an outside observer, you behave exactly as if you understood Chinese, but all the same you don't understand a word of Chinese. But if going through the appropriate computer program for understanding Chinese is not enough to give you an understanding of Chinese, then it is not enough to give any other digital computer an understanding of Chinese." [ from J Searle, Minds, Brains and Science (Harvard U Press, 1986, p. 32) ]
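The rote rule-following in both descriptions can be caricatured as a lookup table. This Python sketch is a deliberately crude illustration, far simpler than the rule book Searle imagines: the two 'questions', their 'answers' and the fallback reply are made up for this example, and the English glosses appear only in comments for the reader's benefit. The function compares strings purely by their shape; nothing in it represents what the characters mean.

```python
# Hypothetical rule book: "when you see these characters, pass out those characters".
# The English glosses in the comments are for the reader only; the code never uses them.
RULE_BOOK = {
    "你好吗": "我很好",     # "How are you?" -> "I am fine."
    "你会说中文吗": "会",   # "Can you speak Chinese?" -> "Yes (I can)."
}
DEFAULT_REPLY = "请再说一遍"  # "Please say that again."


def chinese_room(symbols_in: str) -> str:
    """Return the characters the rule book pairs with the incoming characters."""
    # Pure symbol matching (syntax): no step consults the meaning (semantics).
    return RULE_BOOK.get(symbols_in, DEFAULT_REPLY)


print(chinese_room("你会说中文吗"))  # 会
```

From outside, the answer to "Can you speak Chinese?" is "Yes"; inside, nothing has understood either the question or the answer. Searle's point is that a far larger and cleverer rule book would still only be manipulating symbols by their form.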

You may also want to read Searle's article "Is the Brain's Mind a Computer Program?" in Scientific American (262: 26-31, 1990) in the library.

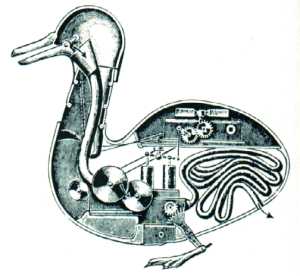

Jacques de Vaucanson (1709 - 1782). Mechanical Duck

-

Before examining Searle's refutation of the Turing test, it is helpful to review once again the questions and answers concerning the basic issue:

Can Machines Think?

Searle argues that the Turing test is not sufficient to determine whether a computer really exhibits human intelligence. In other words, intelligent behavior is not proof of (human) intelligence.

The Chinese Room is simply an instance of 'simulation'. According to Searle, what truly characterizes the human mind is 'intentionality': "a form of energy whereby the cause, either in the form of desires or intentions, represents the very state of affairs that it causes." (op. cit., p. 66). So, who is right? I guess we don't know yet.

Personally, I would rather bet my money on Turing, particularly in view of Minsky's approach to the problem (see next bullet).

-

A completely different approach to artificial intelligence is that of Marvin Minsky, of the MIT Media Lab and the MIT AI Lab. His idea of human intellectual structure and function is presented in The Society of Mind (New York: Simon and Schuster, 1986), also available, in an enhanced edition, as a CD-ROM. You can get a good summary of his views on artificial intelligence (Strong AI) in his article Why People Think Computers Can't.

Minsky attacks the problem of machine intelligence not by critiquing what may go on in the machine, but by questioning what we believe is going on in our minds.

"We naturally admire our Einsteins and Beethovens, and wonder if computers ever could create such wondrous theories or symphonies. Most people think that creativity requires some special, magical 'gift' that simply cannot be explained. If so, then no computer could create—since anything machines can do (most people think) can be explained … We shouldn't intimidate ourselves by our admiration of our Beethovens and Einsteins. Instead, we ought to be annoyed by our ignorance of how we get ideas—and not just our 'creative' ones [ … ] Do outstanding minds differ from ordinary minds in any special way? I don't believe that there is anything basically different in a genius, except for having an unusual combination of abilities, none very special by itself."

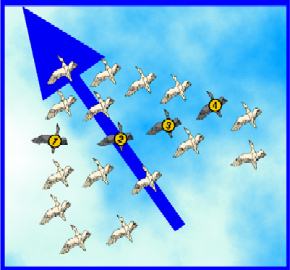

Minsky thus goes back to an old question: what do we mean when we say that we think? "The secret of what something means lies in the ways that it connects to all the other things we know." Instead of conceiving of our brain as a monolithic organ with magical properties, it may be more accurate to look at it as a set of distinct but connected subsystems, each one with its own limited, and perhaps specialized, abilities. Individual termites are not particularly smart, but a termite colony appears to be. It keeps its 'castle' air-conditioned even on the hottest summer days in Africa, it cares for its young in well-organized nurseries, it cultivates mushroom gardens, it is always 'aware' of the state of the entire colony, and so on. Why not approach the problem of the organization of our brain along termite lines? "That's why I think we shouldn't program our machines that way, with clear and simple logic definitions. A machine programmed that way might never 'really' understand anything—any more than a person would. Rich, multiply-connected networks provide enough different ways to use knowledge that when one way doesn't work, you can try to figure out why. When there are many meanings in a network, you can turn things around in your mind and look at them from different perspectives; when you get stuck, you can try another view. That's what we mean by thinking!"

A flock of birds shows a similar kind of collective robustness: "Physicists have been studying the remarkable process of how a flock of birds moves flawlessly as an organized group, even if the individual birds make frequent misjudgments. If a bird in a flock makes an error in the direction it should travel, it will tend to swerve side-to-side rapidly. You might think this error in judgment would overwhelm the other birds, causing the flock to become disoriented and fly apart very quickly. But this process actually helps keep these misjudgments under control, by quickly spreading the error among many birds so that it becomes very diluted." [ from The Physics of Flocking ]

Misjudgment in a Bird Flock
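The dilution of a single bird's error can be illustrated with a toy model. In this Python sketch, which is our own simplifying assumption and not the model used in the flocking research quoted above, birds sit on a ring and, at each step, every bird adopts the average heading of itself and its two neighbors. Headings are plain numbers (degrees away from the flock's course), which is reasonable only for small deviations. One bird starts 30 degrees off course.

```python
def align_step(headings):
    """Each bird adopts the mean heading of itself and its two ring neighbors."""
    n = len(headings)
    return [
        (headings[(i - 1) % n] + headings[i] + headings[(i + 1) % n]) / 3
        for i in range(n)
    ]


def simulate(n_birds=20, error=30.0, steps=10):
    """Start with one bird `error` degrees off course; track the worst deviation."""
    headings = [0.0] * n_birds
    headings[0] = error
    worst = [max(abs(h) for h in headings)]
    for _ in range(steps):
        headings = align_step(headings)
        worst.append(max(abs(h) for h in headings))
    return headings, worst


headings, worst = simulate()
print([round(w, 2) for w in worst[:4]])  # [30.0, 10.0, 10.0, 7.78]
```

The total deviation is conserved (the average heading stays at 1.5 degrees), but it is shared among more and more birds, so that no single bird remains far off course.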

Evidence that Minsky's position may well have some merit is accumulating. For example, Laurent Keller, of the University of Lausanne, Switzerland, and colleagues, have taught robots some of the behavioural rules used by ants, and found that the robots can form cooperating, autonomous groups that are more successful than individual robots.

For a non-technical introduction to similar problems and the computer simulations created to understand them, see John L Casti, Would-Be Worlds: How Simulation is Changing the Frontiers of Science, John Wiley & Sons, 1997. Another short but great source is Mitchel Resnick, Turtles, Termites, and Traffic Jams: Explorations in Massively Parallel Microworlds, The MIT Press, 1997. Many useful references and resources can also be found on the web.

-

Idealised Electronic Neuron: The Building Block of Neural Nets

Readings, Resources and Questions

-

Alan Turing was in fact involved in the design of the Automatic Computing Engine (ACE) at the National Physical Laboratory, London.

Visit The Turing Archive for the History of Computing.

A good site dedicated to the life and work of Turing is The Alan Turing Home Page.

Another brief but excellent account of Turing's work is an essay by John M Kowalik.

There are many simulations of Turing machines on the web. For example, you may want to download and play with Visual Turing.

-

The possibility that computers may one day become as intelligent and conscious as human beings, or more so, and perhaps even better than us, has been the subject of much speculation and of much science fiction. It is always interesting (and sobering) to realize that such visions are, at least partially, conditioned by the culture in which they are formulated. Here are two examples. The first is The Machine Stops, a short story E M Forster wrote in 1909. The second is As We May Think, a famous article written in 1945 by Vannevar Bush, one of the pioneers of modern computers. It is an exploration of the ways the new, emerging information technology would affect society. Bush was no science fiction writer. He was a renowned electrical engineer who invented the differential analyzer, a large analog computer and one of the last calculating devices to precede the digital computer.

In 1995, the Brown/MIT Vannevar Bush Symposium was held to celebrate "50 Years After 'As We May Think'." You may want to read an extended abstract of the event.

-

"Developed by two professors of education at Indiana University, the History of Influences in the Development of Intelligence Theory and Testing website gives a comprehensive overview of the field of intelligence theory and testing from Plato to the present day. Using an 'interactive map,' the site offers a timeline of the major figures in the field and their affiliations with one another. Users can click on names, time periods, or schools to access more in-depth information. The site's 'Hot Topics' section is particularly interesting, giving substantial material relating to some of the most controversial issues in intelligence theory, including an extensive section-by-section summary of the bestseller The Bell Curve and article-length rebuttals by scholars, including one by anthropologist Stephen Jay Gould."

-

Pay a visit to Eliza: A Friend You Could Never Have Before. Would she pass the Turing Test?
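ELIZA, written by Joseph Weizenbaum at MIT in the mid-1960s, produced its replies by matching keywords and patterns in what the user typed and echoing fragments back. The Python sketch below imitates only that general idea; its three patterns, the word-swapping table and the fallback reply are invented for illustration and are not taken from Weizenbaum's program.

```python
import re

# Pronoun swaps so that echoed fragments read from the program's point of view.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}

# Hypothetical (pattern, reply template) pairs, tried in order.
PATTERNS = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r".*\bmother\b.*", re.IGNORECASE), "Tell me more about your family."),
]
FALLBACK = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words in an echoed fragment."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def eliza(sentence: str) -> str:
    """Reply using the first pattern that matches the whole sentence."""
    sentence = sentence.strip().rstrip(".!?")
    for pattern, template in PATTERNS:
        match = pattern.fullmatch(sentence)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return FALLBACK


print(eliza("I feel sad about my job."))  # Why do you feel sad about your job?
```

Nothing in the program models what "sad" or "job" means; whether replies of this kind can fool an interrogator, and for how long, is exactly what the question above invites you to try.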

-

We don't have room in one lecture to introduce and discuss several other important artificial intelligence technologies, such as 'expert systems,' 'neural nets,' 'genetic programming,' or 'alife.'

A very good site to visit is Approaches: Methods Used to Create Intelligence, where you can find an introduction to many relevant topics. Another important general reference is Artificial Intelligence, Expert Systems, Neural Networks and Knowledge Based Systems.

See also Expert Systems and History of Expert Systems.

Matthew Caryl's Neural Nets is a comprehensive, but readable, account of the history, background and basic principles of this approach. Kevin Gurney presents A Brief History of Neural Nets, with useful references to the early work by Wiener (Cybernetics), McCulloch and Pitts (Artificial Neurons), and others. You may also want to read the ai-faq/neural-nets.
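The artificial neurons of McCulloch and Pitts (1943) were simple threshold devices: a unit 'fires' (outputs 1) when the combined strength of its inputs reaches a threshold. The Python sketch below uses a common simplified form with numeric weights, in which a negative weight stands in for an inhibitory input; McCulloch and Pitts' own formulation treated inhibition differently, so this is an illustration rather than a reconstruction. It shows that single units can act as logic gates, and that a small network of them can compute exclusive-or (XOR), which no single unit of this kind can.

```python
def threshold_unit(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def and_gate(a, b):
    return threshold_unit((a, b), (1, 1), threshold=2)


def or_gate(a, b):
    return threshold_unit((a, b), (1, 1), threshold=1)


def not_gate(a):
    # A single inhibitory (negative-weight) input: fires only when a is 0.
    return threshold_unit((a,), (-1,), threshold=0)


def xor_net(a, b):
    # Two layers of units: "a or b, but not both".
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b), xor_net(a, b))
```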

What's It All About, Alife? is a well-written article by Robert Crawford at Harvard and a good introduction to artificial life, or alife. This is the study of artificial systems that exhibit some of the properties of populations of living systems: self-organization, adaptation, evolution, metabolism, etc. In this sense, living systems are themselves examples of alife. This is important, because the study of alife may also shed light on life itself. One of the starting points of alife was a game invented by John Conway.

"The Game of Life (or simply Life) is not a game in the conventional sense. There are no players, and no winning or losing. Once the 'pieces' are placed in the starting position, the rules determine everything that happens later. Nevertheless, Life is full of surprises! In most cases, it is impossible to look at a starting position (or pattern) and see what will happen in the future. The only way to find out is to follow the rules of the game. Life is one of the simplest examples of what is sometimes called 'emergent complexity' or 'self-organizing systems.' This subject area has captured the attention of scientists and mathematicians in diverse fields. It is the study of how elaborate patterns and behaviors can emerge from very simple rules. It helps us understand, for example, how the petals on a rose or the stripes on a zebra can arise from a tissue of living cells growing together. It can even help us understand the diversity of life that has evolved on earth." [ from Paul Callahan's What is the Game of Life? ]

- Visit IBM's website devoted to Deep Blue, the machine which in 1997 defeated Garry Kasparov, the reigning world chess champion.

- An example of AI at work: ASE: Autonomous Sciencecraft Experiment. This experiment is designed to study and "demonstrate the potential for space missions to use onboard decision-making to detect, analyze, and respond to science events, and to downlink only the highest value science data."

© Copyright Luigi M Bianchi 2003-2005

Picture Credits: Wolfgang Kreutzer's AI Page · Skull Collection

U of Newcastle upon Tyne · AIP · PermutationCity

Last Modification Date: 23 February 2006
