MacKenzie, I. S. (2012). Evaluating eye tracking systems for computer input. In Majaranta, P., Aoki, H., Donegan, M., Hansen, D. W., Hansen, J. P., Hyrskykari, A., & Räihä, K.-J. (Eds.). Gaze interaction and applications of eye tracking: Advances in assistive technologies, pp. 205-225. Hershey, PA: IGI Global.

Evaluating Eye Tracking Systems for Computer Input

I. Scott MacKenzie

Dept. of Computer Science and Engineering, York University, Toronto, Canada M3J 1P3

mack@cse.yorku.ca

1 INTRODUCTION

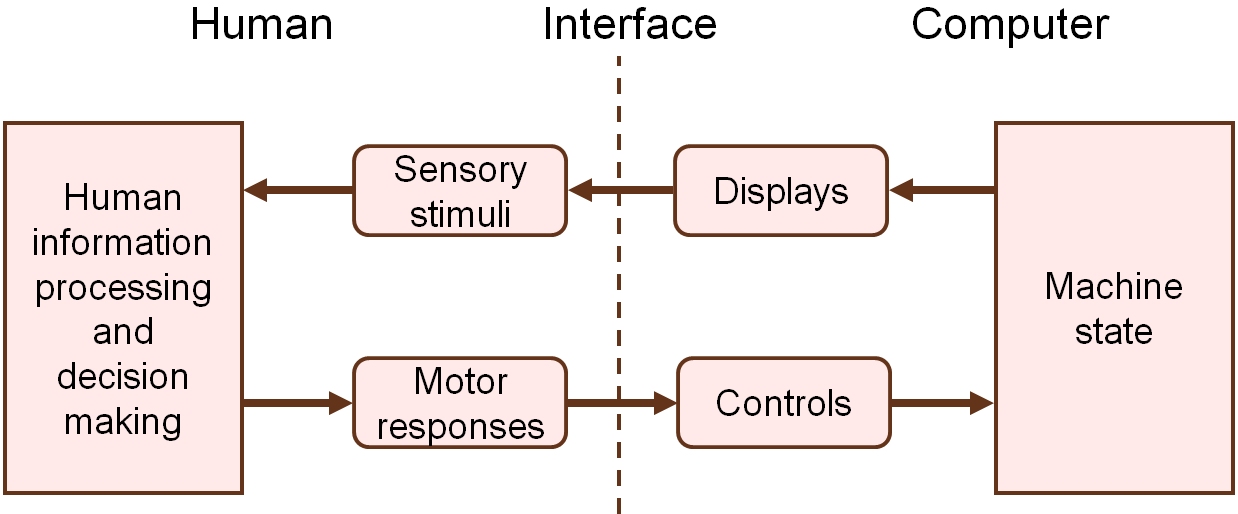

The eye is a perceptual organ. In the normal course of events, the eye receives sensory stimuli from the environment. The stimuli are processed in the brain as "information" and decisions are formulated on appropriate actions. The normal course of events also calls for the human's decisions to yield motor responses that effect changes in the environment. If the environment is a machine or computer, then the sensory stimuli come from displays and the motor responses act on controls. This scenario mirrors the classical view of the human-machine interface. Figure 1 provides a schematic.

Figure 1. Classical view of the human-machine interface [4, p. 20]

Although visual displays are the most common, it is also valid to speak of "auditory displays" or "tactile displays". These are outputs from the machine or computer that stimulate the human sense of hearing or touch, respectively. Human motor responses come by way of our fingers, hands, arms, legs, feet, and so on, and are used to control the machine or computer. Of course, speech or articulated sounds are also human responses and may act as controls to issue commands to the computer or machine.

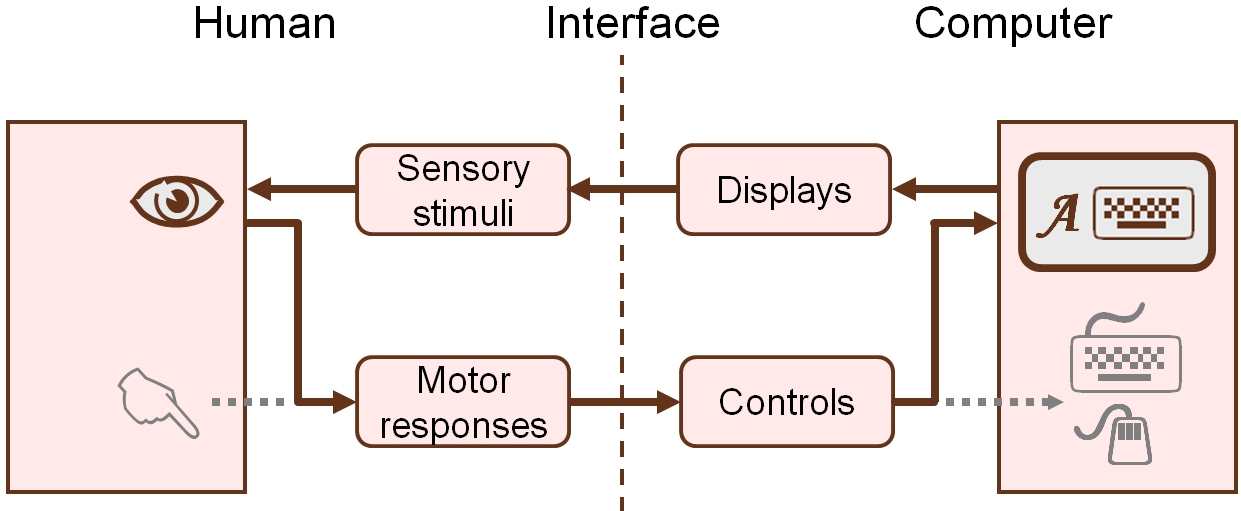

Today, computing technology is pervasive and ubiquitous. Computers are used by humans for work and pleasure, in tasks both complex and trivial, and for pursuits mundane, challenging, and creative. Eye trackers are just one example of a computing technology that offers tremendous potential for humans. Applications for eye trackers can be divided along two lines. In one application, an eye tracker is a passive instrument that measures and monitors the eyes to determine where, and at what, the human is looking. In another, the eye tracker is an active controller that allows a human, through his or her eyes, to interact with and control a computer. When a human uses an eye tracker for computer control, the "normal course of events" changes considerably. The eye is called upon to do "double duty", so to speak. Not only is it an important sensory input channel, it also provides motor responses to control the computer. A revised schematic of the human-computer interface is shown in Figure 2. The normal path from the human to the computer is altered. Instead of the hand providing motor responses to control the computer through physical devices (set in grey), the eye provides motor responses that control the computer through "soft controls" – virtual or graphical controls that appear on the system's display.

Figure 2. The human-computer interface. With an eye tracker, the eye serves double duty, processing sensory stimuli from computer displays and providing motor responses to control the system

This chapter is focused on the second of these two applications – the use of an eye tracker for computer input. Our concern is with methods of evaluating the interaction.

When an eye tracker is used for computer input, how well does the interaction work? Can common tasks be done efficiently, quickly, accurately? What is the user's experience? How are alternative interaction methods evaluated and compared to identify those that work well, and deserve further study, and those that work poorly, and should be discarded? These are the sorts of questions that can be answered with a valid and robust methodology for evaluating eye trackers for computer input.

2 COMPUTER INPUT

As a computer input device, an eye tracker typically emulates a computer mouse. Much like point-select operations with a mouse, the eye can "look-select", and thereby activate soft controls, such as buttons, icons, links, or text. Evaluating eye trackers for computer input, therefore, requires a methodology that addresses both the conventional issues for computer input using a mouse, and the unique characteristics of the eye and the eye tracking apparatus.

In 1978, Card, English, and Burr undertook the first comparative evaluation of the mouse [3]. Using tasks that combined cursor positioning with text selection, they compared a mouse to a joystick and two keying methods. Their study clearly established the superiority of the mouse. Many follow-on studies confirmed their findings. However, the methodologies used in the studies, taken as a whole, vary considerably, and this makes comparisons difficult. In view of this, a technical committee of the International Organization for Standardization (ISO TC159/SC4/WG3) undertook an initiative in the 1980s to standardize the testing methodology for computer pointing devices [29]. Draft versions were disseminated and assessed in the 1990s, with the final standard published in 2000 [12].

Of interest here is Part 9, "Requirements for Non-Keyboard Devices" (ISO 9241-9). ISO 9241-9 lays out the methodology for assessing both performance and user comfort with input devices, such as mice, trackballs, touchpads, joysticks, or pens. Since an eye tracker emulates a mouse for computer input, it falls within the scope of the standard. The standard specifies seven performance tests, as described in Figure 3.

| Test | Test Procedure |
|---|---|
| One-directional tapping | Manoeuvring a tracking symbol (e.g., a cursor) between two targets of width W separated by distance D and selecting the targets using the device's selection method (e.g., pressing a button). |
| Multi-directional tapping | As in one-directional tapping, except using a circular arrangement of targets. |
| Dragging | Clicking and dragging, as in selection of an item in a pull-down menu or dragging of an object (e.g., a file icon) from one window to another. |
| Path-following | Moving an object (such as a circle) of width B between the borders of two parallel lines of length D separated by distance K without touching the boundary lines. |
| Tracing | Moving an object of width B within a track of width K formed by two concentric circles of radii R and R + K without touching the boundary lines. |
| Free-hand input | Writing legible symbols (e.g., letters or digits) along a horizontal line of boxes of a specified dimension. |
| Grasp-and-park | Using the same hand, performing a series of simple pointing tasks while operating a key on the keyboard between tasks. |

Figure 3. Performance tests specified in ISO 9241-9

Most pointing devices are capable, to varying degrees, of performing all the tasks in Figure 3. The unique challenge in using an eye tracker for computer input is apparent in considering these same tasks. While "look-select" is naturally suited to eye input, controlling the movement of an object or cursor along a path is not. The eyes move by saccades – quick movements of the point of gaze from one location to another. It is not feasible, for example, to use an eye tracker for path following, tracing, or free-hand input – tasks easily done with a mouse or pen.

The test most commonly used for performance comparisons is the tapping task, either one-directional or multi-directional. Although traditional measures of speed and accuracy always serve as points of evaluation and comparison, the primary performance metric specified in the standard is "throughput" (TP) in bits per second (bits/s). Throughput is a composite measure that includes both the speed and accuracy in performance. Evidence suggests that a user's predisposition to emphasize speed vs. accuracy does not affect throughput [18]. Thus, differences in throughput are less susceptible to variation in the measures of speed or accuracy alone, and are more likely due to inherent properties in the test conditions. This is an important and worthwhile property of throughput as a human performance metric.

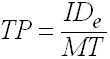

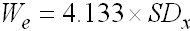

The equation for throughput is Fitts' "index of performance" [8], except that it uses an effective index of difficulty (IDe). Specifically,

$$TP = \frac{ID_e}{MT} \tag{1}$$

where MT is the mean movement time, in seconds, for all trials within the same condition, and

$$ID_e = \log_2\left(\frac{D}{W_e} + 1\right) \tag{2}$$

IDe, in bits, is calculated from D, the distance to the target, and We, the "effective target width". We is calculated as

$$W_e = 4.133 \times SD_x \tag{3}$$

where SDx is the standard deviation in the selection coordinates measured along the axis from the home position to the center of the target. If multi-directional tapping is used, the data are first transformed to effectively treat each trial as one-dimensional along the x axis. Using the effective target width allows throughput to incorporate the spatial variability in human performance. Thus, throughput includes both speed and accuracy [17].
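The throughput calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not the software used in the studies: the function names are hypothetical, the constant 4.133 is the multiplier commonly used with ISO 9241-9 to obtain the effective target width from SDx, the sample standard deviation is assumed, and the nominal distance D is used rather than an effective distance.

```python
import math
import statistics


def project_onto_task_axis(start, target, selection):
    """Signed offset of the selection point from the target center,
    measured along the axis running from the start point to the target.
    This reduces a multi-directional trial to one dimension."""
    ax, ay = target[0] - start[0], target[1] - start[1]
    axis_length = math.hypot(ax, ay)
    sx, sy = selection[0] - target[0], selection[1] - target[1]
    return (sx * ax + sy * ay) / axis_length


def throughput(distance, movement_times_s, deviations):
    """Throughput in bits/s for the trials of one test condition."""
    sd_x = statistics.stdev(deviations)        # SDx (sample SD, an assumption)
    w_e = 4.133 * sd_x                         # effective target width (Eq. 3)
    id_e = math.log2(distance / w_e + 1)       # effective index of difficulty (Eq. 2)
    mt = statistics.mean(movement_times_s)     # mean movement time in seconds
    return id_e / mt                           # throughput (Eq. 1)


# Example with made-up data for four trials at D = 300 pixels.
offsets = [
    project_onto_task_axis((0, 0), (300, 0), (310, 4)),
    project_onto_task_axis((0, 0), (300, 0), (290, -3)),
    project_onto_task_axis((0, 0), (0, 300), (2, 310)),
    project_onto_task_axis((0, 0), (0, 300), (-5, 290)),
]
print(f"{throughput(300, [1.0, 1.0, 1.0, 1.0], offsets):.2f} bits/s")
```

Because We grows with the scatter of the selection points, a condition with fast but sloppy selections and a condition with slow but precise selections can yield similar throughput values.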

If one considers mouse evaluations in research not following the standard, throughput ranges from about 2.6 bits/s to 12.5 bits/s. Studies conforming to the standard report mouse throughputs from about 3.7 bits/s to 4.9 bits/s [28]. The narrower spread in the ISO-conforming data is a clear sign that ISO 9241-9 improves the quality and comparability of device evaluations.

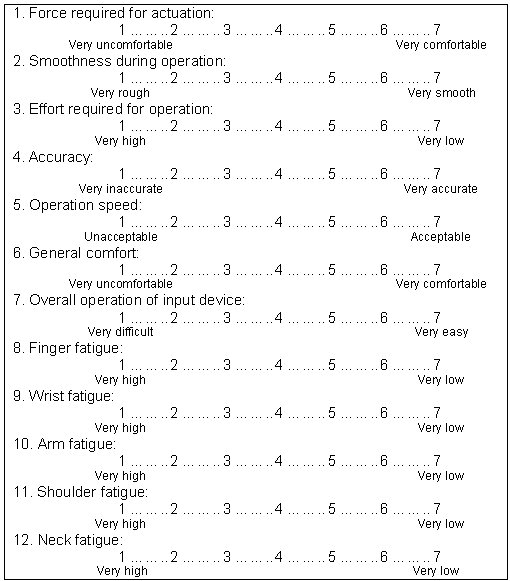

ISO 9241-9 assesses comfort using a questionnaire soliciting Likert-scale responses to twelve items. See Figure 4. Items 1 through 7 are considered "general indices", while items 8 through 12 are considered "fatigue indices". The questionnaire items are generally modified to suit the device condition under test. In assessing an eye tracker, for example, the items for finger, wrist, and arm fatigue are probably not relevant. An item for "eye fatigue" may be substituted instead.

Figure 4. ISO 9241-9 questionnaire to assess device comfort

3 EYE INPUT

Rather than controlling a cursor and moving it to a location, the eye simply looks at the location. However, unlike a mouse or other pointing device, the eye is not capable of moving a cursor or fixation point smoothly along a path. Changing the eye fixation point involves a rapid distance-traversing movement known as a saccade. Furthermore, the eye is incapable of fixating on a single pixel on a computer display, to the exclusion of other pixels. Herein lies a significant challenge in using an eye tracker for computer input. The problem (to call it that) is due to the part of the eye responsible for sharp central vision – the fovea. The fovea is the area of highest spatial resolution in the eye. It performs visual perception in tasks where detail is paramount, such as reading, driving, or sewing. Although it is often noted that the fovea captures only about two degrees of the visual field (a region about twice the width of a thumb at arm's length) [7, p. 14; 14-16; 31], a reverse perspective is more revealing: because the fovea's visual field is about two degrees, single-pixel sensing of the eye's fixation point on a computer display is simply not possible. This limitation is compounded by the inherent jitter in eye fixation [16, 32]. The eye's point of gaze is never perfectly still. Small jumps in the estimated coordinates of fixation are inherent in eye tracking systems, through no fault of the technology.

Target selection is another challenge for eye input to computers. While a computer mouse includes both movement tracking and selection capabilities, there is no equivalent or inherent "button click" capability for the eye. Using an eye tracking system for computer input, therefore, requires an additional and satisfactory implementation of the select operation that typically follows pointing. One possibility is to use a separate physical switch for selection. The hand, a head action, or even a sip-and-puff straw can activate the switch.

Speech is another potential modality for selection. Of course, these are not viable options if the goal is eyes-only input. Other possibilities engage the eye tracking technology in some manner. The most common method is dwell-time selection – maintaining the point of fixation on a selectable target for a pre-determined time interval (e.g., 700 ms). Dwell-time selection has performance implications. Too long and the user is frustrated. Too short and unintended selections occur. Blinks, winks, or nods are other selection possibilities, provided the eye tracking system includes the requisite image processing capabilities.
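Dwell-time selection as described above can be sketched as a simple loop over gaze samples. The following is only an illustration under stated assumptions: the function name and the sample format (timestamp in milliseconds, x, y) are hypothetical, the target is assumed to be circular, and the dwell timer is assumed to reset whenever the estimated point of gaze leaves the target.

```python
import math


def dwell_select(samples, target_center, target_radius, dwell_ms=700):
    """Return the timestamp (ms) at which a dwell-time selection fires,
    or None if the gaze never stays on the target long enough.

    samples: iterable of (t_ms, x, y) gaze estimates in time order.
    """
    dwell_start = None
    for t, x, y in samples:
        inside = math.hypot(x - target_center[0],
                            y - target_center[1]) <= target_radius
        if inside:
            if dwell_start is None:
                dwell_start = t              # gaze entered the target
            if t - dwell_start >= dwell_ms:
                return t                     # dwell interval completed
        else:
            dwell_start = None               # gaze left the target: reset
    return None


# Steady fixation on a target, sampled roughly every 33 ms (about 30 Hz).
steady = [(i * 33, 100, 100) for i in range(40)]
print(dwell_select(steady, (100, 100), 40))
```

The sketch makes the trade-off in the text concrete: a larger `dwell_ms` delays every selection, while a smaller value makes it more likely that a brief glance at a target triggers an unintended selection.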

It is clear from the brief characterization of eye input above that eye tracking systems present significant challenges when used for computer input. There are a myriad of parameters that are ripe for experimental testing. Whether it is the selection method, the duration of dwells or blinks for selection, or techniques to filter, alias, or smooth a noisy data stream, a methodology that allows for the empirical evaluation and comparison of alternatives is necessary. Two example evaluations using eye trackers are elaborated below. Both embrace the ISO standardized testing methodology for pointing devices in the context of eye tracking for computer input.

4 EXAMPLE #1 – DWELL-TIME VS. KEY SELECTION

In this first example, three selection methods for an eye tracker are compared.¹ A fourth condition – a mouse – is used as a baseline condition. The idea of a baseline condition is to include a technique that is commonly known and that has been tested previously in experiments of a similar nature. If the results for the baseline condition match those for the same condition in previous research, the methodology "checks out", so to speak.

4.1 Participants

Sixteen paid volunteer participants (11 male, 5 female) were recruited from the local university campus. Participants ranged from 22 to 33 years (mean = 25). All were daily users of computers, reporting 4 to 12 hours usage per day (mean = 7). None had prior experience with eye tracking. All participants had normal vision, except one who wore contact lenses. Nine participants were right-eye dominant, seven left-eye dominant, as determined using an eye dominance test [5].

4.2 Apparatus

An Arrington Research² ViewPoint head-fixed eye tracking system served as the primary input device (Figure 5). The eye tracker was connected to a conventional desktop PC running Microsoft Windows XP. The system's display was a 19" 1280 × 1024 LCD. The eye tracker sampled at 30 Hz with an accuracy of 0.25° to 1.0° of visual arc, or about 10 to 40 pixels with the configuration used.

Figure 5. Experimental setup

The experimental software was a discrete-task implementation of the multi-directional tapping test in ISO 9241-9, as described in Figure 3. It is similar in operation to the experimental software in an early ISO-conforming evaluation for pointing devices [6]. Raw eye data and event data were collected and saved for follow-up analyses.

4.3 Procedure

Participants were briefed on the purpose and objectives of the experiment and on the operation of the eye tracker and the selection methods employed. They sat at a viewing distance of approximately 60 cm from the display. Calibration was performed before the first eye technique, with re-calibration as needed.

At the onset of each trial, a home square appeared at the centre of the screen. See Figure 6a. At the same time, the circle for the designated target was outlined in blue with a blue dot in the centre. The home square disappeared after participants dwelled on it, pressed the SPACE key, or clicked the left mouse button depending on the interaction technique. To exclude physical reaction time, positioning time started when the eye or mouse moved after the home square disappeared. The task was to select the target circle. When the target circle received focus, it changed to white with a red dot in the center (Figure 6b). The dot helped participants fixate at the centre of the target. The grey background was designed to reduce the eye stress caused by colors, such as a white background. For the three eye techniques, the mouse pointer was hidden to reduce visual distraction.

(a) (b)

Figure 6. Multi-directional target selection task. (a) gaze on home square with target identified (b) gaze on target

An interval of 2.5 seconds was allowed to complete a trial after the home square disappeared. If no target selection occurred within 2.5 seconds, a time-out error was recorded. Then, the next trial followed.

Participants were instructed to point to the target as quickly as possible (look at the target or move the mouse depending on the input method), and select the target as quickly as possible (dwell on the target, press the SPACE key, or click the left mouse button depending on the input method). After finishing the trials, the participants were interviewed and completed a questionnaire.

4.4 Design

The experiment was a 4 × 2 × 2 within-subjects design. The independent variables and levels were as follows:

- Input Method - ETL, ETS, ESK, Mouse

- Target Width - 75, 100 pixels

- Target Distance - 275, 350 pixels

The Eye Tracker Long (ETL) input method required participants to look at an on-screen target and dwell on it for 750 ms for selection. The dwell time was 500 ms for the Eye Tracker Short (ETS) technique. The Eye+SPACE Key (ESK) technique allowed participants to "point" with the eye and "select" by pressing the SPACE key upon fixation. To minimize asymmetric learning effects, the four input methods were counterbalanced using a 4 × 4 balanced Latin square.
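A balanced Latin square of the kind mentioned above can be generated with a standard construction for an even number of conditions. The sketch below is illustrative only: the function name is hypothetical, and the specific row order and the assignment of participants to rows (here, participant index modulo 4) are assumptions, since the chapter does not give the square that was actually used.

```python
def balanced_latin_square(conditions):
    """Build a balanced Latin square for an even number of conditions.

    Each condition appears once in every row and every column, and each
    condition immediately follows every other condition exactly once.
    """
    n = len(conditions)
    if n % 2 != 0:
        raise ValueError("this construction requires an even number of conditions")
    # First row follows the pattern 0, 1, n-1, 2, n-2, ...
    first = [0]
    low, high = 1, n - 1
    while len(first) < n:
        first.append(low)
        low += 1
        if len(first) < n:
            first.append(high)
            high -= 1
    # Each later row shifts every entry of the first row by one (mod n).
    return [[conditions[(i + shift) % n] for i in first] for shift in range(n)]


square = balanced_latin_square(["ETL", "ETS", "ESK", "Mouse"])
for participant in range(16):
    order = square[participant % 4]   # assumed assignment: 4 participants per row
    print(participant + 1, order)
```

With 16 participants and four rows, each order of presentation is used by four participants.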

The two additional independent variables were included as recommended in ISO 9241-9 to ensure the trials covered a reasonable and representative range of difficulties. Target Width was the diameter of the target circle. Target Distance was the radius of the layout circle, which was the distance from the center of the home square to the center of the target circle.
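To get a feel for the range of difficulties these conditions cover, the nominal index of difficulty can be computed for each width-distance pair. This is a small sketch that assumes the same formulation as Equation 2, with the nominal target width W in place of the effective width We; the function name is hypothetical.

```python
import math


def index_of_difficulty(distance, width):
    """Nominal index of difficulty in bits: log2(D / W + 1)."""
    return math.log2(distance / width + 1)


widths = [75, 100]        # target widths in pixels
distances = [275, 350]    # target distances in pixels

for d in distances:
    for w in widths:
        print(f"D = {d}, W = {w}: ID = {index_of_difficulty(d, w):.2f} bits")
```

Under this assumption the four conditions span roughly 1.9 to 2.5 bits, a fairly narrow and easy range.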

For each test condition, 16 target circles were presented for selection (Figure 6), with each selection constituting a trial. The target width and distance conditions were randomized within each input method. The order of presenting the 16 target circles was also randomized. The dependent variables were throughput (bits/s), movement time (ms), and error rate (%). As movement time is represented in the calculation of throughput (see Equation 1), separate analyses for movement time are not presented here.

The total number of trials was 16 participants × 4 interaction techniques × 2 distances × 2 widths × 16 trials = 4096.

4.5 Results and Discussion

Throughput

As evident in Figure 7, there was a significant effect of input method on throughput (F(3,45) = 47.46, p < .0001). The throughput for the mouse of 4.68 bits/s is in the range cited earlier for ISO 9241-9 mouse evaluations [28], thus verifying the methodology. The 500 ms dwell time of the ETS technique seemed just right. ETL had a lower throughput than ETS. This is in part due to the 250 ms difference in the dwell time settings. ESK was the best among the three eye tracking techniques. This is attributed to participants effectively pressing the SPACE key immediately upon fixation on the target. This eliminated the need to wait for selection. The throughput of the ESK technique was 3.78 bits/s, which approaches the 4.68 bits/s for the mouse. Considering the mouse has the best performance among non-keyboard input devices [28], the ESK technique is very promising. As the user must press the SPACE key (or other key), this observation is tempered by acknowledging that the ESK technique is only appropriate where an additional key press is possible and practical.

Figure 7. Throughput (bits/s) by input method. Error bars show ±1 SD.

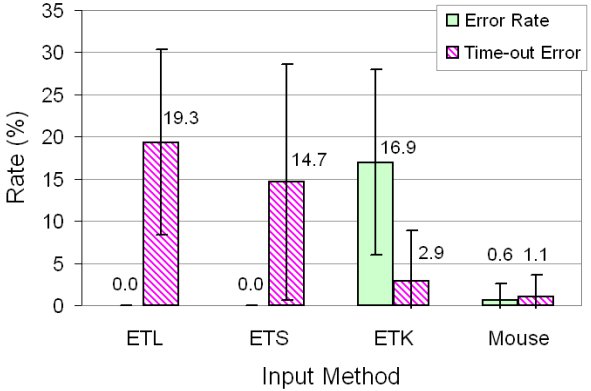

Error Rates and Time-out Errors

For the ETL and ETS techniques, participants selected the target by dwelling on it. Thus, the outcome was either a selection or a time-out error. Therefore, the error rates for ETL and ETS were zero, as shown in Figure 8. Time-out errors for the ETL, ETS and ESK techniques were mainly caused by eye jitter and limitations in the eye tracker's accuracy. The longer the time taken to perform a selection, the greater the chance of a time-out error. ESK had 2.9% time-out errors, which is substantially less than for the other eye conditions, and approaches the 1.1% time-out errors for the mouse.

The ESK method had a high error rate. This is a classic example of the speed-accuracy tradeoff. Here, it is attributed to participants pressing the SPACE key just before fixating on the target, or slightly after the eye moved out of the target. Because no participant had prior experience with eye tracking, few could proficiently perform the coordinated task of eye pointing and hand pressing of the SPACE key. The error rate for the ESK technique varied considerably across participants (SD = 11.4, max = 35.6, min = 3.1). Participants would likely demonstrate lower error rates if further training were provided and improved feedback mechanisms were considered and tested.

Figure 8. Error rate and time-out error by input method.

Questionnaire

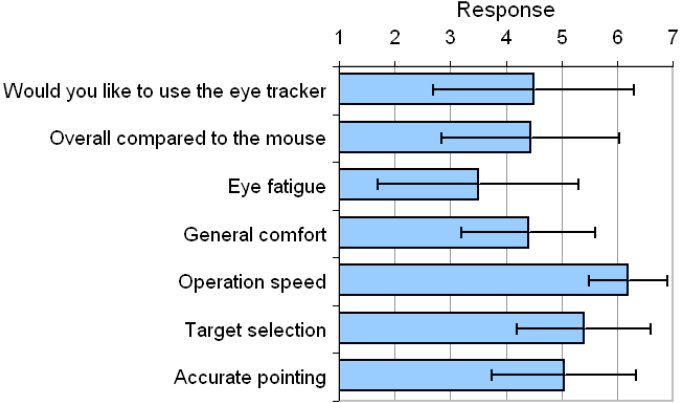

The device assessment questionnaire consisted of 12 items, modeled on the response items suggested in ISO 9241-9 (see Figure 4). The questions pertained to eye tracking in general, as opposed to a particular eye tracking selection technique. Each response was rated on a 7-point scale, with 7 as the most favorable response, 4 the mid-point, and 1 the least favorable response. Responses for 7 of the 12 items are shown in Figure 9.

Figure 9. Eye tracker device assessment questionnaire. Response 7 is the most favorable, response 1 the least favorable.

As seen, participants generally liked the fast positioning time of the eye tracker. On operation speed, the mean score was high at 6.2. However, eye fatigue was a concern (3.5). Participants complained that staring at so many targets made their eyes dry and uncomfortable. Eye fatigue scored lowest among all the questions. Participants gave eye tracking a modestly favorable response overall of 4.5, just slightly higher than the mid-point. Discussions following the experiment revealed that participants liked to use eye tracking and believed it could perform similarly to the mouse. Of the three eye tracking techniques, participants expressed a preference for the Eye+SPACE Key technique. Concerns were voiced, however, on the likely expense of eye tracking systems, the troublesome calibration procedure, and the uncomfortable requirement of maintaining a fixed head position.

5 EXAMPLE #2 – BLINK VS. DWELL-TIME SELECTION

The second example evaluates and compares dwell time versus blink for target selection. The evaluation also pushes the limits of eye tracking technology by using small targets.

5.1 Participants

Twelve volunteer participants (9 male, 3 female) were recruited from the local university campus. None had prior experience using an eye tracker but all were experienced mouse users. Vision was normal for all participants except for one who wore glasses and another who wore contact lenses during the experiment.

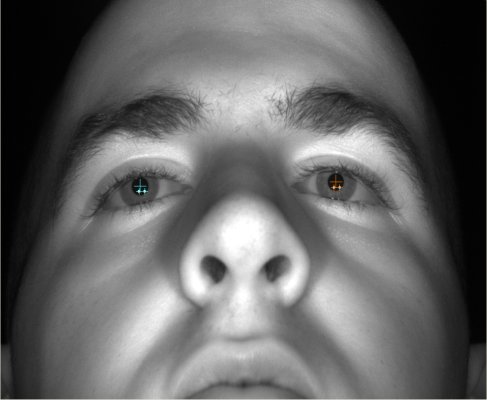

5.2 Apparatus

The eye tracker was an EyeTech Digital Systems³ TM3 eye tracker working with Quick Glance version 5.0.1 software. Unlike the device used in the previous study, the TM3 is reasonably portable as there is no chin rest. It is also less cumbersome to set up and calibrate. The device uses infrared emitters to illuminate the eyes to gather reference points for tracking eye position and movement. The tracker confirms proper detection of the user's eyes through cross hairs on the image of the face (Figure 10).

Calibration presents 16 circles on the display, with the user looking at the center of each for 1.5 seconds until the next target appears. The procedure returns a numeric score for each eye, where a lower score means less estimated deviation from the point of fixation to the centre of the target. Before the start of the experiment, the eye tracker was calibrated iteratively to achieve a score no higher than 4.0 for each eye, with no more than a 0.4 deviation between the two eyes. Calibration took an average of six minutes per participant.

Figure 10. QuickGlance software displaying cross hairs on user's eyes for reference points

Dwell-time selection uses a default dwell area of 20 mm where the fixation point must remain within the designated area for a specified amount of time before a click event occurs. We used the smallest allowable setting of 5 mm in the experiment. An error was logged if the computed fixation point was outside the target at the end of the dwell interval. An audible click was heard at the same time as a selection event. Cursor movement was smoothed using a smoothing factor of 10, as set in the software.

The camera operates at 30 frames per second, with a 16 × 12 cm field of view, and a pixel density of 64.6 pixels/cm. The host computer was a Lenovo 3000 N100 laptop with a 15" screen running Microsoft Windows XP. The eye tracker was placed in front of, and below, the screen with the angle adjusted accordingly.

5.3 Procedure

Following a briefing on the goals and objectives of the experiment, participants were asked to sit in front of the laptop with their head approximately 60 cm in front of the display and eye tracker (Figure 11). They were asked to sit comfortably and to try not to move their head during the experiment.

Figure 11. Experiment setup. The EyeTech TM3 eye tracker is seen below the LCD.

After successful calibration, the experimental software was launched. The software was a Java application implementing a serial-task version of the multi-directional tapping test specified in ISO 9241-9. Participants were instructed on how to select with the eye tracker and on the presence of the "click" auditory feedback. They were allowed to practice as much as desired before beginning the experiment. When participants were ready to start, the software was launched in data collection mode. They were instructed to begin each block of trials by selecting the red target "as quickly and accurately as possible". This initiated the time measurement.

After the experiment was finished, each participant completed a questionnaire to solicit qualitative responses on their experience with the eye tracker.

5.4 Design

The experiment was a 3 × 2 × 2 × 4 within-subjects design. The independent variables and levels were as follows:

- Input Method – Blink (eye tracker), Dwell (eye tracker), Mouse

- Target Width – 16, 32 pixels (6, 12 mm)

- Target Distance – 256, 512 pixels

- Block – 1, 2, 3, 4

The eye tracker conditions differed in the selection technique. For both blink and dwell time, the duration was set to 500 ms. The target width conditions are at the extreme end of the capabilities of eye tracking technology for selecting small targets. Thus, the results are expected to be somewhat poorer than for larger targets.

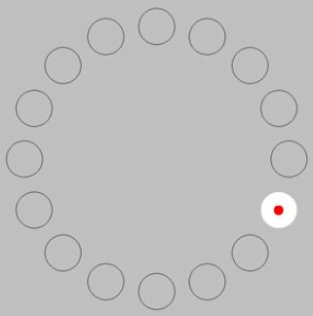

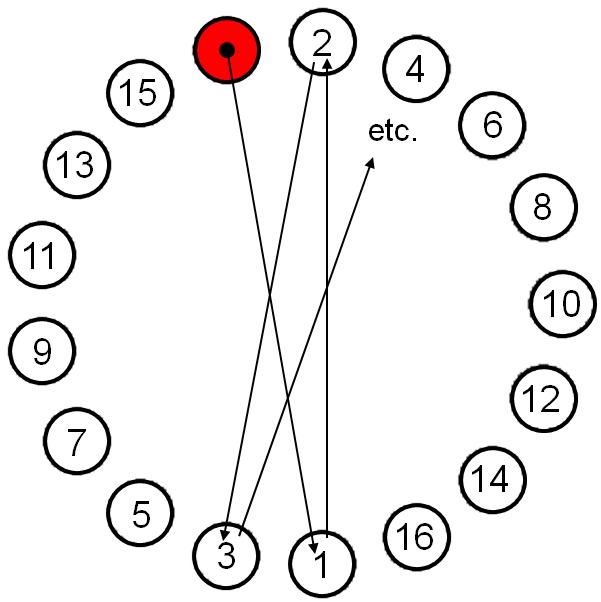

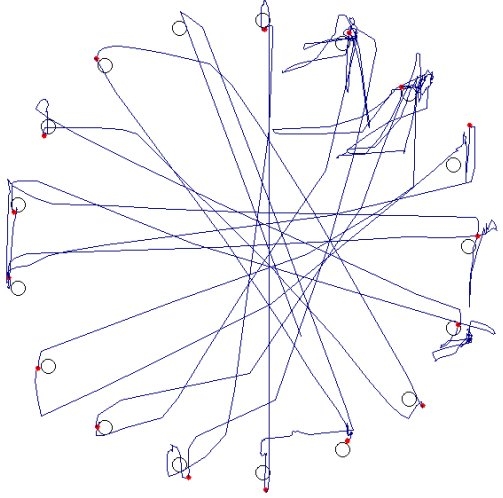

For each target distance-width condition, the participant was presented with 17 circular targets arranged in a circle. The diameter of the layout circle is the target distance. The diameter of the target circle is the target width. Figure 12 shows an example with the order of target selection indicated. The first trial begins with the first selection (circle in red). Each arrangement of 17 targets creates 16 trials. With each selection, the next target is highlighted in red.
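The layout described above can be sketched as follows. The placement of targets on the layout circle follows the text; the selection order is an assumption, since the actual order appears only in Figure 12. The sketch uses the common pattern in which each selection moves to the target roughly opposite the current one, so that every movement spans approximately the layout diameter. Function names are hypothetical.

```python
import math


def layout_targets(n_targets, layout_diameter, center=(0.0, 0.0)):
    """Centers of n_targets circles evenly spaced on the layout circle."""
    r = layout_diameter / 2
    return [
        (center[0] + r * math.cos(2 * math.pi * i / n_targets),
         center[1] + r * math.sin(2 * math.pi * i / n_targets))
        for i in range(n_targets)
    ]


def selection_order(n_targets):
    """Assumed order: jump (n + 1) / 2 positions around the circle each time,
    which visits every target once when n_targets is odd."""
    if n_targets % 2 == 0:
        raise ValueError("this ordering assumes an odd number of targets")
    step = (n_targets + 1) // 2
    return [(k * step) % n_targets for k in range(n_targets)]


targets = layout_targets(17, 512)
order = selection_order(17)
print(order)
# The first selection starts the block; the 16 movements that follow are the trials.
trials = list(zip(order[:-1], order[1:]))
print(len(trials))
```

With 17 targets, the movement between consecutive targets in this ordering is slightly shorter than the layout diameter, which is why the diameter serves as a reasonable value for the target distance.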

Figure 12. Sample block with order of target selection

The dependent variables were throughput (bits/s), movement time (ms), and error rate (%). As with the last example, analyses for movement time are not presented here.

The order of administering the input methods was counterbalanced. Given the overhead in calibrating the eye tracker, a nested (2 × 2) × 2 counterbalancing scheme was used, with 3 participants in each group:

- Blink, Dwell, Mouse

- Dwell, Blink, Mouse

- Mouse, Blink, Dwell

- Mouse, Dwell, Blink

After each block was completed, a dialog box displayed the participant's results for the block. Participants were encouraged to rest before continuing to the next block. After all 4 blocks were completed, a final dialog displayed their overall results.

The total number of trials was 12 participants × 3 input methods × 2 widths × 2 distances × 4 blocks × 16 trials/block = 9,216.

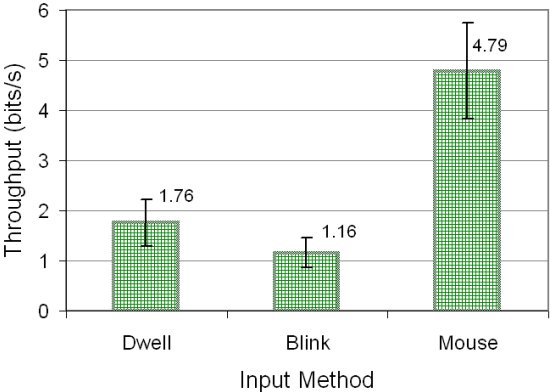

5.5 Results and Discussion

Throughput

As Figure 13 depicts, and as expected, the mouse had a much higher throughput than either eye tracking condition (F(2,11) = 159.36, p < .0001). The throughput for the mouse was 4.79 bits/s, which was close to the value of 4.68 bits/s reported in the previous study (using a different host computer and different software). This alone is testimony to the consistency ISO 9241-9 brings to pointing device evaluations.

Figure 13. Throughput (bits/s) by input method

Comparing the eye tracking conditions, dwell-time selection had a much higher throughput. The throughput of 1.79 bits/s using dwell-time selection was about 54% higher than the throughput of 1.16 bits/s observed when using blink selection. These values are low compared to the mouse and compared to the eye tracking evaluation presented in the preceding section. However, it is important to keep in mind at least two factors. One is the use of a fixed-head tracking system (the Arrington Research ViewPoint) in the preceding evaluation, which inherently simplifies the eye tracking problem. The other is the very stringent target conditions in the present evaluation. Error rates were (understandably) high and somewhat erratic, as discussed further in the next section. This, no doubt, played a significant role in the low values for throughput. Notably, throughput values below 2 bits/s are common, even in studies using ISO 9241-9. Examples include a remote pointing device at 1.4 bits/s [19], a touchpad at 1.8 bits/s [6], a touchpad at 1.0 bits/s [21], and a joystick at 1.8 bits/s [20]. So, overall, the throughput values for the eye tracking conditions presented here are promising.

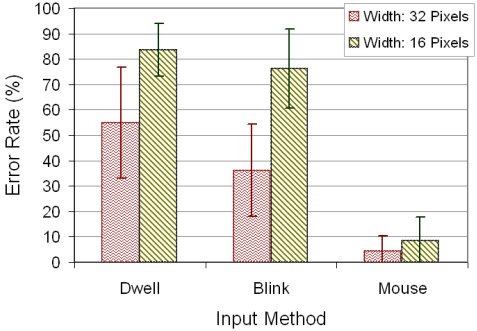

Error Rates and Accuracy

Given the small targets in the present study, error rates were understandably high. Our first analysis considers errors over the four blocks of trials. As seen in Figure 14a, participants' accuracy improved with practice (F(3,10) = 3.247, p < .05). Improvement for the eye tracking conditions was most pronounced with dwell-time selection. Nevertheless, even on the 4th block, error rates were high: 52.2% for blink selection and 58.3% for dwell-time selection. During the experiment, most participants remained still, as instructed. Clearly, they were concerned with improving their performance, and they did. It is important to bear in mind that each block took approximately one minute to complete; so, there was insufficient practice for a true learning effect to emerge.

(a) (b)

Figure 14. Error rates (a) by block and input method (b) by input method and target width. Error bars show ±1 SD.

Figure 14b shows a dramatic increase in errors for the eye tracking conditions when selecting very small targets. Obviously target width was the catalyst. The target widths of 32 and 16 pixels translated into on-screen physical widths of about 12 mm and 6 mm, respectively. Combined with a viewing distance of about 60 cm, the targets subtended visual angles of about 1.15° and 0.57°, respectively. Thus, the target sizes taxed the limits of eye physiology. Considering the eye's constant micro-saccades and the fovea size, even a "perfect" eye tracker limits selection to targets subtending ≈1° of visual angle [2]. So, the results in Figure 14 are expected.
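The visual angles quoted above follow from basic trigonometry: a target of physical size s viewed from distance d subtends an angle of 2·atan(s / 2d). A short check, with a hypothetical function name:

```python
import math


def visual_angle_deg(size_mm, viewing_distance_mm):
    """Visual angle, in degrees, subtended by an object of the given size."""
    return math.degrees(2 * math.atan(size_mm / (2 * viewing_distance_mm)))


for width_mm in (12, 6):
    print(f"{width_mm} mm at 600 mm: {visual_angle_deg(width_mm, 600):.2f} degrees")
# prints 1.15 degrees for the 12 mm target and 0.57 degrees for the 6 mm target
```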

To provide further insight on the eye tracking experience, Figure 15 traces the changes in eye fixation for one block of trials using dwell-time selection with target distance = 512 pixels and target width = 16 pixels. Saccades are evident in the straight-line segments. At the end of each trial, most traces reveal a stream of fluctuations in the estimated point of gaze. The final selection coordinate (small circle, red) was slightly off the target in most cases.

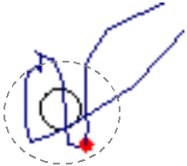

Figure 15. Multi-directional test using an eye tracker with dwell-time selection. The targets are circles with diameter 16 pixels, or about 6 mm as measured on the display. Viewing distance was about 60 cm.

In the research literature, many creative solutions are proposed and tested to accommodate the accuracy and jitter problems when using an eye tracker for computer input. An example is shown in Figure 16a. Two ideas are proposed: an expansion factor (EF) and grab-and-hold selection [24]. Using an expansion factor means that a selectable target is automatically assigned a larger, expanded area according to an expansion factor set in the software. The expanded area is not seen by the user, but is used in the interface to create a virtual expansion to the targeted region. The idea has been used successfully in conventional graphical user interfaces [9, 23], and may also work with eye tracking interfaces. Grab-and-hold is a technique to improve dwell-time selection. As soon as a fixation point is detected within the target or the target's expanded area, the target is grabbed and the dwell timer is started. Provided there is no saccade during the dwell interval, the target is selected at the end of the dwell interval, even if the fixation points exhibit drift or jitter and never actually enter the target. This is seen in the fixation area in Figure 16a.


Figure 16. (a) Virtual expansion of target area to facilitate selection [24] (b) Target from the 7 o'clock position in Figure 15. An error becomes a correct selection using an expansion factor (dotted line).

Figure 16b shows one of the target selections logged as an error in Figure 15. A hypothetical expanded area is shown around the target. Had the experimental software used the expanded area and grab-and-hold selection, the result would likely have been a correct selection. The example above is given mainly to acknowledge the sort of research initiatives that can be explored with an evaluation methodology like that presented in the two examples here and offered in ISO 9241-9.
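The expansion factor and grab-and-hold ideas described above can be sketched in a few lines. This is a minimal illustration, not the implementation used in [24] or in the experiment reported here. The circular target, the expansion factor of 2.0, the 500 ms dwell interval, and the crude saccade test (a jump of more than 40 pixels between consecutive samples) are all assumptions chosen for the example.

```python
import math
from dataclasses import dataclass

@dataclass
class Target:
    x: float       # centre, in pixels
    y: float
    radius: float  # visible radius, in pixels

def in_expanded_area(target, gx, gy, ef):
    """True if the gaze point lies inside the target's invisible expanded area."""
    return math.hypot(gx - target.x, gy - target.y) <= target.radius * ef

def grab_and_hold(samples, target, ef=2.0, dwell_ms=500.0, saccade_px=40.0):
    """samples: iterable of (t_ms, x, y) gaze estimates.
    Returns the time of selection in ms, or None if no selection occurs."""
    grab_time = None
    prev = None
    for t, x, y in samples:
        # Hypothetical saccade test: a large jump releases a grabbed target.
        if grab_time is not None and prev is not None:
            if math.hypot(x - prev[0], y - prev[1]) > saccade_px:
                grab_time = None
        # Grab the target as soon as gaze enters the expanded area.
        if grab_time is None and in_expanded_area(target, x, y, ef):
            grab_time = t
        # Once grabbed, drift and jitter are ignored until the dwell elapses.
        if grab_time is not None and t - grab_time >= dwell_ms:
            return t
        prev = (x, y)
    return None

# A 16-pixel target with gaze jittering just outside its visible edge.
target = Target(0, 0, 8)
jitter = [(0, 12, 3), (100, 11, 4), (200, 13, 2), (300, 12, 5),
          (400, 10, 3), (500, 12, 4), (600, 11, 3)]
print(grab_and_hold(jitter, target, ef=2.0))  # 500
print(grab_and_hold(jitter, target, ef=1.0))  # None
```

With no expansion (`ef=1.0`) the jittering gaze never enters the target and nothing is selected, which corresponds to the error logged in Figure 15. With the expanded area the same gaze samples yield a selection.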

Questionnaire and Interviews

The eye tracker assessment questionnaire given at the end of the experiment included 11 items asking participants about their overall experience, preferences, eye tracking performance, and fatigue. The rating was done on a 5-point Likert scale with 1 the least favorable response and 5 the most favorable. The mid-point score was 3.

Participants were impressed with the performance of the eye tracker, in particular with its smoothness (3.6) and operation speed (3.7). Eye fatigue was the biggest concern (1.2). In the discussions after the experiment, participants said they enjoyed using the eye tracker but still preferred to use a standard mouse. There was a concern about the long calibration process, keeping the head still for so long, and maintaining a specific distance from the eye tracker. Participants generally preferred blink selection to dwell-time selection, as they felt it afforded more control over when to click. Dwell-time selection also produced involuntary clicks, as noted by some participants.

6 EXPERIMENTAL VARIABLES

The two examples above are experimental in nature. Although the title of this chapter does not specifically reference an experimental methodology, one is implied. An evaluation of an eye tracking system usually aims to answer research questions such as "What value of dwell time yields the best user performance in terms of speed and accuracy?", "Is user performance better using dwell-time selection or blink selection?", or "If a certain algorithm or interaction modification is introduced, does user performance improve?". These questions are best answered using a controlled experiment; two examples were given above. One advantage of controlled experiments is that conclusions of a "cause and effect" nature are possible. If, for example, the experiment involved comparing two or more dwell-time selection intervals and user performance was best with a particular interval, then it is possible to conclude that the improvement was due to, or caused by, the dwell-time selection interval – provided the experiment was designed and conducted according to an established methodology for experimental research with human participants. This is precisely the sort of information researchers are pursuing in their investigations.

While a complete tutorial on experiment design is beyond the scope of this chapter, some final comments are offered on the two primary variables in experimental research. Whether the experiment engages an eye tracker for computer input or tests some other aspect of the human-computer interface, the independent variables and the dependent variables set the tone for the entire experiment.

6.1 Independent Variables

Independent variables (a.k.a. factors) are the conditions tested. They are so named because they are under control of the investigator, not the participant; thus, they are "independent" of the participant. The settings of the variable or factor are called "levels". Any circumstance that might affect performance is potentially an independent variable in an experiment. Examples include key size (big vs. small), key press feedback (click vs. no click), filtering algorithm (on vs. off), user position (standing vs. sitting vs. walking), ambient lighting (sun vs. room vs. dim vs. dark), gender (male vs. female), and so on. There is no limit to the possibilities.

"Input method" was cited as an independent variable in both examples above. Although quite broad, the term served to encompass the levels, which were 750 ms dwell time vs. 500 ms dwell time vs. key press selection vs. mouse in the first example and dwell time vs. blink vs. mouse in the second example. Both examples investigated methods of selection; however, as a mouse condition was included, "input method" is a reasonable name for the factor.

In experimental evaluations of eye tracking systems, numerous other independent variables appear in the literature. A few examples are given in Figure 17. Note that there is both a name for the independent variable (left column) and a delimitation of two or more levels of the variable (right column).

| Independent Variable | Study | Description |
|---|---|---|
| Cursor redressment method | Zhang, Ren, & Zha, 2008 | Comparison of four methods to improve the stability of the eye cursor by counteracting eye jitter. The methods were force field, speed reduction, warping to target centre, and no action. |
| Prediction mode | Zhang & MacKenzie, 2007 | Comparison of letter prediction and word prediction in eye typing using a soft keyboard. |
| Algorithm | Zhai, Morimoto, & Ihde, 1999 | Comparison of two settings of an algorithm to improve target selection by warping the eye cursor's area to encompass the target. |
| Fisheye mode | Ashmore, Duchowski, & Shoemaker, 2005 | Comparison of four fisheye techniques to improve target selection. The techniques were no action, always on, and appearing only after fixation begins (with and without a grab-and-hold algorithm). |
| Typing system | Itoh, Aoki, & Hansen, 2006 | Comparison of three eye typing systems for Japanese text entry. The systems were Dasher and two variants of GazeTalk (positioning the text window either at the top left or in the centre of the display). |
| Feedback mode | Majaranta, MacKenzie, Aula, & Räihä, 2006 | Comparison of four feedback modes for keys on a soft keyboard used for text entry. The modes were visual only, click+visual, speech+visual, and speech only. |

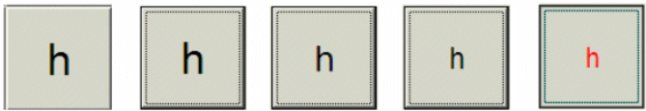

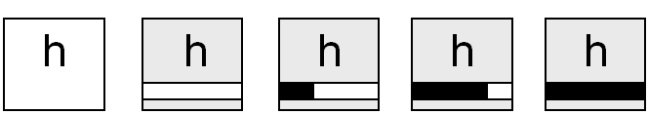

The last entry in Figure 17, feedback mode, is a typical example of an aspect of eye tracking interaction well suited to experimental testing. Providing users with feedback about the state of the system is a long-held principle of good user interface design [e.g., 25, p. 17]. And the same holds true for eye tracking systems. More true, in fact. Even though eye tracking systems are often configured to emulate a mouse, an on-screen tracking symbol typically is not used, as it is distracting to the user and reduces the naturalness of look-select interaction. If dwell-time selection is used, pre-selection feedback helps inform the user that the point of gaze is on a selectable item (and that selection is imminent!). The feedback-mode example in Figure 17 compared four types of feedback: visual only, click+visual, speech+visual, and speech only. The three visual modes indicated the progress of the dwell interval using a shrinking letter, which turned red on final selection. This is shown in Figure 18a. The idea of a shrinking letter is to "draw in" the user's visual attention during the dwell interval, and thus improve the stability in the point of gaze. An alternative is a simple progress bar, as shown in Figure 18b. The shrinking-letter idea worked. The text entry speed was significantly higher using the shrinking-letter feedback. Of the three visual feedback modes, the best performance occurred with the addition of auditory feedback using a "click" sound on selection.

Figure 18. Pre-selection and dwell-time feedback. Pre-selection begins in the second frame. Dwell status is indicated using (a) a shrinking letter [22] or (b) a progress bar [10].

6.2 Dependent Variables

Dependent variables are the measured behaviours of participants. They are dependent because they "depend on" what participants do. The most common dependent variables relate to the speed and accuracy with which participants perform tasks in the experiment. In the case of text entry using eye typing, speed is usually reported in words per minute or characters per minute. However, in interactions such as basic pointing and selecting, speed is usually reported in its reciprocal form, the time to complete a task. Beginning with ISO 9241-9 and with related work on computer pointing devices, throughput (bits/s) is also widely used as a dependent variable. As noted above, throughput is a composite measure including both the speed and accuracy in participants' responses.
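As an illustration of how throughput combines speed and accuracy, the sketch below computes it for one sequence of trials. It assumes the effective-width formulation commonly used with ISO 9241-9, in which the effective width is 4.133 times the standard deviation of the selection coordinates and the effective index of difficulty is divided by the mean movement time. For simplicity the nominal target distance is used in place of an effective distance, and the function and parameter names are hypothetical.

```python
import math
import statistics

def throughput(distance_px, deviations_px, movement_times_s):
    """Throughput (bits/s) for one sequence of trials.

    distance_px      -- nominal distance between targets
    deviations_px    -- signed distance of each selection from the target
                        centre, measured along the task axis
    movement_times_s -- time to complete each trial, in seconds
    """
    # Effective width reflects the spread of selections actually produced.
    effective_width = 4.133 * statistics.stdev(deviations_px)
    # Effective index of difficulty, in bits.
    id_e = math.log2(distance_px / effective_width + 1)
    return id_e / statistics.mean(movement_times_s)

# Illustrative (made-up) values for a 512-pixel movement.
tight = throughput(512, [-2, -1, 0, 1, 2], [2.0, 2.1, 1.9, 2.0, 2.0])
loose = throughput(512, [-20, -10, 0, 10, 20], [2.0, 2.1, 1.9, 2.0, 2.0])
print(f"tight spread: {tight:.2f} bits/s, loose spread: {loose:.2f} bits/s")
```

The two calls use the same movement times. The sequence with the wider spread of selection coordinates yields the lower throughput, which is how accuracy enters the measure.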

Besides coarse measures of speed, accuracy, and throughput, researchers often devise new dependent measures as appropriate for an interaction technique under investigation. In fact, any observable, measurable aspect of the interaction is a potential dependent variable, provided it offers insight into human performance with an interaction technique. Some examples from the eye tracking literature are given in Figure 19.

| Dependent Variable | Study | Description |
|---|---|---|
| Read-text events | Majaranta et al., 2006 | During eye typing, the number of times per phrase the point of gaze switched to the typed text field for review of the text typed so far. |
| Overproduction rate | Hansen, Tørning, Johansen, Itoh, & Aoki, 2004; Itoh et al., 2006 | During eye typing, the ratio of the actual number of gaze selections to the minimum number needed for construction of a given sentence. |
| Entry of target events | Zhang et al., 2008 | During target selection, the number of times per trial the eye cursor entered the target region. |
| Saccade rate | Takahashi, Nakayama, & Shimizu, 2000 | During an audio-response task, the number of saccades per second. |
| Scan paths | Pan et al., 2004 | During viewing of Web pages, the minimum string distance between two observed sequences of scan paths that achieved the same effect. |
| Off-road glances | Sodhi et al., 2002 | In a driving task, the number of times the driver made an off-road glance (at the radio, mirror, or odometer). |

It is easy to imagine how the dependent variables in Figure 19 can elicit a finer granularity of insight into participant behaviour. They provide information not just on the overall responses for trials but on aspects of the interaction that occur during trials.
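Two of the measures in Figure 19 are simple enough to sketch in code. The first function computes an overproduction rate as the ratio described in the figure. The second computes a minimum string distance using the standard Levenshtein algorithm. Encoding a scan path as a string of region labels is an assumption made here for illustration, and may differ from the procedure used in the cited study.

```python
def overproduction_rate(actual_selections: int, minimum_selections: int) -> float:
    """Ratio of gaze selections made to the minimum number needed."""
    return actual_selections / minimum_selections

def minimum_string_distance(a: str, b: str) -> int:
    """Levenshtein distance: the fewest insertions, deletions, and
    substitutions needed to turn string a into string b."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]

# 30 selections to enter a sentence that needs at least 24.
print(overproduction_rate(30, 24))              # 1.25
# Hypothetical scan paths over screen regions labelled A to D.
print(minimum_string_distance("ABCD", "ABDC"))  # 2
```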

In most research papers, the independent and dependent variables come together in the results section in statements of the form, "there was a significant effect of {independent variable} on {dependent variable}". The statement is typically accompanied by a supporting statistical test, such as an analysis of variance. Consult any of the papers cited in Figure 17 and Figure 19 for examples.
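To make the form of such a test concrete, the sketch below computes the F statistic for a one-way, between-subjects analysis of variance. This is a deliberate simplification. Studies like those described here typically use within-subjects (repeated-measures) designs, which partition the variance differently, and the scores below are made up purely for illustration.

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way between-subjects ANOVA.
    groups: one list of scores per level of the independent variable."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)
    # Variation of the level means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own level mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within

# Made-up scores for three levels of a hypothetical independent variable.
f, df1, df2 = one_way_anova_f([[1, 2, 3], [2, 3, 4], [6, 7, 8]])
print(f"F({df1},{df2}) = {f:.1f}")  # F(2,6) = 21.0
```

The F statistic and its two degrees of freedom are then compared against the F distribution to obtain the p value reported in the results statement.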

7 CONCLUSION

The central issues in evaluating eye trackers for computer input have been presented, along with two examples of experimental evaluations. One evaluation used the ArringtonResearch ViewPoint head-fixed eye tracker (see Figure 5); the other used an EyeTech Digital Systems TM3 (see Figure 11). The examples included "input method" as the main independent variable. The methods included different settings of the time interval for dwell-time selection (500 ms and 750 ms), and a comparison of dwell-time vs. blink selection. Another method combined eye pointing with key selection. In both evaluations, a mouse was included as a baseline condition. The main dependent variable was throughput (bits/s) as stipulated in ISO 9241-9 – the 2000 ISO standard on evaluating and comparing non-keyboard input devices to computers. The eye tracking conditions yielded throughput values in the range of 1.16 bits/s to 3.78 bits/s. These were as expected and were lower than the values of about 4.7 bits/s for the mouse. Overall, the eye tracking conditions show considerable promise for providing an alternative input modality for human interaction with computers.

Acknowledgments

Thanks are offered to research assistants William Zhang and Matthew Conte. This research is sponsored by the Natural Sciences and Engineering Research Council of Canada. Thanks are also extended to EyeTech Digital Systems for the loan of a TM3 eye tracker.

References

1. Ashmore, M., Duchowski, A. T., and Shoemaker, G., Efficient eye pointing with a fisheye lens, Proceedings of Graphics Interface 2005, (Toronto: Canadian Information Processing Society, 2005), 203-210.
2. Barcelos, T. S. and Morimoto, C. H., GinX: Gaze-based interface extensions, Proceedings of the ACM Symposium on Eye Tracking Research and Applications - ETRA 2008, (New York: ACM, 2008), 149-152.
3. Card, S. K., English, W. K., and Burr, B. J., Evaluation of mouse, rate-controlled isometric joystick, step keys, and text keys for text selection on a CRT, Ergonomics, 21, 1978, 601-613.
4. Chapanis, A., Man-machine engineering. Wadsworth Publishing Company, 1965.
5. Collins, J. F. and Blackwell, L. K., Effects of eye dominance and retinal distance on binocular rivalry, Perceptual and Motor Skills, 39, 1974, 747-754.
6. Douglas, S. A., Kirkpatrick, A. E., and MacKenzie, I. S., Testing pointing device performance and user assessment with the ISO 9241, Part 9 standard, Proceedings of the ACM Conference on Human Factors in Computing Systems - CHI '99, (New York: ACM, 1999), 215-222.
7. Duchowski, A. T., Eye tracking methodology: Theory and practice. Berlin: Springer, 2007.
8. Fitts, P. M., The information capacity of the human motor system in controlling the amplitude of movement, Journal of Experimental Psychology, 47, 1954, 381-391.
9. Grossman, T. and Balakrishnan, R., The bubble cursor: Enhancing target acquisition by dynamic resizing of the cursor's activation area, Proceedings of the ACM Conference on Human Factors in Computing Systems - CHI 2005, (New York: ACM, 2005), 281-290.
10. Hansen, J. P., Hansen, D. W., and Johansen, A. S., Bringing gaze-based interaction back to basics, Proceedings of HCI International 2001, (Mahwah, NJ: Erlbaum, 2001), 325-328.
11. Hansen, J. P., Tørning, K., Johansen, A. S., Itoh, K., and Aoki, H., Gaze typing compared with input by head and hand, Proceedings of the ACM Symposium on Eye Tracking Research and Applications - ETRA 2004, (New York: ACM, 2004), 131-138.
12. ISO, Ergonomic requirements for office work with visual display terminals (VDTs) - Part 9: Requirements for non-keyboard input devices (ISO 9241-9), International Organisation for Standardisation. Report Number ISO/TC 159/SC4/WG3 N147, February 15, 2000.
13. Itoh, K., Aoki, H., and Hansen, J. P., A comparative usability study of two Japanese gaze typing systems, Proceedings of the ACM Symposium on Eye Tracking Research and Applications - ETRA 2006, (New York: ACM, 2006), 59-66.
14. Kammerer, Y., Scheiter, K., and Beinhauer, W., Looking my way through the menu: The impact of menu design and multimodal input on gaze-based menu selection, Proceedings of the ACM Symposium on Eye Tracking Research and Applications - ETRA 2008, (New York: ACM, 2008), 213-220.
15. Komogortsev, O. and Khan, J., Perceptual attention focus prediction for multiple viewers in case of multimedia perceptual compression with feedback delay, Proceedings of the ACM Symposium on Eye Tracking Research and Applications - ETRA 2006, (New York: ACM, 2006), 101-108.
16. Law, B., Atkins, M. S., Kirkpatrick, A. E., and Lomas, A. J., Eye gaze patterns differentiate novice and experts in a virtual laparoscopic surgery training environment, Proceedings of the ACM Symposium on Eye Tracking Research and Applications - ETRA 2004, (New York: ACM, 2004), 41-48.
17. MacKenzie, I. S., Fitts' law as a research and design tool in human-computer interaction, Human-Computer Interaction, 7, 1992, 91-139.
18. MacKenzie, I. S. and Isokoski, P., Fitts' throughput and the speed-accuracy tradeoff, Proceedings of the ACM Conference on Human Factors in Computing Systems - CHI 2008, (New York: ACM, 2008), 1633-1636.
19. MacKenzie, I. S. and Jusoh, S., An evaluation of two input devices for remote pointing, Proceedings of the Eighth IFIP Working Conference on Engineering for Human-Computer Interaction - EHCI 2000, (Heidelberg, Germany: Springer-Verlag, 2001), 235-249.
20. MacKenzie, I. S., Kauppinen, T., and Silfverberg, M., Accuracy measures for evaluating computer pointing devices, Proceedings of the ACM Conference on Human Factors in Computing Systems - CHI 2001, (New York: ACM, 2001), 119-126.
21. MacKenzie, I. S. and Oniszczak, A., A comparison of three selection techniques for touchpads, Proceedings of the ACM Conference on Human Factors in Computing Systems - CHI '98, (New York: ACM, 1998), 336-343.
22. Majaranta, P., MacKenzie, I. S., Aula, A., and Räihä, K.-J., Effects of feedback and dwell time on eye typing speed and accuracy, Universal Access in the Information Society (UAIS), 5, 2006, 199-208.
23. McGuffin, M. and Balakrishnan, R., Acquisition of expanding targets, Proceedings of the ACM Conference on Human Factors in Computing Systems - CHI 2002, (New York: ACM, 2002), 57-64.
24. Miniotas, D., Špakov, O., and MacKenzie, I. S., Eye gaze interaction with expanding targets, Extended Abstracts of the ACM Conference on Human Factors in Computing Systems - CHI 2004, (New York: ACM, 2004), 1255-1258.
25. Norman, D. A., The design of everyday things. New York: Basic Books, 1988.
26. Pan, B., Hembrooke, H. A., Gay, G. K., Granka, L. A., Feusner, M. K., and Newman, J. K., The determinants of web page viewing behavior: An eye-tracking study, Proceedings of the ACM Symposium on Eye Tracking Research and Applications - ETRA 2004, (New York: ACM, 2004), 147-154.
27. Sodhi, M., Reimer, B., Cohen, J. L., Vastenburg, E., Kaars, R., and Kirschenbaum, S., Off road driver eye movement tracking using head-mounted devices, Proceedings of the ACM Symposium on Eye Tracking Research and Applications - ETRA 2002, (New York: ACM, 2002), 61-68.
28. Soukoreff, R. W. and MacKenzie, I. S., Towards a standard for pointing device evaluation: Perspectives on 27 years of Fitts' law research in HCI, International Journal of Human-Computer Studies, 61, 2004, 751-789.
29. Stewart, T., Ergonomics user interface standards: Are they more trouble than they are worth?, Ergonomics, 43, 2000, 1030-1044.
30. Takahashi, K., Nakayama, M., and Shimizu, Y., The response of eye movement and pupil size to audio instruction while viewing a moving target, Proceedings of the ACM Symposium on Eye Tracking Research and Applications - ETRA 2000, (New York: ACM, 2000), 131-138.
31. Tien, G. and Atkins, M. S., Improving hands-free menu selection using eyegaze glances and fixations, Proceedings of the ACM Symposium on Eye Tracking Research and Applications - ETRA 2008, (New York: ACM, 2008), 47-50.
32. Wobbrock, J. O., Rubinstein, J., Sawyer, M. W., and Duchowski, A. T., Longitudinal evaluation of discrete consecutive gaze gestures for text entry, Proceedings of the ACM Symposium on Eye Tracking Research and Applications - ETRA 2008, (New York: ACM, 2008), 11-19.
33. Zhai, S., Morimoto, C., and Ihde, S., Manual gaze input cascaded (MAGIC) pointing, Proceedings of the ACM Conference on Human Factors in Computing Systems - CHI '99, (New York: ACM, 1999), 248-253.
34. Zhang, X. and MacKenzie, I. S., Evaluating eye tracking with ISO 9241 - Part 9, Proceedings of HCI International 2007, (Heidelberg: Springer, 2007), 779-788.
35. Zhang, X., Ren, X., and Zha, H., Improving eye cursor's stability for eye pointing tasks, Proceedings of the ACM Conference on Human Factors in Computing Systems - CHI 2008, (New York: ACM, 2008), 525-534.

-----

Footnotes

1. Portions of this example are based on work by Zhang and MacKenzie [34].

2. http://www.arringtonresearch.com/

3. http://www.eyetechds.com/