Sand, A., Remizova, V., MacKenzie, I. S., Spakov, O., Nieminen, K., Rakkolainen, I., Kylliäinen, A., Surakka, V., & Kuosmanen, J. (2020). Tactile feedback on mid-air gestural interaction with a large fogscreen. Proceedings of the 23rd International Conference on Academic Mindtrek - AcademicMindtrek '20, pp. 161-164. New York, ACM. doi:10.1145/3377290.3377316

Tactile Feedback on Mid-Air Gestural Interaction with a Large Fogscreen

Antti Sand1, Vera Remizova1, I. Scott MacKenzie2, Oleg Spakov1, Katariina Nieminen1, Ismo Rakkolainen1, Anneli Kylliäinen1, Veikko Surakka1, & Julia Kuosmanen1

1Tampere University, Tampere, Finland

2York University, Toronto, Ontario, Canada

Contact author: Antti Sand, antti.sand@tuni.fi

ABSTRACT

Projected walk-through fogscreens can be used to render user interfaces, but they lack inherent tactile feedback, which hinders the user experience. We address this by investigating wireless wearable vibrotactile feedback for mid-air hand gesture interaction with a large fogscreen. Participants (n = 20) selected objects on a fogscreen using tapping and dwell-based gestural techniques. The results showed that participants preferred tactile feedback. Further, while tapping was the most effective selection gesture, dwell-based target selection required haptic feedback to feel natural.

CCS CONCEPTS

• Human-centered computing → Pointing; • Hardware → Displays and imagers; Haptic devices.

KEYWORDS

Touchless user interface, mid-air hand gestures, fogscreen, haptic feedback

ACM Reference Format:

Antti Sand, Vera Remizova, I. Scott MacKenzie, Oleg Spakov, Katariina Nieminen, Ismo Rakkolainen, Anneli Kylliäinen, Veikko Surakka, and Julia Kuosmanen. 2020. Tactile Feedback on Mid-Air Gestural Interaction with a Large Fogscreen. In Academic Mindtrek 2020 (AcademicMindtrek '20), January 29-30, 2020, Tampere, Finland. ACM, New York, NY, USA, 4 pages. https://doi.org/10.1145/3377290.3377316

1 INTRODUCTION

Intangible projected mid-air displays employing flowing light-scattering particles open new possibilities for displaying information. An interactive fogscreen is one such walk-through, semi-transparent display onto which interactive objects are projected.

Fogscreens are permeable and visible, and they allow physical interaction. Combined with sensors that track user interaction, they can serve as touch screens and as interactive public displays. However, users perceive no tactile feedback when interacting with them.

We investigated the potential of wearable vibrotactile feedback for mid-air gestural interaction with a large fogscreen. We compared two gestures for physical interaction with the fog, tapping and dwell-based selection, each with and without tactile feedback. In fog tapping, the hand movement imitates a conventional physical tap on a vertical screen. In dwell-based selection, the finger dwells in the fog over an object for one second to select it. The key difference between the gestures is that dwell-based selection does not require the user to remove their hand from the fog between selections: the user may continuously point and select targets while the hand travels from one target to another in the fog. Fog tapping, in contrast, requires the hand to leave the fog to register a target selection.

Visual feedback alone may be insufficient for this type of interaction, because the intersecting finger causes slight turbulence in the fog, which can blur the projected graphics. Furthermore, previous studies have revealed the need for tactile feedback [5]. To understand the effect of tactile feedback on user preference and performance, we tested the two gestures with two feedback modalities (auditory + visual, and auditory + visual + haptic) when interacting with a large fogscreen. Haptic feedback was produced by a custom-built, lightweight, wireless vibrotactile device worn on the user's hand.

This work quantifies the subjective experience of haptic feedback and its effects on user performance. We carried out a user study to objectively measure and compare performance and to assess overall subjective user preference. Our findings can inform the design of haptic feedback for mid-air gestural interaction with large projected particle screens.

2 RELATED WORK

2.1 Particle displays

Images have been projected on water, smoke, or fog for more than 100 years [9]. Modern fogscreens are compact, thin particle-projection systems that produce image quality superior to previous methods [2]. The fogscreen we used consists of a non-turbulent fog flow inside a wider non-turbulent airflow [13]. Small movements of the fog are still possible, and they cause slight movement in the projected graphics. The screen is permeable, dry, and cool to the touch, so it is possible to physically reach or walk through the image. Fogscreens can be very engaging when the audience can directly interact and play with them.

2.2 Mid-air interaction techniques

The advent of depth-sensing methods that track the 2D or 3D position of the hand has enabled mid-air hand interaction with large screens. Mid-air hand gestures with large conventional projector displays have been widely researched over the last decade, and several hand selection gestures have been evaluated for their intuitiveness and effectiveness. These include push [4, 18], AirTap [4, 17], dwell [4, 16, 18, 19], and thumb trigger [4, 16, 17]. Point-and-dwell selection techniques are commonly used [19], intuitive [18], and easy to detect [4].

Palovuori and Rakkolainen [11] improved Microsoft Kinect tracking for the fogscreen and demonstrated the potential of fogscreens as an interaction medium. Martínez et al. [10] merged an interactive tabletop screen with a vertical fogscreen in their MisTable system. Sand et al. [15] experimented with a small fogscreen with ultrasonic mid-air tactile feedback and found that participants preferred tactile feedback when selecting objects on the fog. Similar conclusions about the preference for tactile feedback in gestural interaction have been reached with other touchless user interfaces [5], but large fogscreens have not been extensively studied.

3 EXPERIMENT SYSTEM DESIGN

3.1 Screen, projection and tracking

Our interaction solution uses a large fogscreen coupled with a depth-sensing Microsoft Kinect for Windows v2 sensor. The Kinect was placed behind the fogscreen and monitored the area in front of it. It tracked skeletal data of the user's hand, and our software analyzed the hand gestures.

We used an early prototype of the fogscreen [8, 14], which offers a 1.55 × 1.05 m interaction area. Images were projected with an Optoma ML750e mini LED projector, which produces 700 ANSI lumens at a contrast ratio of 15,000:1. The projector is placed on the opposite side of the fog, facing the user. In this configuration, the image is brighter than when projecting from the user's side, and the user cannot block the projector's light with their body.

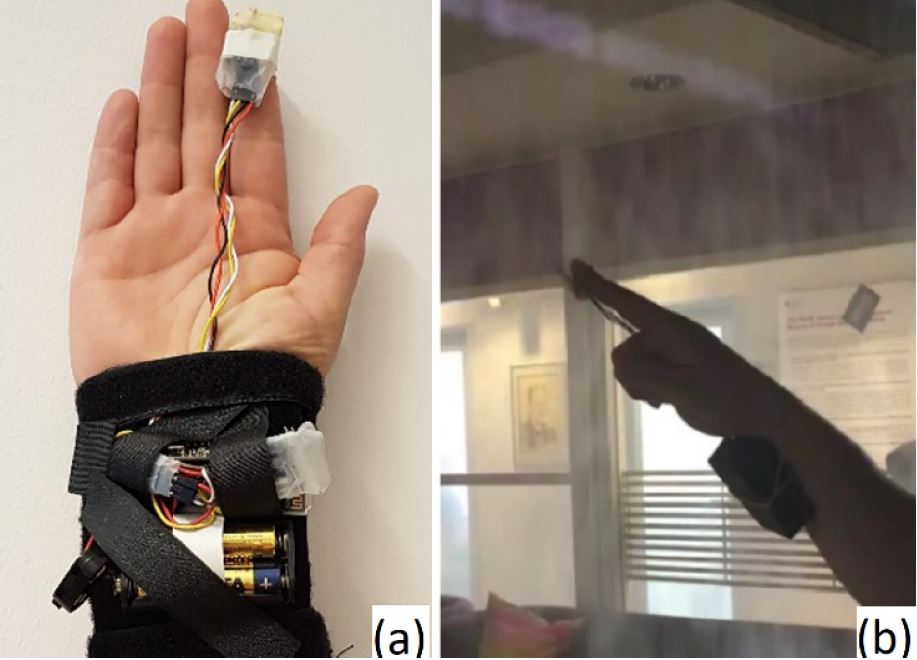

3.2 Haptic device

To provide the user with haptic feedback, we designed a lightweight, wireless, wearable vibrotactile actuation device. The design combines an Arduino Nano microcontroller board with an HC-05 serial Bluetooth radio and a Texas Instruments DRV2605L haptic motor controller driving an eccentric rotating mass (ERM) vibration motor. We chose Bluetooth over WiFi because extensive piloting revealed erratic WiFi signal delays due to interference. The device is powered by two AA batteries, regulated to 5 V through a Pololu S7V8A step-up/step-down voltage regulator.

Figure 1: (a) Haptic device, (b) device in the experiment.

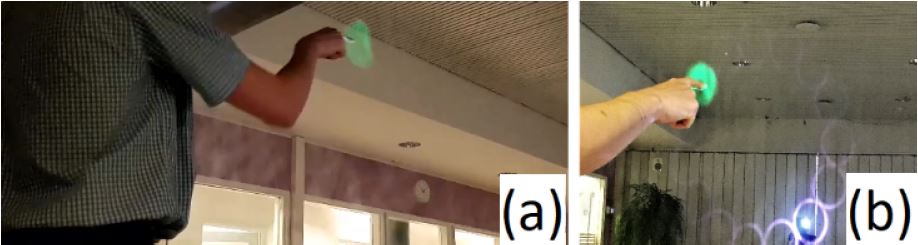

Figure 2: (a) User tapping to select a target, (b) user holding their finger over the target for dwell-based selection.

The bulk of the device is worn around the user's wrist, with the motor controller and the coin-cell ERM motor taped to the palmar side of the index finger of the user's dominant hand (see Figure 1). Earlier studies found that the placement of the actuator (on the finger or on the wrist) might not affect users' performance [5]. However, users prefer placement on the finger [5, 12]. It has also been found that user responses are faster when a vibrotactile stimulus is applied to the hand used for target selection [12].

3.3 Interaction and feedback

The targets for selection are blue circles on a black background, arranged in a circular layout, with only one target visible at a time. The black background effectively attenuates the bright spot of the projector, which might otherwise be uncomfortable for the user's eyes (see Figure 2). When a target is selected, it disappears and a new target is presented on the other side of the circle, with the sequence rotating clockwise.

A successful tapping selection requires the finger to exit the fog within the borders of the target's current position. The target then disappears, a clicking sound is played, and a new target appears. A distinct error sound is played if the finger leaves the fog incorrectly, informing the user that the tapping gesture was not executed correctly.
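The tapping rule above can be expressed as a small per-frame state machine. The sketch below is a minimal illustration under stated assumptions: the tracker is assumed to deliver a fingertip position on the screen plane plus a flag telling whether the fingertip is through the fog, the exit point is taken to be the last position tracked inside the fog, and the names `FingerSample`, `Target`, and `TapDetector` are hypothetical, not part of the study's software.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class FingerSample:
    x: float      # fingertip x on the screen plane, pixels (hypothetical tracker output)
    y: float      # fingertip y on the screen plane, pixels
    in_fog: bool  # True while the fingertip is through the fog plane


@dataclass
class Target:
    cx: float     # target centre, pixels
    cy: float
    width: float  # target diameter, pixels

    def contains(self, x: float, y: float) -> bool:
        return math.hypot(x - self.cx, y - self.cy) <= self.width / 2


class TapDetector:
    """Emits 'enter' when the finger enters the fog; when it exits, emits
    'select' if the exit point lies within the target, otherwise 'error'."""

    def __init__(self) -> None:
        self._was_in_fog = False
        self._last_in_fog: Optional[Tuple[float, float]] = None

    def update(self, s: FingerSample, target: Target) -> Optional[str]:
        event = None
        if s.in_fog and not self._was_in_fog:
            event = "enter"  # haptic condition: play the entry pattern here
        elif not s.in_fog and self._was_in_fog and self._last_in_fog is not None:
            # Assumption: the last position tracked inside the fog is the exit point.
            ex, ey = self._last_in_fog
            event = "select" if target.contains(ex, ey) else "error"
        if s.in_fog:
            self._last_in_fog = (s.x, s.y)
        self._was_in_fog = s.in_fog
        return event


# Usage: a finger enters the fog near the target centre and withdraws.
target = Target(cx=400, cy=300, width=70)
detector = TapDetector()
frames = [FingerSample(380, 310, False), FingerSample(395, 302, True),
          FingerSample(401, 299, True), FingerSample(401, 299, False)]
events = [detector.update(f, target) for f in frames]
# events == [None, "enter", None, "select"]
```

Deciding on exit rather than on entry mirrors the text: the selection is registered only when the finger leaves the fog.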

While a target is being acquired with the dwell-based gesture, a rotating green arc is rendered on the target to visualize the dwell progress. Once the dwell time is reached, the selection is registered and the target disappears; a click sound is played and a new target appears at a new location in the fog. If the finger leaves the target during the dwell, the dwell counter is reset. A small white cursor is shown to aid target selection.
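The dwell rule (a one-second dwell, with the counter reset whenever the finger leaves the target) can be sketched in the same per-frame style. This is an illustrative version under assumptions: `DwellSelector` is a hypothetical name, timestamps are assumed to be in seconds, and `progress()` is what a renderer might use to draw the green arc.

```python
from typing import Optional


class DwellSelector:
    """Registers a selection after the cursor has stayed on the target for
    dwell_s seconds; leaving the target resets the dwell counter."""

    def __init__(self, dwell_s: float = 1.0) -> None:  # 1 s dwell, from the text
        self.dwell_s = dwell_s
        self._start: Optional[float] = None  # time the cursor entered the target

    def progress(self, t: float) -> float:
        """Fraction of the dwell completed at time t (0..1), e.g. for the green arc."""
        if self._start is None:
            return 0.0
        return min((t - self._start) / self.dwell_s, 1.0)

    def update(self, t: float, inside: bool) -> Optional[str]:
        """Feed one tracking frame; returns 'enter', 'select', or None."""
        if not inside:
            self._start = None  # finger left the target: reset the counter
            return None
        if self._start is None:
            self._start = t
            return "enter"      # haptic condition: start the dwell vibration here
        if t - self._start >= self.dwell_s:
            self._start = None
            return "select"     # haptic condition: play the click pattern here
        return None
```

Because the timer restarts on every re-entry, a hand that drifts across the target border pays the full dwell time again, which is the behaviour the re-entry measure is meant to capture.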

In fog tapping with haptic feedback, the user feels an immediate, distinct vibrotactile pattern in the fingertip as the finger enters the fog. After the finger returns to its initial position, the user feels a clicking haptic pattern that confirms a successful target selection. No clicking pattern is provided for an erroneous fog tapping gesture.

In dwell-based selection, the haptic feedback begins as soon as the user's finger reaches the target. The user then feels a continuous vibration indicating the progress of the dwell interval. Upon selection, a haptic clicking pattern similar to the one used for tapping is presented.

To generate recognizable tactile cues, we drew on well-known parameters of tactile-cue design: frequency, duration, rhythm, waveform, and location [1, 3]. Vibrotactile stimulation patterns were empirically selected in the range of 150-300 Hz. This range has been shown [6, 7] to stimulate the Pacinian corpuscles in both glabrous and hairy skin, which results in good perception and recognition of haptic sensations. We empirically selected short pulses of 60 ms at 200 Hz to form a clicking sensation.
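The click-pulse parameters translate directly into a signal: 60 ms at 200 Hz is 200 × 0.06 = 12 cycles. The sketch below synthesizes an idealized unit-amplitude sine burst. The sine shape and the 8 kHz sample rate are assumptions for illustration only, since the text does not say how the pulse was encoded for the DRV2605L driver.

```python
import math
from typing import List


def tone_burst(freq_hz: float, duration_ms: float, sample_rate_hz: int = 8000) -> List[float]:
    """Idealized sinusoidal burst of the given frequency and duration (unit amplitude)."""
    n = round(sample_rate_hz * duration_ms / 1000)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate_hz) for i in range(n)]


CLICK_FREQ_HZ = 200  # from the text
CLICK_MS = 60        # from the text
click = tone_burst(CLICK_FREQ_HZ, CLICK_MS)        # 480 samples at the assumed 8 kHz
cycles = CLICK_FREQ_HZ * CLICK_MS / 1000           # 200 Hz x 0.06 s = 12 full cycles
```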

For the dwell-based gesture, we used a long stimulus of 1000 ms divided into four 250 ms waveforms; within each, the frequency rapidly ramped up from 0 Hz to 200 Hz and back down to 0 Hz, creating a rhythm in the stimulus. No participant reported the tactile feedback as too weak, but one participant said it felt too intense.
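One reading of the dwell stimulus is four consecutive 250 ms segments, each with a frequency envelope that rises from 0 to 200 Hz and falls back to 0. The sketch assumes a linear (triangular) ramp, which the text does not specify, and synthesizes the waveform by accumulating phase from the instantaneous frequency so that the signal stays continuous as the frequency changes. The 8 kHz sample rate is again an assumption.

```python
import math
from typing import List

SEGMENT_MS = 250.0  # four segments of 250 ms make up the 1000 ms stimulus
PEAK_HZ = 200.0     # from the text


def dwell_frequency(t_ms: float) -> float:
    """Instantaneous frequency at t_ms, assuming a linear 0 -> 200 -> 0 Hz ramp per segment."""
    pos = (t_ms % SEGMENT_MS) / SEGMENT_MS   # position within the segment, 0..1
    return PEAK_HZ * (1 - abs(2 * pos - 1))  # triangular envelope


def dwell_stimulus(total_ms: float = 1000.0, sample_rate_hz: int = 8000) -> List[float]:
    """Unit-amplitude waveform whose frequency follows dwell_frequency()."""
    n = round(sample_rate_hz * total_ms / 1000)
    samples: List[float] = []
    phase = 0.0
    for i in range(n):
        t_ms = 1000 * i / sample_rate_hz
        samples.append(math.sin(phase))
        # Integrate frequency to obtain phase, so the signal has no discontinuities.
        phase += 2 * math.pi * dwell_frequency(t_ms) / sample_rate_hz
    return samples
```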

We measured the movement time between targets and counted target re-entries. We hypothesized that users would move to the next target more quickly if they received haptic confirmation that the previous selection was complete. For dwell-based selection, we further hypothesized that haptic feedback during dwelling would reduce the number of target re-entries.
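The two measures can be computed per trial from logged data. In this minimal sketch, movement time is assumed to be the interval between the previous selection and the current one, and a re-entry is counted each time the cursor enters the target after its first entry. Both definitions, and the per-frame `inside` log, are assumptions rather than the study's documented procedure.

```python
from typing import List


def movement_time(prev_selection_s: float, selection_s: float) -> float:
    """Time from the previous selection to the current one (assumed definition)."""
    return selection_s - prev_selection_s


def target_reentries(inside: List[bool]) -> int:
    """Number of times the cursor entered the target again after its first entry.

    `inside` holds one flag per tracking frame: True if the cursor was over the target.
    """
    # Pair each frame with the previous one (False before the first frame)
    # and count False -> True transitions, i.e. entries into the target.
    entries = sum(1 for prev, cur in zip([False] + inside, inside) if cur and not prev)
    return max(entries - 1, 0)
```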

4 EXPERIMENT

The large, open experiment room had no direct sunlight. We dimmed the lighting to improve the visibility of the projected image while keeping the room bright enough for participants to read and answer the questionnaire comfortably.

Twenty healthy university students and staff members took part in the tests. Ages ranged from 20 to 66 years (mean = 35.3, SD = 12.9). Some participants had prior experience with mid-air gesturing or with projected particle screens (e.g., they had seen fogscreens in shopping malls or in research demonstrations), but none had first-hand experience interacting with a fogscreen.

4.1 Procedure

Upon arriving at the laboratory, participants read an information sheet, gave informed consent, and completed a background form. The first gestural technique was then explained and demonstrated, and participants watched a video of the selection method in question. They then completed enough practice trials to feel comfortable with the interaction.

Participants were instructed to make selections as quickly and accurately as possible. The four conditions (tapping and dwell-based selection, each with and without haptic feedback) were presented in counterbalanced order to offset learning effects.

After the experiment, participants completed a final rating scale and a free-form questionnaire about their interaction with the fogscreen. We asked them to rank the four conditions in order of preference from most (1) to least (4) preferred.

4.2 Design

The experiment used a within-subjects design. Each participant was presented with four conditions: two selection gestures, each with and without haptic feedback. Targets were divided into sets of 15, with each set having a different combination of target amplitude (the distance from the origin of the circular layout: 100, 350, or 600 pixels) and target width (40, 70, or 100 pixels). Each condition thus consisted of 9 sets × 15 = 135 target selections, or 540 selections per participant across the four conditions. After each selection, the target disappeared and a new target appeared on the opposite side of the circle, with the sequence rotating clockwise.
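The design arithmetic can be checked programmatically. Using the three amplitudes, three widths, and sets of 15 targets stated above, each condition contains 9 × 15 = 135 selections and each participant 4 × 135 = 540, which agrees with the 10,800 selections from 20 participants reported in the Results. The target-ordering function is an assumption: for an odd number of targets, stepping by (n + 1)/2 positions jumps to nearly the opposite side each time and visits every position while progressing clockwise. The positions treat amplitude as the layout radius, following the text's definition; `selection_order` and `target_positions` are hypothetical helper names.

```python
import itertools
import math
from typing import List, Tuple

AMPLITUDES_PX = (100, 350, 600)  # from the text
WIDTHS_PX = (40, 70, 100)        # from the text
TARGETS_PER_SET = 15             # from the text
PARTICIPANTS = 20                # from the text


def selection_order(n: int) -> List[int]:
    """Order in which n (odd) target positions are visited, each step landing
    roughly opposite the previous target (assumed ordering scheme)."""
    step = (n + 1) // 2
    return [(i * step) % n for i in range(n)]


def target_positions(amplitude: float, n: int = TARGETS_PER_SET) -> List[Tuple[float, float]]:
    """Centres of n targets on a circle of radius `amplitude`, numbered clockwise
    from the top in screen coordinates (y grows downward)."""
    pts = []
    for k in range(n):
        a = 2 * math.pi * k / n
        pts.append((amplitude * math.sin(a), -amplitude * math.cos(a)))
    return pts


conditions = list(itertools.product(("tapping", "dwell"), ("no haptics", "haptics")))
sets = list(itertools.product(AMPLITUDES_PX, WIDTHS_PX))  # 9 amplitude-width combinations
per_condition = len(sets) * TARGETS_PER_SET               # 9 x 15 = 135
per_participant = per_condition * len(conditions)         # 4 x 135 = 540
total = per_participant * PARTICIPANTS                    # 20 x 540 = 10,800
```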

A 2 (selection method) × 2 (feedback mode) within-subjects analysis of variance (ANOVA) was performed on the data pooled over all amplitudes and widths. Bonferroni-corrected t-tests were used for pairwise post hoc comparisons.
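For a 2 × 2 fully within-subjects design, each effect has one numerator degree of freedom, and its F(1, n − 1) equals the squared one-sample t statistic of a per-participant contrast score. The sketch below uses that identity with the standard library only. The cell labels are hypothetical, and a p-value would additionally need the F distribution (for example SciPy's `scipy.stats.f.sf`). It illustrates the technique and is not the authors' analysis script.

```python
import math
from statistics import mean, stdev
from typing import Dict, List, Sequence, Tuple

Cell = Tuple[str, str]  # (selection method, feedback mode)


def contrast_f(scores: Sequence[float]) -> float:
    """F(1, n-1) for a one-df within-subjects contrast: the squared one-sample t."""
    n = len(scores)
    t = mean(scores) / (stdev(scores) / math.sqrt(n))
    return t * t


def rm_anova_2x2(data: List[Dict[Cell, float]],
                 a: Tuple[str, str] = ("tapping", "dwell"),
                 b: Tuple[str, str] = ("off", "on")) -> Dict[str, float]:
    """F values for both main effects and the interaction; one dict of cell means per participant."""
    a1, a2 = a
    b1, b2 = b
    # Per-participant contrast scores (their scaling does not affect t).
    main_a = [(d[(a1, b1)] + d[(a1, b2)] - d[(a2, b1)] - d[(a2, b2)]) / 2 for d in data]
    main_b = [(d[(a1, b1)] + d[(a2, b1)] - d[(a1, b2)] - d[(a2, b2)]) / 2 for d in data]
    inter = [d[(a1, b1)] - d[(a1, b2)] - d[(a2, b1)] + d[(a2, b2)] for d in data]
    return {"method": contrast_f(main_a),
            "feedback": contrast_f(main_b),
            "interaction": contrast_f(inter)}
```

With 20 participants, each F has (1, 19) degrees of freedom, matching the F(1, 19) values reported in the Results.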

We used a Friedman test to assess the statistical significance of differences in the subjective ratings. Pairwise comparisons used Wilcoxon signed-rank tests with Bonferroni correction: with six pairwise comparisons among the four conditions, the significance level was set at p < .05/6 ≈ .008.
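The Friedman statistic and the Bonferroni threshold can be computed directly from the rankings. For n participants each ranking k conditions, χ² = 12/(nk(k + 1)) ΣR_j² − 3n(k + 1), where R_j is the rank sum of condition j, with k − 1 degrees of freedom (3 here, matching the χ²(3) reported in the Results). Six pairwise comparisons among four conditions give .05/6 ≈ .0083. This sketch assumes untied rankings, as a forced ranking produces; it is not the study's analysis code.

```python
from typing import List, Sequence


def friedman_chi2(ranks: List[Sequence[float]]) -> float:
    """Friedman chi-square for n participants, each ranking k conditions 1..k without ties."""
    n, k = len(ranks), len(ranks[0])
    rank_sums = [sum(r[j] for r in ranks) for j in range(k)]
    return 12 / (n * k * (k + 1)) * sum(rs * rs for rs in rank_sums) - 3 * n * (k + 1)


def bonferroni_alpha(alpha: float, k: int) -> float:
    """Per-comparison alpha when all k*(k-1)/2 pairs of k conditions are compared."""
    m = k * (k - 1) // 2
    return alpha / m
```

Perfect agreement among participants gives the maximum value n(k − 1), and fully opposed rankings cancel to 0.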

5 RESULTS

The analyses revealed some anomalous behaviours that resulted in outliers. In fog tapping, selections whose selection coordinate lay more than three times the target radius from the target centre were removed (96 selections). In dwell-based interaction, selections that took more than 10 seconds were removed (48 selections). In total, 96 + 48 = 144 outlier selections were removed, or 1.3% of the original 10,800 selections. All analyses below exclude the outliers.
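The two outlier rules can be written as a simple filter. This sketch assumes that each logged selection records its offset from the target centre, the target width (so the radius is width/2), and its duration; the `Selection` record and helper names are hypothetical. The last line reproduces the proportion reported above.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Selection:
    method: str        # "tapping" or "dwell"
    dx: float          # selection x minus target-centre x, pixels
    dy: float          # selection y minus target-centre y, pixels
    width: float       # target width (diameter), pixels
    duration_s: float  # time taken for the selection, seconds


def is_outlier(s: Selection) -> bool:
    if s.method == "tapping":
        # Rule 1: selection point farther than 3x the target radius from the centre.
        return math.hypot(s.dx, s.dy) > 3 * (s.width / 2)
    # Rule 2: dwell-based selection that took more than 10 s.
    return s.duration_s > 10.0


def remove_outliers(selections: List[Selection]) -> List[Selection]:
    return [s for s in selections if not is_outlier(s)]


share = (96 + 48) / 10_800  # 144 of 10,800 selections, about 1.3 %
```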

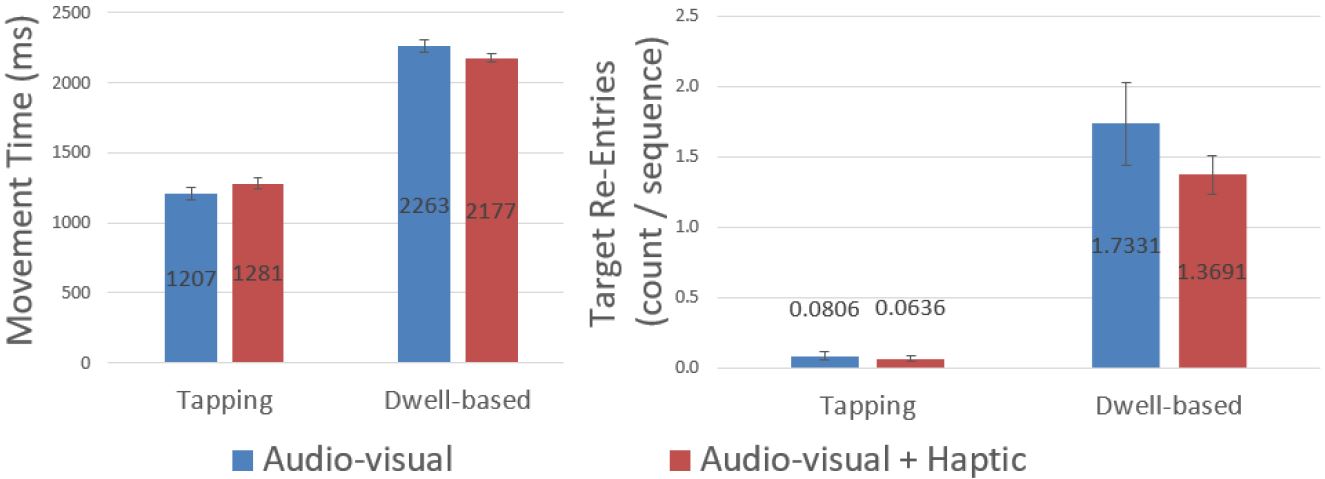

Adding haptic feedback affected movement time only slightly: in fog tapping it increased the movement time, whereas in dwell-based selection it reduced it. These differences were not statistically significant (F(1, 19) = 0.3, p > .05).

Similarly, adding haptic feedback reduced target re-entries, most notably in dwell-based selection, but these differences were not statistically significant (F(1, 19) = 2.18, p > .05). These effects are illustrated in Figure 3.

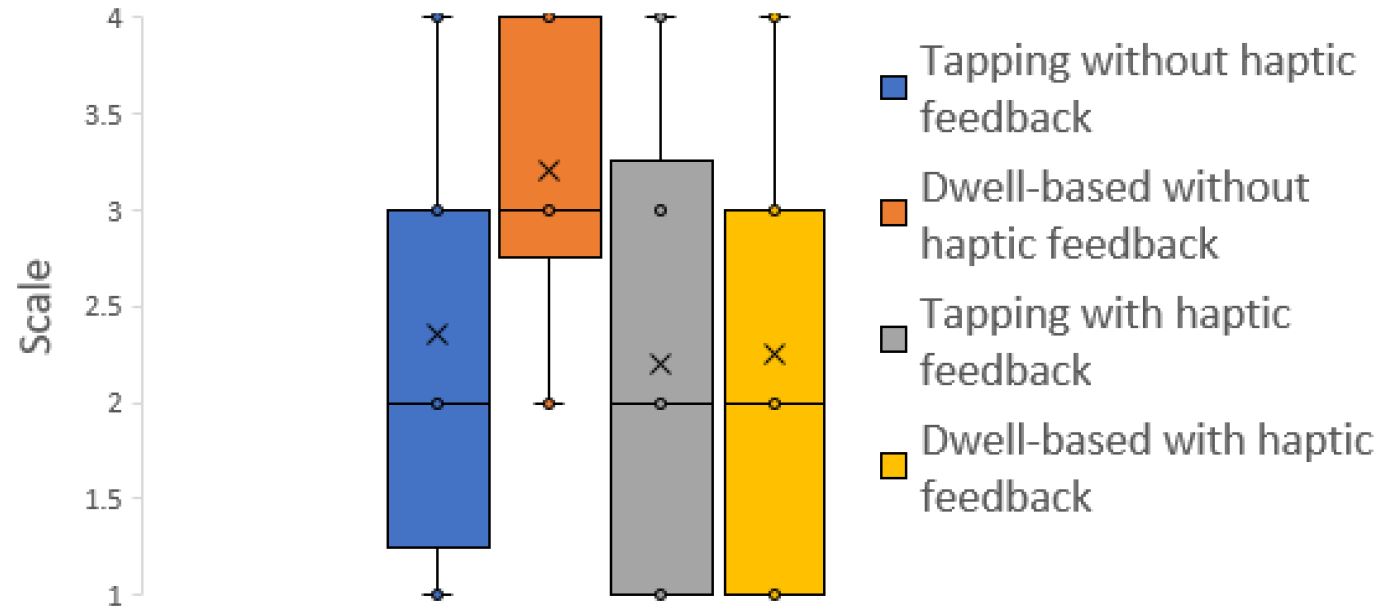

After testing, participants ranked their preferences among the four conditions (2 selection methods × 2 feedback modes). The mean rankings are shown in Figure 4; a lower score is better. A Friedman test showed a statistically significant effect of condition on participants' preference, χ²(3) = 7.980, p = .046.

Figure 3: Mean values by selection method and feedback mode. Error bars show standard errors of the mean (SEM).

Figure 4: Mean of participants' preference ratings by selection method. A lower score is better.

Post hoc pairwise comparisons with Wilcoxon signed-rank tests showed that dwell-based selection with haptic feedback was significantly preferred over dwell-based selection without haptic feedback, Z = -2.879, p = .004.

6 DISCUSSION

Participants ranked tapping without haptic feedback as the most preferred interaction method, and dwell-based selection with haptic feedback as the second most preferred. Apart from the difference between the two dwell-based conditions, no method was rated significantly worse than the others. The overall preference for tapping is likely explained by the perceived delay of dwell-based gestures, which required participants to hold their hand still for 1 second. As found in previous research [4, 19], dwell-based gestures are tiring but practical when other gestures are poorly recognized.

The inclusion of haptic feedback did not affect the participants' measured performance, but in the subjective ratings participants somewhat preferred it. In the experiment, the haptic feedback was reactive: it gave affirmation to the user in much the same way as the visual and auditory feedback did. This redundancy may explain why haptic feedback did not improve measured performance. Nevertheless, these results suggest that haptic feedback has a use in gestural interaction with large fogscreens. Further studies will be conducted to measure objective gains.

7 CONCLUSIONS

We studied the effects of vibrotactile feedback in gestural interaction with a large projected particle screen, comparing fog tapping and dwell-based selection, both with and without vibrotactile feedback. Vibrotactile feedback did not significantly affect movement time or target re-entries. In the subjective ratings, however, it yielded a statistically significant difference when used with dwell-based gestures, but not with tapping. Tapping without haptic feedback was the most preferred interaction, but dwell-based gestures with haptic feedback were preferred over dwell-based gestures without haptic feedback.

ACKNOWLEDGMENTS

This work was supported by the Academy of Finland [grant number: 326430].

REFERENCES

[1] Salvatore Aglioti and Francesco Tomaiuolo. 2000. Spatial stimulus-response compatibility and coding of tactile motor events: Influence of distance between stimulated and responding body parts, spatial complexity of the task and sex of subject. Perceptual and Motor Skills 91 (Sept. 2000), 3-14.

[2] Barry G. Blundell. 2011. 3D Displays and Spatial Interaction: From Perception to Technology Volume I Exploring the Science, Art, Evolution and Use of 3D Technologies. Walker Wood Limited, Auckland, NZL.

[3] Stephen Brewster and Lorna M. Brown. 2004. Tactons: Structured Tactile Messages for Non-visual Information Display. In Proc. of the Fifth Conference on Australasian User Interface - Volume 28 (AUIC '04). Australian Computer Society, Inc., Darlinghurst, Australia, 15-23.

[4] Florian van de Camp, Alexander Schick, and Rainer Stiefelhagen. 2013. How to Click in Mid-Air. In Proc. of the First International Conference on Distributed, Ambient, and Pervasive Interactions - Volume 8028. Springer-Verlag, Berlin, Heidelberg, 78-86.

[5] Euan Freeman, Stephen Brewster, and Vuokko Lantz. 2014. Tactile Feedback for Above-Device Gesture Interfaces: Adding Touch to Touchless Interactions. In Proc. of the 16th International Conference on Multimodal Interaction (ICMI '14). ACM, New York, NY, USA, 419-426.

[6] E. Bruce Goldstein. 2009. Sensation and Perception. Cengage Learning.

[7] Lynette A. Jones and Nadine B. Sarter. 2008. Tactile displays: Guidance for their design and application. Human Factors 50, 1 (2008), 90-111.

[8] Satu Jumisko-Pyykkö, Mandy Weitzel, and Ismo Rakkolainen. 2009. Biting, Whirling, Crawling - Children's Embodied Interaction with Walk-through Displays. In Human-Computer Interaction - INTERACT 2009. Springer Berlin Heidelberg, Berlin, Heidelberg, 123-136.

[9] Paul C. Just. 1899. Ornamental fountain. U.S. Patent No. 620592.

[10] Diego Martínez, Sriram Subramanian, and Edward Joyce. 2014. MisTable: Reach-through Personal Screens for Tabletops. Conference on Human Factors in Computing Systems - Proceedings.

[11] Karri Palovuori and Ismo Rakkolainen. 2018. Improved interaction for mid-air projection screen technology. In Virtual and Augmented Reality. IGI Global.

[12] Alexandros Pino, E. Tzemis, Nikolaos Ioannou, and Georgios Kouroupetroglou. 2013. Using Kinect for 2D and 3D Pointing Tasks: Performance Evaluation. Lecture Notes in Computer Science 8007 (07 2013), 358-367.

[13] Ismo Rakkolainen and Karri Palovuori. 2002. Walk-thru screen. In Projection Displays VIII, Ming Hsien Wu (Ed.), Vol. 4657. Int. Soc. for Optics and Photonics, SPIE, 17-22.

[14] Ismo Rakkolainen, Antti Sand, and Karri T. Palovuori. 2015. Midair User Interfaces Employing Particle Screens. IEEE Computer Graphics and Applications 35, 2 (2015), 96-102.

[15] Antti Sand, Ismo Rakkolainen, Poika Isokoski, Roope Raisamo, and Karri Palovuori. 2015. Light-weight immaterial particle displays with mid-air tactile feedback. In 2015 IEEE International Symposium on Haptic, Audio and Visual Environments and Games (HAVE). 1-5.

[16] Matthias Schwaller and Denis Lalanne. 2013. Pointing in the Air: Measuring the Effect of Hand Selection Strategies on Performance and Effort. In Human Factors in Computing and Informatics. Springer Berlin Heidelberg, Berlin, Heidelberg, 732-747.

[17] Daniel Vogel and Ravin Balakrishnan. 2005. Distant Freehand Pointing and Clicking on Very Large, High Resolution Displays. In Proc. of the 18th Annual ACM Symposium on User Interface Software and Technology (UIST '05). ACM, New York, NY, USA, 33-42.

[18] Robert Walter, Gilles Bailly, Nina Valkanova, and Jörg Müller. 2014. Cuenesics: Using Mid-air Gestures to Select Items on Interactive Public Displays. In Proc. of the 16th International Conference on Human-computer Interaction with Mobile Devices & Services (MobileHCI '14). ACM, New York, NY, USA, 299-308.

[19] Soojeong Yoo, Callum Parker, Judy Kay, and Martin Tomitsch. 2015. To Dwell or Not to Dwell: An Evaluation of Mid-Air Gestures for Large Information Displays. In Proc. of the Annual Meeting of the Australian Special Interest Group for Computer Human Interaction (OzCHI '15). ACM, New York, NY, USA, 187-191.