
NATS 1700 6.0 COMPUTERS, INFORMATION AND SOCIETY

Lecture 8: From Napier to Babbage


Introduction

- With the advent of the Renaissance and the reawakening of curiosity it brought, the history of computing becomes punctuated by an increasing number of inventions and applications. Here is a brief timeline of the major historical, scientific and technological dates that take us to the end of the nineteenth century and give us a context within which we can better understand our specific narrative.

- Giovanni de Dondi's all-mechanical clock, with sun and planet gears (ca 1364)

- Brunelleschi and the discovery of perspective (ca 1420)

- Johannes Gensfleisch zum Gutenberg: the first printed Bible (ca 1456)

- Johann Froben publishes the first book with pages numbered with Arabic numerals (1516)

- Galileo Galilei: 1564-1642

- The invention of the compound microscope (ca 1590), later used by Leeuwenhoek to discover micro-organisms (1670s)

- Lippershey and the invention of the telescope (ca 1608)

- John Napier publishes Mirifici Logarithmorum Canonis Descriptio (1614)

- William Oughtred invents the slide rule, an analog calculator based on logarithms (1622)

- Pascal's calculating machine (ca 1641)

- Huygens builds the first pendulum-driven clock, accurate to a few seconds per day (1656)

- Newton's (1642-1727) discovery of a method of interpolation for computing the area under a circle (1670)

- Leibniz's calculating machine (ca 1671)

- Linnaeus (1707-1778) and modern systematic botany

- Newcomen's steam engine (1712)

- Adam Smith (1723-1790): economic theory

- Bouchon's invention of a mechanical system for storing weaving patterns on perforated paper (1725)

- The Industrial Revolution: second half of the 18th century

- Diderot publishes the Encyclopédie (1751-1772)

- David Ricardo (1772-1823): economic theory

- The French Revolution (1789)

- Claude Chappe introduces the first practical system of visual telegraphy, the semaphore (1792)

- William Farish introduces the numerical grading of students' papers (1792)

- Jacquard invents perforated cards to control weaving patterns in looms (1805)

- Samuel Thomas von Sömmerring invents an electrochemical telegraph (1809)

- Laënnec invents the stethoscope (1816)

- Babbage builds a small working model of his Difference Engine (1822). In 1833 he starts working on the Analytical Engine

- Samuel Morse introduces the modern telegraph (1835)

- Associated Press starts the first telecommunications network (ca 1840)

- George Boole publishes The Mathematical Analysis of Logic (1847)

- A telegraph cable first spans the Atlantic Ocean (1858)

- Darwin and Wallace publish simultaneous papers on evolution by natural selection (1858)

- A G Bell is granted a patent for the telephone (1876)

- G Marconi transmits the first radio signal (1895)

- This is a long period, rich in discoveries and inventions. I will limit myself to emphasizing just a few of the major milestones in the history of computing. You should visit Erez Kaplan's website dedicated to Calculating Machines, where you can also operate (via a Java applet) an 1885 Felt adding machine. Various devices were invented by Wilhelm Schickard, Blaise Pascal, Gottfried Wilhelm von Leibniz, Charles, the third Earl Stanhope, Philipp Matthäus Hahn, J H Mueller, Charles Xavier Thomas de Colmar, Charles Babbage, and others. Refer to the useful Chronology of Digital Computing Machines (to 1952). This source will remain a valuable reference for the next several lectures.

Finally, Martin Campbell-Kelly has written a nice history of the (modern) computer, starting with Babbage, which appeared in the September 2009 issue of Scientific American. Go to the Library.

- You may also want to take a look at illustrations depicting some of the more famous devices invented in this period: Pascal's Machine, Leibniz' Machine, and Babbage's Difference Engine.

Topics

- Galileo Galilei was the first to draw some fundamental conclusions from an observation that almost everybody makes: a chandelier suspended from a ceiling swings back and forth when there is a draft. What Galileo did, and nobody we know of had done before, was to use his pulse to time each oscillation. He found that, for a given chandelier, this time did not (at least for modest swings) depend on the amplitude of the oscillation. This observation became the basis for the development of precise clocks, culminating in 1656 with Huygens's pendulum clock, which was accurate to a few seconds per day. The reason for mentioning this is that, as we shall see, precise clocks are essential for (modern) computers to function. The essence of any clockwork is the conversion of stored energy into regular movements at equal intervals. We would now call this an example of analog-to-digital conversion.

- In 1614 John Napier invented logarithms. Shortly thereafter he introduced what became known as Napier's Bones, a mechanical device for multiplying, dividing, and taking square and cube roots. Logarithms are one of the great computational breakthroughs of all time. In arithmetic the easy operations are addition and subtraction. Multiplication and division, not to mention square and cube roots, are much more difficult and prone to error. Yet, especially in astronomy, these laborious operations were the bread and butter of many calculations, such as the prediction of eclipses. Napier found a practical way to reduce all multiplications, divisions, and root extractions to additions and subtractions. In modern notation, here is how this works.

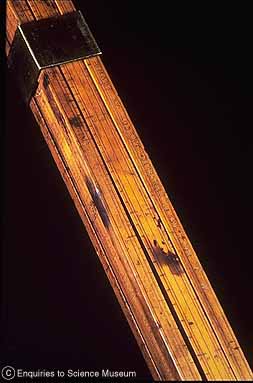

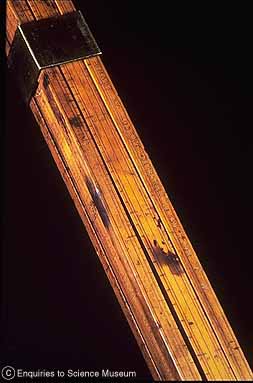

Slide Rule by Robert Bissaker (1654)

- Take a fixed number, say 10, and ask yourself: to which power do I need to raise 10 to get some other number, say 100 or 1000? This is not difficult ($10^2 = 100$, $10^3 = 1000$), because 100 and 1000 are in fact 'powers' of 10. But what about 235.7? Well, argued Napier, we could write $10^x = 235.7$, where $x$ must lie between 2 and 3, since 235.7 lies between 100 and 1000. He was able to prove this, but he had to do a great deal of traditional manual calculation to get the actual value of $x$ (2.372359582). He repeated these calculations for a large sample of numbers and published the results. These calculated numbers are the logarithms: 2.372359582 is the logarithm (base 10) of 235.7, just as 2 is the logarithm (base 10) of 100, 3 is the logarithm (base 10) of 1000, and so on. Now, how can we turn, say, multiplications into additions? I will not burden you with the (simple) proof, but will only state the results: the logarithm of a product is the sum of the logarithms of the factors. If we denote by $\log_{10} x$ the logarithm (base 10) of $x$, we have $\log_{10}(xy) = \log_{10} x + \log_{10} y$. Similarly, $\log_{10}(x/y) = \log_{10} x - \log_{10} y$, and $\log_{10}(x^y) = y \log_{10} x$. The last rule is actually a multiplication, but it too represents substantial labour savings, since it turns raising to a power, or extracting a root, into a single multiplication or division. For example, to multiply 100 by 1000 we add their logarithms, $2 + 3 = 5$, and $10^5 = 100\,000$. If you think about it, this discovery/invention (opinions differ on which term is appropriate in mathematics) makes it much easier not only to do manual calculations, but also to build devices that perform calculations. Since the time of Napier, mathematicians have contributed steadily to computing, and computational mathematics now occupies a distinguished position in both computer science and mathematics.

- One of the first inventions to result from the introduction of logarithms was the slide rule, invented by William Oughtred in 1622. It is interesting to note that, despite this early date, slide rules began to be used in earnest by engineers, accountants, and scientists only towards the end of the nineteenth century. They remained the tool of choice for such people until electronic pocket calculators and, later, personal computers displaced them. For instance, the Panama Canal was designed with the slide rule. An interesting place to start is the Slide Rules section of the Museum of HP Calculators. Visit also the International Slide Rule Museum.

- The next milestone I wish to speak about is represented by Newton (1642-1727), and in particular his invention/discovery of calculus. Newton must actually share this honour with Gottfried Wilhelm Leibniz (1646-1716), who arrived at it independently at about the same time. Newton's original problem was that of calculating the area enclosed by a geometrical figure such as an ellipse. One thing led to another, and by the time he had solved his problem, he had a complex, powerful mathematical tool that did for geometry and algebra what logarithms had done for arithmetic. Babbage's difference engine, and later his analytical engine (see below), put this kind of mathematics into machinery. The difference engine, for instance, tabulated polynomial functions by the method of finite differences, using nothing but repeated additions.

- The next episode I want to mention is Charles Babbage and his calculating engines. A very good biography of Babbage is available from the Department of Computer Science at Virginia Tech, which hosts one of the best collections of materials relating to the history of computing.

- The importance of Babbage stems from his analytical engine, which is in fact the first modern computer. The philosophical and social basis of Babbage's work was the division of human labour and its corollary, automation. The engine was designed as a purely mechanical device, yet its design contained all the essential components of the computer as we know it today. See also Lecture 10. "The machine was to have four parts: a store, made of columns of wheels, in which up to a thousand 50-digit numbers could be placed; the mill, where arithmetic operations would be performed by gear rotations; a transfer system composed of gears and levers; and a mechanism for number input and output. For the latter, he planned to adapt Jacquard's punched cards. Babbage also anticipated many other of the most important features of today's computers: automatic operation, external data memories, conditional operations and programming." (Ian McNeil, ed., An Encyclopaedia of the History of Technology, London, Routledge, 1990, 1996, p. 700). The analytical engine was never built, for various reasons, including the crucial one that the manufacture of many of its parts required a precision not attainable by the machining technology of the time. Babbage's later design for a Difference Engine No. 2 and its printer, however, have now been built at the Science Museum in London, and they work as Babbage said they would. See the story Babbage Printer Finally Runs on the BBC website.

- As mentioned above, Babbage's analytical engine could be programmed, and it was (at least on paper). The story becomes a bit fuzzy here, but tradition has it that Babbage wrote a few dozen programs for the machine. Lady Augusta Ada Byron, Countess of Lovelace and daughter of Lord Byron, became a steady collaborator of Babbage, and is said to have written the very first program for any computer. Here is a short biography of Ada Lovelace, in whose honor Ada, "The Language for a Complex World," was named many years later.
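Galileo's finding that the period of a swinging chandelier does not depend on its amplitude holds only approximately, and it is easy to check numerically how good the approximation is. The following is a minimal sketch. It assumes an idealized pendulum: a point mass on a weightless rod, no friction, 1 m long, with g = 9.81 m/s². The function name `pendulum_period` and its parameters are hypothetical. The function integrates the pendulum's equation of motion with a fixed-step fourth-order Runge-Kutta scheme. It then times the quarter swing from release to the vertical and multiplies by four.

```python
import math

def pendulum_period(amplitude_deg, length_m=1.0, g=9.81, dt=1e-4):
    """Estimate the period (seconds) of an idealized pendulum released from
    rest at `amplitude_deg` degrees, by integrating
        theta'' = -(g / L) * sin(theta)
    with fixed-step fourth-order Runge-Kutta (RK4)."""
    if not 0 < amplitude_deg < 180:
        raise ValueError("amplitude must be strictly between 0 and 180 degrees")
    k = g / length_m

    def deriv(theta, omega):
        # Returns (d theta / dt, d omega / dt).
        return omega, -k * math.sin(theta)

    theta, omega, t = math.radians(amplitude_deg), 0.0, 0.0
    while True:
        k1t, k1o = deriv(theta, omega)
        k2t, k2o = deriv(theta + 0.5 * dt * k1t, omega + 0.5 * dt * k1o)
        k3t, k3o = deriv(theta + 0.5 * dt * k2t, omega + 0.5 * dt * k2o)
        k4t, k4o = deriv(theta + dt * k3t, omega + dt * k3o)
        new_theta = theta + dt / 6 * (k1t + 2 * k2t + 2 * k3t + k4t)
        new_omega = omega + dt / 6 * (k1o + 2 * k2o + 2 * k3o + k4o)
        if new_theta <= 0.0:
            # The bob reaches the vertical after a quarter of a period;
            # interpolate linearly to locate the crossing inside this step.
            quarter = t + dt * theta / (theta - new_theta)
            return 4 * quarter
        theta, omega, t = new_theta, new_omega, t + dt


if __name__ == "__main__":
    small_angle = 2 * math.pi * math.sqrt(1.0 / 9.81)  # textbook 2*pi*sqrt(L/g)
    for amp in (5, 10, 30, 60):
        print(f"{amp:>2} degrees: {pendulum_period(amp) / small_angle:.4f} x small-angle period")
```

Compare the result with the small-angle value $2\pi\sqrt{L/g}$. Swings of 5 or 10 degrees differ from it by well under 1%, while a 60-degree swing takes about 7% longer. The period is nearly, though not exactly, independent of the amplitude, which is why pendulum clocks keep their swings small.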
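The logarithm rules from the Napier discussion can be tried out directly. The following is a minimal sketch. It assumes Python's `math.log10` stands in for a printed table of base-10 logarithms. The function names and the optional `table_digits` argument are hypothetical. That argument rounds each logarithm to imitate a table of limited precision. Each operation becomes an addition, a subtraction, or a division of logarithms, followed by a single antilogarithm (raising 10 to the result).

```python
import math

def multiply_with_logs(x, y, table_digits=None):
    """Multiply two positive numbers as a user of a log table would:
    look up both logarithms, add them, and take the antilogarithm.
    If `table_digits` is given, each logarithm is rounded to that many
    decimal places, imitating a printed table of limited precision."""
    lx, ly = math.log10(x), math.log10(y)
    if table_digits is not None:
        lx, ly = round(lx, table_digits), round(ly, table_digits)
    return 10 ** (lx + ly)  # log10(xy) = log10(x) + log10(y)

def divide_with_logs(x, y):
    """Divide x by y: log10(x / y) = log10(x) - log10(y)."""
    return 10 ** (math.log10(x) - math.log10(y))

def root_with_logs(x, n):
    """n-th root of x: log10(x ** (1/n)) = log10(x) / n."""
    return 10 ** (math.log10(x) / n)


if __name__ == "__main__":
    print(math.log10(235.7))                                # about 2.372359582, as in the text
    print(multiply_with_logs(235.7, 18.4))                  # the exact product is 4336.88
    print(multiply_with_logs(235.7, 18.4, table_digits=4))  # four-figure table: close, not exact
    print(root_with_logs(2, 2))                             # square root of 2
```

The rounded variant shows the practical trade-off of a printed table: the work drops to one addition, at the cost of a small error set by the table's precision.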
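Napier's Bones, mentioned at the start of the Napier topic, work differently from logarithms: they are a multiplication table cut into rods. Each rod carries the multiples of one digit. Tens and units are written in the two halves of a diagonally divided square. Place the rods for a number side by side, read across one row, and add along the diagonals: the result is that number times a single digit. The following is a minimal sketch of multiplication only, although the Bones also served for division and roots. All function names are hypothetical.

```python
def bone(d):
    """The rod for digit d: entry m-1 holds (tens, units) of d * m,
    the two halves of the diagonally divided square in row m."""
    return [divmod(d * m, 10) for m in range(1, 10)]

def multiply_by_digit(number, m):
    """Multiply a non-negative integer by a single digit m (1-9) by laying
    the rods for its digits side by side and reading row m."""
    if not 1 <= m <= 9:
        raise ValueError("m must be a single digit from 1 to 9")
    row = [bone(int(c))[m - 1] for c in str(number)]  # (tens, units), left to right
    result_digits = []  # least significant digit first
    carry = 0
    # Each diagonal combines the units of one rod with the tens of the rod
    # to its right; the rightmost diagonal holds units only.
    for i in range(len(row) - 1, -1, -1):
        tens_from_right = row[i + 1][0] if i + 1 < len(row) else 0
        total = row[i][1] + tens_from_right + carry
        result_digits.append(total % 10)
        carry = total // 10
    # The leftmost diagonal holds only the tens of the first rod (plus any carry).
    result_digits.append(row[0][0] + carry)
    return int("".join(str(d) for d in reversed(result_digits)))

def multiply_with_bones(a, b):
    """Multi-digit multiplication: one partial product per digit of b,
    each read off the rods, shifted into place, and added by hand."""
    total = 0
    for place, ch in enumerate(reversed(str(b))):
        if ch != "0":
            total += multiply_by_digit(a, int(ch)) * 10 ** place
    return total


if __name__ == "__main__":
    print(multiply_by_digit(425, 6))       # 2550
    print(multiply_with_bones(4257, 389))  # same as 4257 * 389
```

The rods remove the need to remember the multiplication table. The user still performs the diagonal additions and the final sum of partial products.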
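The method of finite differences, which gave Babbage's difference engine its name, shows why such a machine needs only an adding mechanism. For a polynomial of degree n, the n-th differences between successive values are constant. Once the first value and its differences are set, every further table entry follows by repeated additions alone. The following is a minimal sketch of the arithmetic, not a model of Babbage's mechanism. The function names and the example polynomial are hypothetical.

```python
def initial_differences(first_values):
    """From the first n+1 values of a degree-n polynomial, compute the
    starting settings: f(0) and its 1st, 2nd, ..., n-th differences.
    (This setup is done once by hand; the engine itself only adds.)"""
    settings, row = [], list(first_values)
    while row:
        settings.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]
    return settings

def tabulate(settings, count):
    """Produce `count` successive table entries using only addition.
    settings[0] is the current value and settings[i] its i-th difference;
    the last (n-th) difference of a degree-n polynomial never changes."""
    regs = list(settings)
    table = []
    for _ in range(count):
        table.append(regs[0])
        # Add each difference into the register above it, top to bottom,
        # so every addition uses the not-yet-updated value below it.
        for i in range(len(regs) - 1):
            regs[i] += regs[i + 1]
    return table


if __name__ == "__main__":
    def f(x):  # an arbitrary example polynomial
        return x * x + x + 41
    settings = initial_differences([f(x) for x in range(3)])
    print(settings)               # [41, 2, 2]
    print(tabulate(settings, 8))  # the same values as [f(x) for x in range(8)]
```

In the engine, each register corresponds to a column of figure wheels, and one cycle of the machine performs the inner loop's additions.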
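The architecture in the McNeil quotation can be made concrete with a toy interpreter. It has a store of numbered columns, a mill that performs the arithmetic, and cards that supply the sequence of operations, including conditional ones. This is a hypothetical sketch for illustration only. The `Card` layout, the operation names and the `back_if_nonzero` instruction are inventions, not Babbage's actual card format or instruction set. They only loosely mimic the "conditional operations and programming" the quotation mentions.

```python
from dataclasses import dataclass

@dataclass
class Card:
    """A hypothetical operation card (not Babbage's real card format)."""
    op: str        # '+', '-', '*', or 'back_if_nonzero'
    a: int         # first operand column, or the column tested by a jump
    b: int = 0     # second operand column
    dest: int = 0  # column that receives the mill's result
    back: int = 0  # for 'back_if_nonzero': how many cards to move back

# The mill: the only place where arithmetic happens.
MILL = {
    "+": lambda x, y: x + y,
    "-": lambda x, y: x - y,
    "*": lambda x, y: x * y,
}

def run(cards, store, max_steps=10_000):
    """Feed the cards through the machine. The store is a list of numbered
    columns; values travel from the store to the mill and back."""
    pc = 0  # index of the card currently being read
    for _ in range(max_steps):
        if pc >= len(cards):
            return store
        card = cards[pc]
        if card.op == "back_if_nonzero":
            # Conditional operation: back up the chain of cards if the
            # tested column is not zero, otherwise go on to the next card.
            pc = pc - card.back if store[card.a] != 0 else pc + 1
        else:
            store[card.dest] = MILL[card.op](store[card.a], store[card.b])
            pc += 1
    raise RuntimeError("program did not halt within max_steps")


if __name__ == "__main__":
    # Hypothetical program computing n! for n >= 1.
    # Column 0 = counter n, column 1 = running product, column 2 = constant 1.
    factorial = [
        Card("*", a=1, b=0, dest=1),           # product = product * n
        Card("-", a=0, b=2, dest=0),           # n = n - 1
        Card("back_if_nonzero", a=0, back=2),  # repeat while n != 0
    ]
    print(run(factorial, [5, 1, 1])[1])  # 5! = 120
```

Keeping the arithmetic in `MILL` and the values in `store` mirrors the separation between mill and store that the quotation describes. The loop in the example program needs the conditional card, which is what distinguishes this kind of machine from the difference engine.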

Questions and Exercises

- There is quite a bit of information in this lecture. So, just make sure you read the material and that you

understand it well.

Picture Credit: National Museum of Science & Industry, London.

Last Modification Date: 13 Aug 2009
