Akamatsu, M., MacKenzie, I. S., & Hasbroucq, T. (1995). A comparison of tactile, auditory, and visual feedback in a pointing task using a mouse-type device. Ergonomics, 38, 816-827.

A Comparison of Tactile, Auditory, and Visual Feedback in a Pointing Task Using a Mouse-Type Device

Motoyuki Akamatsu1, I. Scott MacKenzie2, & Thierry Hasbroucq3

1National Institute of Bioscience and Human-Technology, AIST, MITI, Japan

2Department of Computing & Information Science

University of Guelph, Canada

3Cognitive Neuroscience Laboratory

CNRS, France

Abstract

A mouse was modified to add tactile feedback via a solenoid-driven pin projecting through a hole in the left mouse button. An experiment is described using a target selection task under five different sensory feedback conditions ("normal", auditory, colour, tactile, and combined). No differences were found in overall response times, error rates, or bandwidths; however, significant differences were found in the final positioning times (from the cursor entering the target to selecting the target). For the latter, tactile feedback was the quickest and normal feedback was the slowest. An examination of the spatial distributions in responses showed a peaked, narrow distribution for the normal condition, and a flat, wide distribution for the tactile (and combined) conditions. It is argued that tactile feedback allows subjects to use a wider area of the target and to select targets more quickly once the cursor is inside the target. Design considerations for human-computer interfaces are discussed.

Keywords: tactile feedback, sensory feedback modalities, human-computer interfaces, multi-modal mouse

1. Introduction

While interacting with the natural world, we obtain different modalities of sensory information to inform and guide us. These include primarily the visual, auditory, and tactile modalities. This ensemble of sensory information is easily integrated in the cortex, permitting our psychomotor functions to manipulate objects with great facility. In contrast, the interface between humans and computers is limited in the sensory modalities available. The strong dependence on the visual channel may cause visual fatigue, or may necessitate directing too much attention to the CRT. In many work settings, operators routinely divide attention among many facets of their work. Many of these are "off-screen".

This research investigates the addition of different sensory modalities in a human-computer interface using a modified mouse. We begin with a discussion of some pertinent issues in sensory feedback in the context of mouse-based interfaces. We follow with a description of the means by which tactile stimuli were added to our "multi-modal mouse". Following a brief review of past research in tactile feedback, we describe an experiment aimed at measuring human performance in a routine target acquisition task under different sensory feedback conditions.

1.1 Sensory Feedback in Mouse-Based Interfaces

The recent emergence of the mouse as a pointing device in graphical user interfaces (GUIs) represents a vast improvement on the previous practice of mapping key strokes to cursor movement. In a mouse-based interface, cursor movements follow from hand motion in a two-dimensional space -- the desktop. In manipulating a mouse, we obtain both visual and kinesthetic information of movement and position.

The act of grasping an object such as a mouse issues forth a tactile sensation in addition to the kinesthetic sensation obtained through muscle and joint receptors. This is important, for example, to confirm touching the object, and in this sense tactile information is a substitute for visual information. There is evidence that the addition of tactile information reduces response times in interactive systems (Nelson, McCandlish, and Douglas 1990). When tracing the shape of an object with the finger tip, for example, the addition of tactile information leads to increased velocity in finger movements, and, implicitly, reduces the visual load in completing tasks (Akamatsu 1991).

If we consider the task space into which mouse movements are mapped, we are not so lucky. The task space is presented to us on a CRT as a set of objects, with associated actions, structure, and so forth. The objects possess clear physical properties, such as shape, thickness, colour, density, or contrast. Although a tactile sensation follows from the initial grasp of the mouse, while subsequently maneuvering within the task space, no such sensation follows. When the cursor enters an object, it figuratively "touches" the object. When the cursor moves across a white background vs. a grey or patterned background, it passes over different "textures". Yet no sensory feedback (tactile or otherwise) is conveyed to the hand or fingers on the mouse. This, we conjecture, is a deficiency in the interface -- a deficiency that signals missed opportunities in designing human-computer interfaces.

Currently, the visual channel provides most of the sensory feedback in the task space of a GUI. Still, visual information is usually withheld until an action is initiated, usually by pressing a button. The act of simply touching an object usually carries no added visual sensation; the cursor is superimposed on the target, but the appearance of the target is otherwise unchanged.

Auditory stimuli are used moderately in human-computer interfaces, usually to signal an error or the completion of an operation. Such stimuli are simple to include since speakers are built-in on present-day systems. Gaver (1989) describes a complete GUI -- a modification of the Macintosh's Finder -- using auditory feedback to inform the user of many details of the system, such as file size or the status of file open and close operations. Numerous other examples exist in which auditory stimuli have been exploited as ancillary cues in human-computer systems (e.g., DiGiano 1992).

In a pragmatic sense, the sort of "touch" sensations argued for in the present paper may be realized easily in sound. Tactile sensations are more difficult to implement since modifications to the mouse or additional transducers are required.

In the next section we describe how tactile feedback was added to a conventional mouse. We call the modified mouse a multi-modal mouse or sensory integrative mouse.

1.2 The Multi-Modal Mouse

We modified a standard mouse to present tactile feedback to the index finger.[1] Tactile information is given by means of an aluminum pin (1 mm × 2 mm) projecting from a hole on the left mouse button (see figure 1). The pin is driven by a pull-type solenoid (KGS Corp., 31 mm × 15 mm × 10 mm) via a lever mechanism. The pin is covered by a rubber film fixed to the backside of the mouse button. The film serves to return the pin to its rest position when the control signal is turned off. The modification increased the weight of the mouse by about 30%.

Figure 1. The multi-modal mouse. (a) A pin driven by a solenoid projects through a hole in the left mouse button. (b) Bottom view of the modified mouse. (c) The index finger rests above the hole and receives a tactile sensation when the pin projects upward by 1 mm.

The stroke of the pin is 1 mm when the solenoid is driven by a 12 volt DC signal. The pin rises to 95% of its full stroke within 4 ms of application of the signal.

In the test interface, the visual display is a window containing targets. The targets are shaded in a raised perspective to simulate momentary button-like switches. When selected by pushing the mouse button, the shading disappears and the targets are shown in a flat perspective simulating a button being pressed down. This is the usual visual feedback in GUIs such as Microsoft's Windows or Next's NextStep. Software drivers were written to add tactile information (signal "on") when the tip of the cursor is on a target in the test window. The tactile sense disappears (signal "off") when the cursor leaves the target or the target is selected by pressing the mouse button.
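The on/off rule for the tactile signal can be sketched in a few lines of Python. This is only an illustration of the rule as stated, not the original driver code: the `Rect` type, the function name, and the example coordinates are hypothetical. The sketch also assumes the signal depends only on the current cursor position and button state; the text does not say whether the pin rises again if the button is released while the cursor is still on the target.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned target rectangle in screen pixels (hypothetical type)."""
    left: int
    top: int
    width: int
    height: int

    def contains(self, x: int, y: int) -> bool:
        """True when the point (x, y) lies inside the rectangle."""
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)


def solenoid_signal(target: Rect, x: int, y: int, button_down: bool) -> bool:
    """Return True when the pin should be raised (signal "on").

    The signal is on only while the cursor tip is on the target and the
    mouse button is not pressed. Leaving the target or pressing the
    button turns the signal off.
    """
    return target.contains(x, y) and not button_down


# Hypothetical target position; the 21 x 22 pixel size is from the experiment.
target = Rect(left=100, top=50, width=21, height=22)
print(solenoid_signal(target, 110, 60, button_down=False))  # cursor on target
print(solenoid_signal(target, 110, 60, button_down=True))   # button pressed
print(solenoid_signal(target, 90, 60, button_down=False))   # cursor off target
```

A stateless function keeps the rule easy to test; a real driver would call something like it on every mouse event and switch the solenoid supply accordingly.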

Since the addition of tactile feedback is the most novel in the present study, the next section briefly reviews previous research in this area.

1.3 Tactile Feedback

A simple use of tactile feedback is shape encoding of manual controls, such as those standardized in aircraft to control landing flaps, landing gear, the throttle, etc. (Chapanis and Kinkade 1972). Shape encoding is particularly important if the operator's eyes cannot leave a primary focus point (away from the control) or when operators must work in the dark.

Not surprisingly, systems with tactile feedback, called tactile displays, have been developed as a sensory replacement channel for the handicapped. The most celebrated product is the Optacon, developed by Bliss and colleagues (Bliss et al. 1970). This tactile reading aid, which is still in use, consists of 144 piezoelectric bimorph pins in a 24-by-6 matrix. A single finger is positioned on the array (an output device) while the opposite hand maneuvers an optical pickup (an input device) across printed text. The input/output coupling is direct; that is, the tactile display delivers a one-for-one spatial reproduction of the printed characters. Reading speeds vary, but rates over 70 words/min after 20 hr of practice have been reported (Sorkin 1987).

A tactile display with over 7000 individually moveable pins was reported by Weber (1990). Unlike the Optacon, both hands actively explore the display. With the addition of magnetic induction sensors worn on each index finger, a user's actions are monitored. A complete, multi-modal, direct manipulation interface was developed supporting a repertoire of finger gestures. This amounts to a GUI without a mouse or CRT -- true "touch-and-feel" interaction.

In another 2D application called Sandpaper, Minsky et al. (1990) added mechanical actuators to a joystick and programmed them to behave as virtual springs. When the cursor was positioned over different grades of virtual sandpaper, the springs pulled the user's hand toward low regions and away from high regions. In an empirical test without visual feedback, users could reliably order different grades of sandpaper by granularity.

Some of the most exciting work explores tactile feedback in 3D interfaces. Zimmerman et al. (1987) modified a VPL DataGlove by mounting piezoceramic benders under each finger. When the virtual fingertips touched the surface of a virtual object, contact was cued by a "tingling" feeling created by transmitting a 20-40 Hz sine wave through the piezoceramic transducers. Nevertheless, the virtual hand could still pass through an object. This problem was addressed by Iwata (1990) in a six degree-of-freedom mechanical manipulator with force reflection. In the demonstration interface, users wear a head-mounted display and maneuver a cursor around 3D objects. When the cursor comes in contact with a "virtual" object, it is prevented from passing through the object. The sensation on the user's hand is a compatible, force-generated tactile sense of touching a "real" solid object: the manipulator strongly resists the hand's trajectory into the object, and movement is stopped.

The underlying rationale for much of the research in tactile displays is in maintaining stimulus-response (SR) compatibility in the interface. When a cursor or virtual hand comes in contact with a target, the most direct way to convey this sense to the operator is through a touch sensation in the controlling limb.

In the next section we describe an experiment conducted to test the effect of different sensory modalities on human performance in a routine target acquisition task.

2. Method

2.1 Subjects

Ten subjects participated in the experiment. All subjects were regular users of mice in their daily work.

2.2 Apparatus

The experiment was conducted using the multi-modal mouse described earlier. The host system was a NEC PC9801. A second PC9801 served as a data collection system to sample mouse coordinates (1,000 times per second) and button presses, with data saved in output files for subsequent analysis. Subjects sat in a special isolation room while the experimenter controlling the software sat in an adjoining room.

2.3 Procedure

Subjects performed a routine target selection task, as follows. The experiment screen consisted of a rest rectangle, a target, and a start circle which appeared at the beginning of a trial (see figure 2). The target was 22 pixels high and 21 pixels wide. When ready to begin a trial, subjects positioned the cursor (by manipulating the mouse) in the rest rectangle near the bottom of the screen. Soon after, a small circle appeared below and slightly to the left of the target (25° clockwise from vertical). Subjects then positioned the cursor within the circle. After a random interval of 0.5 to 1.5 seconds, the circle disappeared signaling the start of a trial. Subjects were instructed to move the cursor as quickly and accurately as possible to within the target and select the target by pushing the left mouse button.

Figure 2. The task window consists of a rest rectangle, a start circle (appearing momentarily at the beginning of a trial), and a target.

2.4 Design

The experiment employed a 5 × 2 fully within-subjects factorial design with repeated measures on each condition. The factors were feedback condition with five levels and target distance with two levels. The five feedback conditions were

| Condition | Feedback while the cursor was over the target |
|---|---|
| "normal" | no additional feedback indicating the cursor was over the target |
| auditory | a 2 kHz tone was heard while the cursor was inside the target |
| tactile | the pin under the finger tip pressed upward presenting a tactile sensation to the finger while the cursor was over the target |
| visual | the shading of the target changed while the cursor was over the target |
| combined | a combination of the auditory, tactile, and visual stimuli described above |

For the auditory condition the tone was 2 kHz with an intensity of 46 dB(A). The room had an ambient sound pressure level of 33 dB(A).

For the visual condition, the brightness of the target increased. In the normal condition, the brightness of the main area of the target was 6.0 cd/m². The raised perspective was achieved by displaying the top and right edges at 15.0 cd/m² while the bottom and left edges were shadowed at 1.7 cd/m². Under the visual feedback condition, the brightness of the main area increased to 15.0 cd/m². The top and right edges intensified to 35.5 cd/m²; the bottom and left edges to 6.7 cd/m².

In all conditions, the target appearance changed to "flat" (6.0 cd/m²) while the mouse button was pressed, as described earlier.

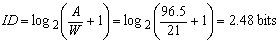

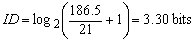

The distance from the start circle to the target was either 96.5 pixels or 186.5 pixels, computed using the Pythagorean identity. The distances yield two distinct task difficulties which were quantified using a variation of Fitts' index of difficulty (Fitts 1954). In the present experiment, we used the Shannon formulation with the two dimensional interpretation given by MacKenzie and Buxton (1992). The close target task had a difficulty of

| (1) |

where "W" is the smaller of the target width or target height (21 pixels). The far target task had a difficulty of

| (2) |
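The two task difficulties can be checked numerically. The sketch below assumes the usual form of the Shannon formulation, ID = log2(A/W + 1), with A the distance to the target and W the smaller target dimension; the function and variable names are ours, not from the experiment software.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance / width + 1)


# W is the smaller of the target width (21 pixels) and height (22 pixels).
W = min(21, 22)

for label, A in (("close", 96.5), ("far", 186.5)):
    print(f"{label} target: ID = {index_of_difficulty(A, W):.2f} bits")
```

The values come out near 2.5 bits for the close target and 3.3 bits for the far target.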

In each of five days of testing, subjects received a block of 40 trials for one feedback condition. The two distances were presented randomly for a total of 20 trials for each distance. The order of feedback conditions was randomized with a different modality given each day. Each subject performed 5 × 2 × 20 = 200 trials in one session of the experiment. All subjects participated in two sessions of the experiment.
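The trial schedule for one session can be sketched as follows. This is an illustration of the design as described (one feedback condition per day in random order, 40 trials per day, 20 at each distance in random order); the function name, the tuple layout, and the use of a seeded generator are assumptions made for the example.

```python
import random

CONDITIONS = ["normal", "auditory", "tactile", "visual", "combined"]
DISTANCES = [96.5, 186.5]   # pixels
TRIALS_PER_DISTANCE = 20


def make_session(rng: random.Random) -> list[tuple[int, str, float]]:
    """Build one session as a list of (day, condition, distance) trials."""
    order = CONDITIONS[:]
    rng.shuffle(order)                      # one condition per day, random order
    schedule = []
    for day, condition in enumerate(order, start=1):
        block = DISTANCES * TRIALS_PER_DISTANCE   # 20 trials at each distance
        rng.shuffle(block)                        # distances presented randomly
        schedule.extend((day, condition, d) for d in block)
    return schedule


session = make_session(random.Random(0))
print(len(session))  # 5 conditions x 2 distances x 20 trials = 200
```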

On each trial the following measurements were taken:

| Measurement | Description |
|---|---|
| total response time | the total time to complete the trial, from the cursor leaving the start circle until pushing the button |
| final positioning time | the time to complete the trial once the cursor entered the target region |
| x | the x coordinate of selection |
| y | the y coordinate of selection |
| error rate | computed from the x, y selection coordinates |
| bandwidth | (see below) |
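The timing measures can be derived from the sampled mouse data roughly as follows. This is a minimal sketch, not the analysis code used in the study: the `Sample` record, the function names, and the geometry in the usage example are hypothetical, and final positioning time is taken from the first sample inside the target, which is one possible reading of "once the cursor entered the target region".

```python
import math
from typing import NamedTuple, Optional


class Sample(NamedTuple):
    """One record from the data collection system (hypothetical layout)."""
    t_ms: int
    x: float
    y: float
    button_down: bool


def in_circle(s: Sample, cx: float, cy: float, r: float) -> bool:
    return math.hypot(s.x - cx, s.y - cy) <= r


def in_rect(s: Sample, left: float, top: float, width: float, height: float) -> bool:
    return left <= s.x < left + width and top <= s.y < top + height


def trial_measures(samples, circle, target):
    """Return (total_ms, final_ms, hit) for one trial.

    total_ms: from the cursor leaving the start circle to the button press.
    final_ms: from the first sample inside the target to the button press,
              or None if the cursor never entered the target before the press.
    hit:      True if the selection coordinates fall inside the target.
    Assumes the trial contains a button press and a departure from the circle.
    """
    press = next(s for s in samples if s.button_down)
    leave = next(s for s in samples if not in_circle(s, *circle))
    entry: Optional[Sample] = next(
        (s for s in samples if s.t_ms <= press.t_ms and in_rect(s, *target)),
        None,
    )
    total_ms = press.t_ms - leave.t_ms
    final_ms = None if entry is None else press.t_ms - entry.t_ms
    return total_ms, final_ms, in_rect(press, *target)


# Synthetic trial at 1,000 samples per second: the cursor moves straight up
# one pixel per millisecond and the button is pressed at t = 190 ms.
samples = [Sample(t, 100.0, 300.0 - t, t >= 190) for t in range(200)]
circle = (100.0, 300.0, 5.0)        # centre x, centre y, radius (hypothetical)
target = (90.0, 100.0, 21.0, 22.0)  # left, top, width, height
print(trial_measures(samples, circle, target))  # (184, 11, True)
```

The error rate for a condition is then the proportion of trials for which `hit` is false.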

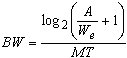

In the human motor-sensory domain, bandwidth is a measure widely used in human factors/ergonomics (e.g., Card et al. 1978). The calculation used in the present study is a composite of both the speed and accuracy of responses. It was a variation of Fitts' index of difficulty, adjusted for accuracy (MacKenzie 1992b, Welford 1968). Bandwidth was calculated as

| (3) |

where We is the "effective target width", adjusted for a nominal error rate of 4%. We is calculated as 4.133 times the standard deviation in the spatial distribution of selections.
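A short sketch of the effective-width and bandwidth calculation follows. Two points are assumptions rather than details confirmed by the text: the sample (n - 1) standard deviation is used, and bandwidth is taken as the effective index of difficulty, log2(A/We + 1), divided by the mean response time in seconds, following the convention in MacKenzie (1992b). The selection coordinates in the usage example are made up for illustration.

```python
import math
import statistics


def effective_width(coords: list[float]) -> float:
    """Effective target width: 4.133 times the SD of the selection coordinates."""
    return 4.133 * statistics.stdev(coords)   # sample SD (an assumption)


def bandwidth(distance: float, coords: list[float], mean_time_s: float) -> float:
    """Bandwidth in bits/s, assuming IDe / MT with IDe = log2(A / We + 1)."""
    ide = math.log2(distance / effective_width(coords) + 1)
    return ide / mean_time_s


# Hypothetical y selection coordinates (pixels) for the close target.
ys = [108.0, 112.5, 110.0, 115.0, 104.5, 111.0, 109.5, 113.0]
print(f"We = {effective_width(ys):.1f} pixels")
print(f"bandwidth = {bandwidth(96.5, ys, 0.575):.2f} bits/s")
```

The constant 4.133 spans the central 96% of a normal distribution, which is why We corresponds to a nominal 4% error rate.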

At the end of the experiment each subject was asked to rank the five feedback conditions by order of preference.

3. Results and Discussion

Only the second session of the experiment was analysed, in order to avoid examining the first exposure of subjects to a new sensory feedback condition. The mean response time was 658 ms. The results of an ANOVA indicated no significant difference in total response times across the five feedback conditions (F4,36 = 0.97). As expected, the far target took longer to select than the close target (741 ms vs. 575 ms; F1,9 = 207.78, p < .0001).

The mean error rate was 4%. Again, there was no significant difference across the five feedback conditions (F4,36 = 1.98, p > .05). Error rates were higher for the close target than for the far target (F1,9 = 11.25, p < .01). Although the latter effect is surprising, no specific explanation is offered at the present time.

The mean bandwidth was 3.8 bits/s, a figure similar to those reported elsewhere for the mouse in point-select tasks (see MacKenzie, 1992a, for results from five studies). Although bandwidth did not differ significantly across the five feedback conditions (F4,36 = 0.54), there was a significant effect for target distance with a higher bandwidth on the close target (4.2 bits/s vs. 3.3 bits/s, F1,9 = 109.47, p < .0001).

3.1 Final Positioning Time

Although initially the results above do not bode well for the different feedback conditions tested herein, further analysis reveals some interesting characteristics of the behavioural responses. Final positioning times were compared in an effort to learn what effect the different sensory modalities might have on the final phase of target acquisition; that is, following the onset of the added sensory feedback. The data in figure 3 show that the final positioning time was least for the tactile condition (237 ms) and highest for the normal condition (298 ms). The difference is statistically significant (F4,36 = 4.90, p < .005). Overall, the ranking was tactile, combined, auditory, colour, and normal.

Figure 3. Final positioning times. Tactile feedback yielded the quickest responses (237 ms), and normal feedback the slowest (298 ms). Vertical lines show 95% confidence intervals.

This effect is evidence that the addition of tactile stimuli (and to a lesser extent, auditory and colour stimuli) yields quicker motor responses. No doubt, this is due to the close SR compatibility in the task: the stimulus was applied to the finger, the response followed from the finger. The quicker responses were observed only on the final positioning times; however, this measurement is the best indicator of the effect of the sensory modality. There is no reasonable basis to expect the different sensory modalities to affect movement time prior to the onset of sensory feedback. The movement prior to reaching the target area is (or may be) controlled by visual information only and, thus, the difference among sensory feedback conditions becomes pronounced only when the cursor has arrived within the target area.

3.2 Effective Target Width

To further investigate the behavioural responses under the different sensory modalities, we analysed the effective target widths and examined the distribution of selection coordinates. Figure 4 shows the effective target widths by feedback condition. The narrowest We was for the normal condition (20.7 pixels). The widest We was for the combined condition (25.1 pixels), and the second widest was for the tactile condition (23.9 pixels). The differences were statistically significant (F4,36 = 4.37, p < .005).

Figure 4. The effective target width. The combined feedback condition yielded the widest target width (25.1 pixels), the normal feedback condition the narrowest (20.7 pixels). The vertical lines show 95% confidence intervals.

We interpret this as follows: In the normal feedback condition, there is a trend in the data suggesting that subjects positioned the cursor in the centre of the target (or nearby) before selecting the target. This is due to the relative paucity of feedback stimuli to inform the subject that the target has been reached. On the other hand, when additional feedback stimuli are presented, the "on-target" condition is sensed earlier and more completely; hence target selection can proceed over a wider area of the target (figure 4), and the button press/release operation can be performed more quickly once the cursor is inside the target (figure 3).

As an example of the distribution of selections, figure 5 compares the y selection coordinates for the normal and tactile feedback conditions. The y coordinates are used since the primary axis of movement was vertical; thus target height (along the y axis) was like target width in the Fitts' paradigm. (There is a similar but less apparent effect if the x coordinates are plotted.) The cause of the effect postulated in the previous paragraph is easily seen in the figure. The distribution under the normal condition (figure 5a) was more peaked; subjects tended to complete their moves in the centre of the target. Under the tactile condition (figure 5b), the distribution was flatter; subjects tended to use more of the target. Since accuracy in target selection tasks is only meaningful in the "hit" or "miss" sense, there are obvious benefits in using more of the target area, one being the use of large targets to elicit faster responses. When tactile sensations are exploited, wider targets also permit greater response noise (spatial variability) without loss of feedback. This is important, for example, if the operator's visual focus shifts away from the target.

Figure 5. Distribution of selection coordinates. (a) The normal condition had a narrow, peaked distribution. (b) The tactile condition had a wide, flat distribution.

Finally, on their preferred choice of feedback, subjects ranked colour first, then tactile, then sound and combined feedback (tied). Normal feedback was the least preferred.

4. Conclusions

Although one might argue that the tactile vs. normal difference in final positioning times is slight (61 ms), we add that the arguments presented herein are not for dramatic performance differences when different sensory modalities are added in human-computer interfaces. Indeed, large performance differences were not expected in the present experiment. The task was simple and void of characteristics wherein sensory modalities could mate in appropriate and somewhat complex ways with interactive tasks. For example, if the experimental task had required subjects to remain "on-target" for a period of time while performing a secondary task, such as using a pencil in the other hand to tick a box, the performance benefits of tactile (and auditory) feedback would likely be more dramatic.

The effect of combined sensory feedback on final positioning time and effective target width was almost the same as for tactile feedback alone. Even though there was an effect for each sensory feedback condition (tactile, auditory, visual), the effect of the combined feedback condition was about the same as the most effective single condition. This suggests that there isn't an additive effect in combining sensory information on the task. We feel the operator utilizes the most effective information (i.e., tactile feedback) among the sensory information available.

In a complete human-machine interface, the use of non-visual feedback modalities (such as auditory or tactile feedback) is expected to yield performance improvements in cases where the visual channel is near capacity. This will occur, for example, if the operator's attention is divided among different regions of the CRT display or among multiple tasks. Ancillary tasks could be on-screen (e.g., monitoring the progress of multiple tasks in a multi-task system) or off-screen (e.g., bank tellers, airline reservation operators, air-traffic controllers). Alternate sensory modalities (especially the tactile sense) are felt to offer tremendous potential in the overall performance of systems such as these. Operators will be able to remain "on-target" while fixating on another component of their work.

Although the tactile information given in our experiment was controlled by an on-off signal, our multi-modal mouse can also display texture or other surface information. This is achieved by providing to the actuator a continuously varying signal with amplitude and frequency corresponding to the characteristics of the screen surface. This idea is similar to the joystick prototype of Minsky et al. (1990). We have implemented an interface to demonstrate this effect. Without visual feedback, users can easily differentiate among several surface textures just by moving the cursor over the textured surface.
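One way such a continuously varying signal could be generated is sketched below. Everything specific here is hypothetical: the sinusoidal waveform, the texture names, and their amplitude and frequency values are invented for illustration and are not taken from the demonstration interface. Only the 12 volt supply comes from the description of the solenoid drive.

```python
import math

# Hypothetical texture table: name -> (relative amplitude 0..1, frequency in Hz).
TEXTURES = {
    "smooth": (0.0, 0.0),
    "fine": (0.4, 80.0),
    "coarse": (1.0, 20.0),
}


def pin_drive(t_s: float, amplitude: float, frequency_hz: float,
              supply_volts: float = 12.0) -> float:
    """Drive voltage at time t_s for a texture with the given parameters.

    The sine wave is shifted into the range 0..1 so that the voltage never
    goes negative, then scaled by the relative amplitude and the supply.
    """
    level = 0.5 * (1.0 + math.sin(2.0 * math.pi * frequency_hz * t_s))
    return supply_volts * amplitude * level


amplitude, frequency = TEXTURES["coarse"]
for ms in (0, 10, 20, 30):
    print(f"t = {ms:2d} ms: {pin_drive(ms / 1000.0, amplitude, frequency):.2f} V")
```

In an interface, the texture under the cursor would select the table entry and the drive level would be updated on each sample.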

Auditory feedback is problematic mainly because it is disturbing to persons nearby. Nevertheless, we did observe a reduction in final positioning time in the presence of audio feedback.

Visual feedback also reduced final positioning times; however, the addition of visual feedback will serve to increase the visual load on the operator. Both visual and auditory feedback for motor output tasks suffer in SR compatibility. Tactile feedback for motor responses maintains SR compatibility and should be encouraged whenever its integration into the human-machine interface is possible.

Acknowledgement

We would like to thank the NSERC Japan Science and Technology Fund for assistance in conducting this research.

References

AKAMATSU, M. 1991, The influence of combined visual and tactile information on finger and eye movements during shape tracing, Ergonomics, 35, 647-660.

AKAMATSU, M. and SATO, S. 1992, Mouse-type interface device with tactile and force display -- multi-modal integrative mouse, Proceedings of the Second International Conference on Artificial Reality and Tele-Existence (ICAT '92), 178-182.

BLISS, J. C., KATCHER, M. H., ROGERS, C. H. and SHEPPARD, R. P. 1970, Optical-to-tactile image conversion for the blind, IEEE Transactions on Man-Machine Systems, MMS-11, 58-65.

CARD, S. K., ENGLISH, W. K. and BURR, B. J. 1978, Evaluation of mouse, rate-controlled isometric joystick, step keys, and text keys for text selection on a CRT, Ergonomics, 21, 601-613.

CHAPANIS, A. and KINKADE, R. G. 1972, Design of controls, In H. P. Van Cott and R. G. Kinkade (Eds.), Human engineering guide to equipment design, 345-379, Washington, DC: U.S. Government Printing Office.

DIGIANO, C. 1992, Program auralization: Sound enhancements to the programming environment, Proceedings of Graphics Interface '92, 44-52, Toronto: Canadian Information Processing Society.

FITTS, P. M. 1954, The information capacity of the human motor system in controlling the amplitude of movement, Journal of Experimental Psychology, 47, 381-391.

GAVER, W. 1989, The Sonic Finder: An interface that uses auditory icons, Human-Computer Interaction, 4, 67-94.

IWATA, H. 1990, Artificial reality with force-feedback: Development of desktop virtual space with compact master manipulator, Computer Graphics, 24(4), 165-170.

MACKENZIE, I. S. 1992a, Movement time prediction in human-computer interfaces, Proceedings of Graphics Interface '92, 140-150, Toronto: Canadian Information Processing Society.

MACKENZIE, I. S. 1992b, Fitts' law as a research and design tool in human-computer interaction, Human-Computer Interaction, 7, 91-139.

MACKENZIE, I. S. and BUXTON, W. 1992, Extending Fitts' law to two dimensional tasks, Proceedings of the CHI '92 Conference on Human Factors in Computing Systems, 219-226, New York: ACM.

MINSKY, M., OUH-YOUNG, M., STEELE, O., BROOKS, F. P., Jr. and BEHENSKY, M. 1990, Feeling and seeing: Issues in force display, Computer Graphics, 24(2), 235-270.

NELSON, R. J., MCCANDLISH, C. A. and DOUGLAS, V. D. 1990, Reaction times for hand movements made in response to visual versus vibratory cues, Somatosensory and Motor Research, 7, 337-352.

SORKIN, R. D. 1987, Design of auditory and tactile displays, In G. Salvendy (Ed.), Handbook of human factors, 549-576, New York: Wiley.

WEBER, G. 1990, FINGER: A language for gesture recognition, Proceedings of INTERACT '90, 689-694, Amsterdam: Elsevier Science.

WELFORD, A. T. 1968, The fundamentals of skill, London: Methuen.

ZIMMERMAN, T. G., LANIER, J., BLANCHARD, C., BRYSON, S. and HARVILL, Y. 1987, A hand gesture interface device, Proceedings of the CHI+GI '87 Conference on Human Factors in Computing Systems, 189-192, New York: ACM.