Fitton, D., MacKenzie, I. S., Read, J. C., & Horton, M. (2013). Exploring tilt-based text input for mobile devices with teenagers. Proceedings of the 27th International British Computer Society Human-Computer Interaction Conference – HCI 2013, pp. 1-6. London: British Computer Society. doi:10.14236/ewic/HCI2013.34

Exploring Tilt-Based Text Input For Mobile Devices With Teenagers

Dan Fitton,1 I. Scott MacKenzie,2 Janet C. Read,1 & Matthew Horton1

1ChiCI Research Group, University of Central Lancashire

Preston, UK, PR1 2HE

{DBFitton, JCRead, MPLHorton}@UCLan.ac.uk

2Dept. of Computer Science and Engineering

York University, Toronto, Canada

mack@cse.yorku.ca

Abstract: Most modern tablet devices and phones include tilt-based sensing, but to date tilt is primarily used either for input with games or for detecting screen orientation. This paper presents the results of an experiment with teenage users to explore a new tilt-based input technique on mobile devices intended for text entry. The experiment considered the independent variables grip (one-handed, two-handed) and mobility (sitting, walking), giving four conditions. The study involved 52 participants aged 11-16 carrying out multiple target selection tasks in each condition. Performance metrics derived from the data collected during the study revealed interesting quantitative findings, with the optimal condition being sitting using a two-handed grip. While walking, task completion time was 22.1% longer and error rates were 63.9% higher, compared to sitting. Error rates were 31.4% lower using a two-handed grip, compared to a one-handed grip. Qualitative results revealed a highly positive response to target selection performed using the method described here. This paper highlights the potential value of tilt as a technique for text input for teenage users.

Keywords: Mobile text input, tilt-input, teenage users, performance measurement

1. INTRODUCTION

Tilt sensing is supported by the majority of modern mobile phones and tablet devices and is motivated primarily by the need to maintain correct screen orientation as a device is rotated. Tilt sensing is now commonplace as an input method for games on mobile devices (i.e., tilting mapped to specific game input) and is established as an input method on platforms such as the Nintendo Wii and the Sony PlayStation 3. Tilt has not yet been extensively explored as a text input method for mobile devices but offers certain advantages over on-screen keyboards, such as not requiring the user to physically touch (and obscure) the screen, as well as the potential for eyes-free input.

This paper builds on previous empirical research on tilt-input for mobile devices (MacKenzie & Teather 2012) and investigates the performance of tilt-based target selection intended for text input on mobile devices, with users aged 11-16. Teenagers and "tweenagers" (ages 10-13) have been chosen as participants in this work as this population is known for their heavy use of text input on mobile devices and their early adoption of new technologies (Yardi & Bruckman 2011). For example, a 2012 study of North American teenagers aged 13-17 (see footnote 1) showed that 68% sent text messages daily, 58% used social networking daily, and 43% regularly used their mobile device for social networking. In addition to their heavy use of these devices, where new input technologies are welcomed, these users – soon to be adults – are ideal candidates for involvement in, and commentary on, the design and evaluation of future technologies. The study described here explores future possibilities by studying two independent variables relevant to mobile text input, grip (one-handed, two-handed) and mobility (walking, sitting), and adds commentary on use by gathering the opinions of the participants.

2. RELATED WORK

Several examples of tilt-based text input techniques for mobile devices have been explored previously, but the large majority of these studies have used small watch- or phone-sized devices. TiltType (Partridge et al. 2002) is intended for small wrist-worn devices and uses a combination of accelerometer sensing and buttons for character selection. In UniGesture (Sazawal et al. 2002), which is also intended for very small devices, characters are grouped into seven input zones and a recognition algorithm is used for word prediction. In common with the work presented herein, UniGesture adopts a "rolling marble" paradigm for selection. The TiltText system (Wigdor & Balakrishnan 2003) augments standard 12-button text entry on mobile devices with tilt input to disambiguate character selections, which was shown to improve input speed. Exploration in the area of gaming has shown that, in the context of a mobile driving game (Gilbertson et al. 2008), tilt-based input enhances the user experience when compared to key-based input. Other work has shown that within a shooting game supporting tilt, gesture, and button-based input on an iPod device (Browne & Anand 2011), tilt-input was preferred by participants and enabled longer periods of play. Previous work has explored wrist-tilt performance using "rolling marble" target acquisition tasks on early PDA devices (Crossan & Murray-Smith 2004). That study was carried out with seated participants and found that selection tasks in the upper half of the screen showed poorer performance and greater variability in cursor path. The work on GesText (Jones et al. 2010) provided a set of design factors for accelerometer text entry drawn from previous research and, using a relatively complex gesture set, studied the use of a Wiimote for mid-air text entry based on tilt gestures. The key finding from this work was that the performance of participants improved with regular usage.

Work to date has not empirically investigated tilt-based input in the manner presented in this paper, that is, with small-tablet format devices. Additionally, no previous work has engaged teenagers as participants in this kind of tilt-input study.

3. METHOD

In this section we describe the methodology for the user study. The two independent variables explored in this study were grip (one-handed, two-handed) and mobility (sitting, walking), giving four conditions (sitting 1-handed, sitting 2-handed, walking 1-handed, and walking 2-handed).

3.1 Participants

The 52 participants were all from the same UK school and aged between 11 and 16 years (mean age 12.5). 56% of the participants were male; all participants owned a mobile phone and of these 71% had touch screen devices. All 52 participants had previously played a game utilizing tilt-input on one or more of the expected platforms: phones, tablets, the Nintendo Wii, and the Sony PS3. No formal selection criteria were used in choosing participants, who were sent from their regular school lessons by their teacher. The teacher was given no instruction as to which children to send, and so may have made some selection decisions herself. Participation was voluntary to the extent that the teacher only sent willing participants and that participants were allowed to leave once in the experimental setting; however, as the study was carried out in a school, the extent of their ability to decline is always somewhat contentious. No financial or other reward was given for participation, although the chance to leave class could be construed as a partial incentive.

3.2 The Experimental Interface

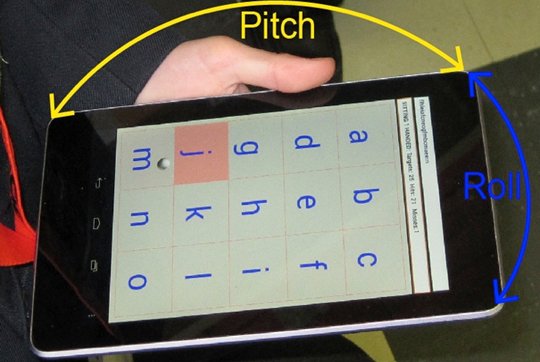

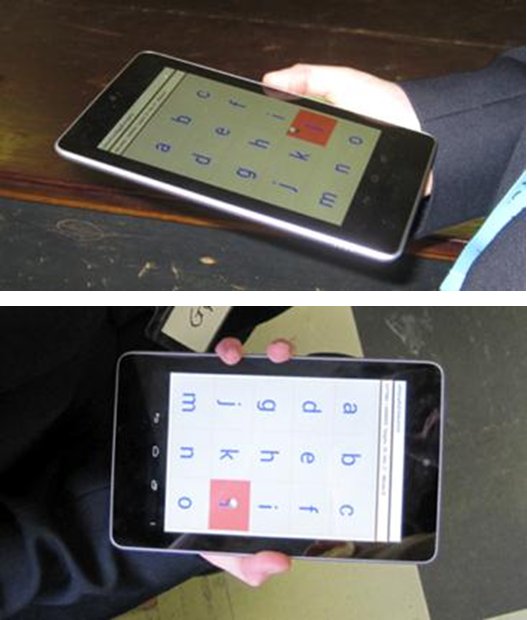

Many tilt-based games map the tilt of the device relative to a horizontal axis onto a game element (e.g., a platform) and use simulated physics to control the motion of other game elements (e.g., ball motion under gravity and respecting momentum). The control method used in this study is different: A position-control mapping is used whereby the tilt of the device directly maps to the position of the ball. This ensures that the position of the ball can be changed rapidly and can be controlled with ease. The zero position of the interface (when the ball is in the centre of the screen) occurs when the device is level (i.e., parallel to the ground) in both the pitch and roll axes.

The tilt-input application (shown in Figure 1) was developed for the Android platform and directly mapped the pitch and roll of the device (sensed using the internal accelerometer) to the position of a ball shown on the screen. To begin a task, the ball was positioned in one corner of the screen; this prevented the accidental starting of tasks. The application guided the participants through tasks, requiring them to select 25 targets, in sequence, within a 5 × 3 grid which was shown on the interface. Selection was achieved by positioning the ball within the appropriate square (acquisition) and maintaining it within the square for 500 ms (dwell). Each target was intended to represent a character and was approximately 3 cm × 2.5 cm. The targets to select were randomly selected by the application and were indicated on the interface by a highlight in the appropriate square (in Figure 1 the participant is attempting to select the square corresponding to the character "j"). When the ball entered the boundary of the target square the highlighting was made more prominent, and on successful selection auditory feedback was provided (a short "click" sound). The application allowed all "characters" on the interface to be selected throughout the task, with erroneous selections recorded. After each successful selection the corresponding character appeared at the top of the screen. The number of remaining targets was displayed on the interface along with the task condition. As the participant completed the task, data were collected and recorded in local files on the device.
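The position-control mapping and dwell selection described above can be sketched as follows. This is a minimal illustration, not the study's Android application: the 5 × 3 grid and the 500 ms dwell come from the text, while the tilt range `MAX_TILT_DEG`, the axis orientation, and all function and class names are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# From the text: a 5 x 3 grid of targets and a 500 ms dwell time.
COLS, ROWS = 5, 3
DWELL_MS = 500
# Assumption: the tilt angle (degrees) that moves the ball to a screen edge.
MAX_TILT_DEG = 20.0


def tilt_to_position(pitch_deg: float, roll_deg: float) -> Tuple[float, float]:
    """Position control: tilt maps directly to a normalized (x, y) in [0, 1].

    A level device (pitch = roll = 0) places the ball at the screen centre.
    Which tilt direction maps to which screen direction is an assumption.
    """
    def norm(angle: float) -> float:
        clamped = max(-MAX_TILT_DEG, min(MAX_TILT_DEG, angle))
        return 0.5 + clamped / (2 * MAX_TILT_DEG)

    return norm(roll_deg), norm(pitch_deg)


def cell_at(x: float, y: float) -> int:
    """Row-major index of the grid cell containing a normalized position."""
    col = min(int(x * COLS), COLS - 1)
    row = min(int(y * ROWS), ROWS - 1)
    return row * COLS + col


@dataclass
class DwellSelector:
    """Fires one selection when the ball stays in a cell for DWELL_MS."""
    current_cell: Optional[int] = None
    entered_at_ms: float = 0.0
    fired: bool = False

    def update(self, cell: int, now_ms: float) -> Optional[int]:
        if cell != self.current_cell:
            # Ball entered a new cell: restart the dwell timer.
            self.current_cell = cell
            self.entered_at_ms = now_ms
            self.fired = False
            return None
        if not self.fired and now_ms - self.entered_at_ms >= DWELL_MS:
            self.fired = True  # at most one selection per visit to a cell
            return cell
        return None
```

In this sketch each sensor reading is passed through `tilt_to_position` and `cell_at`, and the resulting cell index is fed to `DwellSelector.update` together with a timestamp; a non-`None` return value marks a selection, which may or may not be the highlighted target.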

Figure 1. Participant completing tilt input task (pitch and roll axis indicated).

3.3 Procedure

The study involved groups of four participants (of the same age) working simultaneously through the four conditions (seated with one-handed grip, seated with two-handed grip, walking with one-handed grip, walking with two-handed grip), with the order of conditions determined by a balanced Latin square. The participants began seated, where they were introduced to the purpose of the study and given a questionnaire with preliminary items on age, sex, mobile phone usage, and experience using tilt-input. They were then given a brief demonstration and key information about the task (e.g., to walk around the outside of the square marked out on the floor for the walking tasks, to assume the grip/mobility for the next condition before starting the task, and to stop at any time if needed). The participants were then given a device each and allowed to complete a practice task of selecting 25 targets. This lasted approximately 1 minute; the data from this phase were recorded but are not used in this paper.
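For readers unfamiliar with the counterbalancing scheme, a balanced Latin square for the four conditions can be generated as in the sketch below. It uses the standard construction for an even number of conditions; the function name and the condition labels are chosen for illustration, and how the study assigned participants to rows is not stated in the text.

```python
from typing import List

# Condition labels follow the four conditions named in the text.
CONDITIONS = [
    "sitting 1-handed",
    "sitting 2-handed",
    "walking 1-handed",
    "walking 2-handed",
]


def balanced_latin_square(n: int) -> List[List[int]]:
    """Return an n x n balanced Latin square (n must be even).

    Each condition appears once in every row and column, and each
    condition immediately precedes every other condition exactly once.
    """
    if n < 2 or n % 2 != 0:
        raise ValueError("this construction requires an even n >= 2")
    # First row interleaves low and high indices: 0, 1, n-1, 2, n-2, ...
    first = [0]
    low, high = 1, n - 1
    while len(first) < n:
        first.append(low)
        low += 1
        if len(first) < n:
            first.append(high)
            high -= 1
    # Each later row shifts every entry of the first row by one (mod n).
    return [[(c + r) % n for c in first] for r in range(n)]


# One ordering of conditions per row of the square.
ORDERS = [[CONDITIONS[i] for i in row] for row in balanced_latin_square(4)]
```

Each row of `ORDERS` is one sequence of conditions; cycling participants (or groups) through the four rows balances both the position of each condition and which condition it follows.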

Figure 2. Area for walking task.

After the practice task, the participants completed two tasks (25 inputs per task) in each condition before moving on to the next. Each participant used an identical Google Nexus 7 (small tablet format; see footnote 2) Android device. In the sitting condition the participants were seated on chairs. In the walking condition participants walked around the outside of a 2.5 m square marked out with chairs at the corners and white tape on the floor (Figure 2). The application guided the participant through to completion of the tasks in each of the conditions in the correct order (maintaining balancing of tasks) with textual instructions on the screen. On completion of all four conditions (8 × 25 target selections in total), the participants answered questions based on the ISO 9241-9 standard for evaluating input, with responses on a 5-point scale. The final question asked whether the participant would like to use this selection method for text input on his or her phone. The study was designed to last approximately 15 minutes per group to ensure that the teenagers did not tire or lose interest.

4. RESULTS

No problems were encountered in the study procedure or during data collection. The participants were observed while carrying out the tasks and all age groups were able to follow the instructions for each task independently.

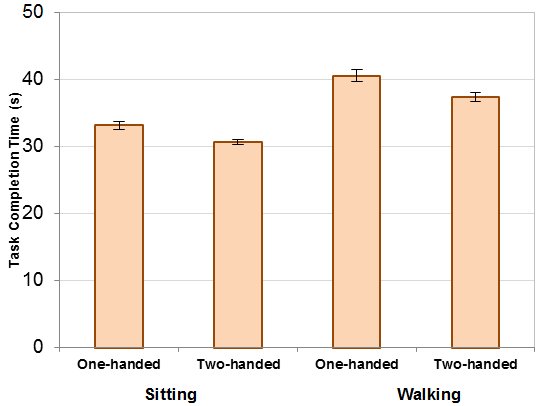

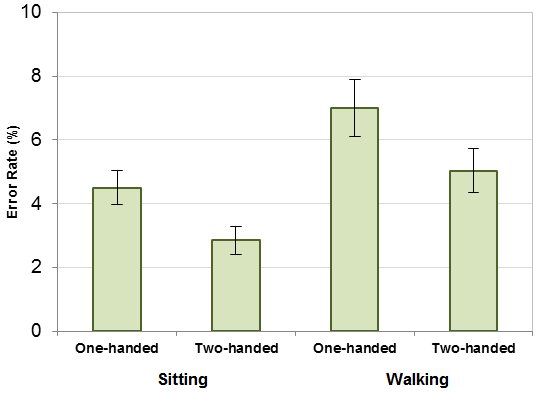

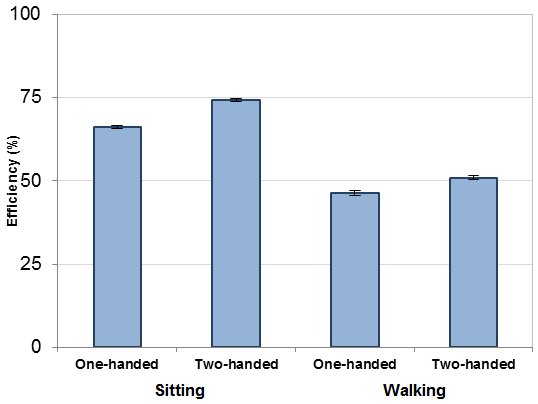

The data recorded in the log files of the tilt-input application included targets expected, targets selected, the efficiency of the path taken (by the ball), and the timing of character input. The simplest performance metric was task completion time; the results are shown in Figure 3. Two other performance metrics were error rate (Figure 4) and efficiency of ball movement (Figure 5). Error rate in this context is the ratio of inadvertent, or extra, selections to correct selections. As there were always 25 correct selections per task, 2 extra inadvertent selections would result in an error rate of 2 / 25 = 8%. Efficiency of ball movement is the ratio of the minimum distance between targets to the actual distance moved by the ball. 100% efficiency implies a straight-line movement from the centre of each target to the next target.
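The two derived metrics can be expressed compactly, as in the sketch below. The definitions follow the text (extra selections over correct selections; minimum distance over actual distance), but the function names and the representation of the ball path as a list of (x, y) samples are assumptions, since the log file format is not described.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def error_rate(extra_selections: int, correct_selections: int = 25) -> float:
    """Ratio of inadvertent (extra) selections to correct selections."""
    return extra_selections / correct_selections


def path_length(points: List[Point]) -> float:
    """Total length of the polyline through the given points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def movement_efficiency(target_centres: List[Point], ball_path: List[Point]) -> float:
    """Minimum (straight-line) distance between successive target centres
    divided by the distance the ball actually travelled."""
    actual = path_length(ball_path)
    if actual == 0:
        return 0.0  # no movement recorded; efficiency is undefined, report 0
    return path_length(target_centres) / actual


# Worked example from the text: 2 extra selections in a 25-target task.
example_error = error_rate(2)  # 0.08, i.e. 8%
```

For instance, a ball that travels 7 units along two sides of a 3-4-5 triangle to reach a target 5 units away would have an efficiency of 5 / 7, or about 71%.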

Figure 3. Mean task completion times for all conditions. Error bars show ±1 SD.

Figure 4. Mean error rates for all conditions. Error bars show ±1 SD.

Figure 5. Mean efficiency rates for all conditions. Error bars show ±1 SD.

None of the group effects was statistically significant (task completion time: F(3,48) = 0.393, ns; error rate: F(3,48) = 1.87, ns; efficiency: F(3,48) = 0.358, ns), implying that counterbalancing had the desired effect of cancelling out learning effects between the four test conditions. The main effects for mobility (sitting vs. walking) and grip (one-handed vs. two-handed) were statistically significant for all three dependent variables (task completion time: mobility, F(1,48) = 155.6, p < .0001; grip, F(1,48) = 59.1, p < .0001; error rate: mobility, F(1,48) = 7.23, p < .001; grip, F(1,48) = 10.76, p < .05; efficiency: mobility, F(1,48) = 614.9, p < .0001; grip, F(1,48) = 172.7, p < .0001). From the data collected, task completion time was shown to be 22.1% longer while walking than sitting, and 7.7% shorter in the two-handed grip tasks compared to one-handed grip. The error rate (number of inadvertent characters input) was 63.9% higher while walking than sitting, and 31.4% lower in the two-handed grip tasks compared to one-handed grip. The efficiency of ball movement (directness of ball path between targets) was 30.6% lower in the walking conditions than sitting, and 11.5% higher in the two-handed grip tasks compared to one-handed grip.

The post-study survey was intended to provide a measure of overall subjective satisfaction with the input method, with the final question asking each participant if they would like to use this input method on their own phone. The responses, which show enthusiasm for tilt-based text input as an idea, are shown in Figure 6.

Figure 6. Responses: "I would like to use tilt-input on my phone".

5. DISCUSSION

All participants were able to complete the tasks in each condition without problems. Observations from the study revealed that no participants had issues "learning" the input technique. Completing the task while walking gave a relatively low mean increase in task completion time of 22.1% (an additional 7 seconds) compared to sitting. When seated, the participants had no distractions and had complete control over the movement of the device. When walking, the movement of the body is partly transmitted to the device, making accurate tilt-input more challenging. The participants also had to navigate around the course, turning corners and often maintaining pace with, or overtaking, other participants. These additional challenges in tilt control in the walking tasks were reflected in a 30.6% drop in the mean efficiency of ball path compared to the sitting conditions. It is interesting to note that while the mean completion time increased 22.1% in the walking conditions, the increase in error rate was almost three times higher (63.9% higher in the walking tasks compared to sitting). This represents an increase in the mean of 2.4 erroneously input characters per 25 characters of input. As seen in the error bars in Figure 4, the walking tasks showed the highest variability and the sitting two-handed grip the lowest. These results are consistent with a study of adults by Straker et al. (2009), who reported increased time for computer-related tasks when walking as opposed to sitting.

Across both walking and sitting conditions, the two-handed grip was shown to improve performance, reducing the mean task completion time, reducing the error rate, and increasing efficiency. A two-handed grip clearly increases the control the user has over the movement of the device and reduces the physical effort involved. It should be noted that the device used in this study was conducive to a one-handed grip: it included a textured rear cover to increase friction, had rounded edges, and had a relatively low weight of 340 g. By way of comparison, a 3rd generation WiFi iPad weighs 680 g. Two distinct single-handed grip techniques were observed during the study: gripping the edge of the device (Figure 7, top), and placing the flat of the hand across the rear of the device (Figure 7, bottom). This was an unexpected observation and, while exact details of this grip preference were not recorded, it was noted that the latter technique was adopted only by a small number of male participants, those with larger hands.

Figure 7. Different single-handed grip techniques: Gripping a single edge (top); Gripping opposing edges (bottom).

From observations during the study, screen glare (reflections from strong light sources nearby) did not appear to pose a problem for the participants. The experiment was carried out indoors on an overcast day, but glare may prove problematic for this interaction method when used outdoors, where tilting the device to reduce glare would have unintended input effects.

Aggregating the performance metrics gives an overall measure of the challenge of completing the tasks in the four conditions. All three metrics show highest performance in the sitting + two-handed grip condition, followed by sitting + one-handed grip, followed by walking + two-handed grip, and finally walking + one-handed grip. The challenge of interaction with a mobile device while walking is well known (e.g., Lin et al. 2007, Mizobuchi et al. 2005). In a walking scenario some form of adaptation may be necessary. Approaches to this include changing the interface layout (such as that used in Kane et al. 2008) or applying a correction process to compensate for the lack of accuracy (such as that used in Goel et al. 2012).

Given the findings for accuracy and speed, the use of tilt as opposed to touch for text input is promising. In touch input, it has been shown that greater force and greater impulse are needed when standing as opposed to sitting (Chourasia et al. 2013), so tilt may have an advantage in this space. The other clear reason for its further study is the possibility of blind typing, which is of interest to this user community, as teenagers seek to "send messages in class while avoiding detection" (Starner 2004, p. 4).

6. CONCLUDING REMARKS

The findings from this study have shown that teenagers can successfully use the tilt-based input method described in this paper to select targets on mobile devices under the four study conditions. More work is needed to determine how effective this tilt-input method is for "real world" text input where the user has to locate and select characters while constructing words and sentences. Future work will also include an evaluation of this technique on other sizes of mobile devices, along with exploration of the parameters used in the tilt-application, such as those that control the ball position in relation to tilt, and selection dwell time. The participants in the study were positive qualitatively about this method for tilt input and the prospect of using it on their own mobile phones. This suggests that teenagers may be willing to try such a facility if it were widely available.

7. REFERENCES

Browne, K., & Anand, C., 2011. An empirical evaluation of user interfaces for a mobile video game. Entertainment Computing, 3(1), pp. 1–10. https://doi.org/10.1016/j.entcom.2011.06.001

Chourasia, A. O., Wiegmann, D. A., Chen, K. B., Irwin, C. B., & Sesto, M. E., 2013. Effect of sitting or standing on touch screen performance and touch characteristics. Human Factors, 55(4), pp. 789–802. https://doi.org/10.1177/0018720812470843

Crossan, A., & Murray-Smith, R., 2004. Variability in wrist-tilt accelerometer based gesture interfaces. Proceedings of MobileHCI 2004, New York: ACM, pp. 144–155. https://doi.org/10.1007/978-3-540-28637-0_13

Gilbertson, P., Coulton, P., & Chehimi, F., 2008. Using "tilt" as an interface to control "no-button" 3-D mobile games. Computers in Entertainment, 6(3), pp. 1–13. https://doi.org/10.1145/1394021.1394031

Goel, M., Findlater, L., & Wobbrock, J. O., 2012. WalkType: Using accelerometer data to accommodate situational impairments in mobile touch screen text entry. Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems – CHI 2012, New York: ACM, pp. 2687–2696. https://doi.org/10.1145/2207676.2208662

Jones, E., Alexander, J., Andreou, A., Irani, P., & Subramanian, S., 2010. GesText: Accelerometer-based gestural text-entry systems. Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems – CHI 2010, New York: ACM, pp. 2173–2182. https://doi.org/10.1145/1753326.1753655

Kane, S. K., Wobbrock, J. O., & Smith, I. E., 2008. Getting off the treadmill: Evaluating walking user interfaces for mobile devices in public spaces. Proceedings of MobileHCI 2008, New York: ACM, pp. 109–118. https://doi.org/10.1145/1409240.1409253

Lin, M., Goldman, R., Price, K. J., Sears, A., & Jacko, J., 2007. How do people tap when walking? An empirical investigation of nomadic data entry. International Journal of Human-Computer Studies, 65(9), pp. 759–769. https://doi.org/10.1016/j.ijhcs.2007.04.001

MacKenzie, I. S., & Teather, R. J., 2012. FittsTilt: The application of Fitts' law to tilt-based interaction. Proceedings of the 7th Nordic Conference on Human-Computer Interaction – NordiCHI 2012, New York: ACM, pp. 568–577. https://doi.org/10.1145/2399016.2399103

Mizobuchi, S., Chignell, M., & Newton, D., 2005. Mobile text entry: Relationship between walking speed and text input task difficulty. Proceedings of MobileHCI 2005, New York: ACM, pp. 122–128. https://doi.org/10.1145/1085777.1085798

Partridge, K., Chatterjee, S., Sazawal, V., Borriello, G., & Want, R., 2002. TiltType: Accelerometer-supported text entry for very small devices. Proceedings of the ACM Symposium on User Interface Software and Technology – UIST 2002, New York: ACM, pp. 201–204. https://doi.org/10.1145/571985.572013

Sazawal, V., Want, R., & Borriello, G., 2002. The Unigesture approach: One-handed text entry for small devices. Proceedings of Human Computer Interaction with Mobile Devices – MobileHCI 2002, New York: ACM, pp. 256–270. https://doi.org/10.1007/3-540-45756-9_20

Starner, T., 2004. Keyboards redux: Fast mobile text entry. Pervasive Computing, 3(3), pp. 97–101. https://doi.org/10.1109/MPRV.2004.1321035

Straker, L., Levine, J., & Campbell, A., 2009. The effects of walking and cycling computer workstations on keyboard and mouse performance. Human Factors, 51(6), pp. 831–844. https://doi.org/10.1177/0018720810362079

Wigdor, D., & Balakrishnan, R., 2003. TiltText: Using tilt for text input to mobile phones. Proceedings of the ACM Symposium on User Interface Software and Technology – UIST 2003, New York: ACM, pp. 81–90. https://doi.org/10.1145/964696.964705

Yardi, S., & Bruckman, A., 2011. Social and technical challenges in parenting teens' social media use. Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems – CHI 2011, New York: ACM, pp. 3237–3246. https://doi.org/10.1145/1978942.1979422

-----

Footnotes

1. Social media, social life: How teens view their digital lives. A common sense media research study. (2012). Common Sense Media.

2. http://www.google.com/nexus/7/