MacKenzie, I. S. (2009). Citedness, uncitedness, and the murky world between. Extended Abstracts of the ACM Conference on Human Factors in Computing Systems - CHI 2009, pp. 2545-2554. New York: ACM.

Citedness, Uncitedness, and the Murky World Between

I. Scott MacKenzie

Dept. of Computer Science and Engineering

York University

Toronto, Canada M3J 1P3

mack@cse.yorku.ca

ABSTRACT

We test a recent claim in an opinion piece (interactions, May/June 2008, pp. 45-47) that publications by HCI researchers have little or no impact. The alleged "phenomenon of uncitedness" was not supported. An examination of all 443 papers in the CHI Proceedings (1991-1995), ACM TOCHI (1994-1998), and Human-Computer Interaction (1991-1995) found an average of 93.8, 106.7, and 80.4 citations per paper, respectively. The H-index as an impact measure is explained, with values given for members of the CHI Academy. Their mean H-index of 34.3 suggests that the group, taken as a whole, has had a significant impact on human-computer interaction.

Keywords: Citation analyses, H-index, research impact

ACM Classification Keywords: H5.m. Miscellaneous.

Introduction

A recent article in the ACM's bimonthly publication interactions carried an ominous title: HCI Impact and Uncitedness [6]. The article professed a general malaise in the execution and dissemination of HCI research. The central problem, we are told, is that a significant number, perhaps a majority, of research publications are never read or cited. In the article, Hopson [6] claims the following:

Sadly, most standard methods of communicating research results don't work very well. Academics have struggled for years to come to terms with the "phenomenon" of uncitedness, the proportion of published works that are never subsequently cited by other works. Depending on how it is measured and the particular field of research, estimates of uncitedness range from a mere 24 percent for some scientific fields, up to a startling 93 percent for the arts and humanities (p. 47)

Notably, no source is cited to back up the claim that between 24 and 93 percent of research publications are never cited.

In this alt.chi contribution, Hopson's claims are put to the test. Citations, as a measure of "impact", are tallied, critiqued, and examined. Samples are compiled for CHI conference publications, for journal publications, and for individual researchers. The recently proposed "H-index" as a measure of impact is explained, and its strengths and weaknesses are elaborated. Examples using CHI Academy members are given. As an introduction, some comments are offered on each sentence in the quotation above.

In the first sentence, we learn that "standard methods of communicating research results don't work very well". Really? There are two primary methods of communicating research results. One is through peer-reviewed archival publications. A salient characteristic of a research archive is that items persist. Published results may be tested, refined, extended, and even refuted, but the archive remains intact. Items are added, but not deleted. Of course, such is not the case for web postings: here today, gone tomorrow! All researchers of merit expend considerable effort keeping abreast of their field, and they do this largely through the study of published archival research. With the advent of Internet-accessible archives, this aspect of communicating research results has never been better. The other method of communicating results is through presentations at conferences or other gatherings of researchers. Few would dispute that such events are an excellent means for researchers to share and, more importantly, learn.

In the second sentence, we learn that academics have struggled for years with the "phenomenon of uncitedness". As an academic, I am unaware of any such struggle. Of course, if there were a phenomenon of uncitedness, a struggle might emerge, but I am equally unaware of this condition. Sure, citations to one's work are important. They indicate impact (see below), and it would be terrific to have numerous citations to every research publication that exists. That this is not so misses the point. Hopson's point is that there is a phenomenon of uncitedness: that a significant number of publications are not just lacking copious citations, but are void of citations. If true, that's a concern. More about this later.

In the third sentence, some stark numbers are put forward. The word "mere" in "a mere 24%" seems odd. Indeed, it is a significant concern if 24% of research publications are never cited. That the rate is as high as 93% is a disaster, if true. Of course, the qualifier "up to" is an out – like retailers advertising "up to 70% off". So, what is the extent of uncitedness in HCI research? Let's see.

The Search in Research

The (not so) Good Old Days

As a graduate student in the 1980s, I spent countless hours searching through the volumes of citation indices at my institution's resource rooms. I was researching Fitts' law [4]. Needless to say, it was imperative to "get up to speed" on what researchers before me had done. One slip or oversight and disaster loomed. Can you imagine learning from a referee or an examiner that one's contribution to the field is, in fact, "old news"? Were I a graduate student today, chances are I'd ignore those printed volumes of citation indices.

Citations Just a Button-Click Away

The Internet has brought considerable change to many aspects of our lives, not the least of which is the pursuit of scholarship. The world's massive archive of published research is, to a large extent, accessible and searchable from an office or home computer. Simply enter a topic of interest into a search engine such as Google and a wealth of information is returned. Refine the search, and the result narrows to the specific topic of interest.

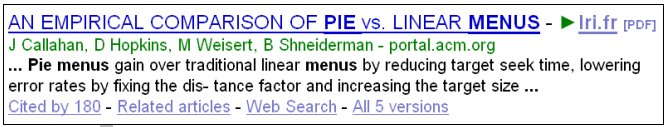

Researchers wishing to avoid blogs or sites of dubious or commercial intent often prefer Google Scholar (http://scholar.google.ca/) to Google. The results returned are, for the most part, publications. For example, consider the search term "pie menus". Figure 1 shows the first of ten thousand or so entries returned by Google Scholar. Click on the link and the complete details of the paper are given. In many cases, there is also a link to a PDF version of the paper.

Figure 1. First item returned from Google Scholar using "pie menus" as the search term. The article has 180 citations.

In researching a topic, one is usually familiar with a seminal work, such as Callahan et al.'s paper [1] on pie menus. What is often sought is a list of other papers citing this work. With Google Scholar, this list is just a button click away. If there is at least one such citation, the output will contain a "Cited by" link (bottom-left in Figure 1).

Citation Counts

With 180 citations, Callahan et al.'s paper on pie menus is clearly a significant work. In fact, citation count is so important that Google Scholar uses this statistic to sort the search results. Citation count even trumps search term count. In other words, a publication with many occurrences of the search term is not automatically deemed highly relevant. It is the citation count that matters most.
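The ordering described above can be modelled as a sort whose primary key is citation count and whose secondary key is the number of search-term occurrences. This is a minimal sketch under stated assumptions: Google Scholar's actual ranking method is not published, and the `Result` type, its fields, and the sample titles are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Result:
    # Hypothetical record for one search result.
    title: str
    citations: int   # the "Cited by" count
    term_hits: int   # occurrences of the search term in the document


def rank_results(results: list[Result]) -> list[Result]:
    """Sort by citation count first; term occurrences only break ties.

    A simplified model of the behaviour described in the text,
    not Google Scholar's real (unpublished) ranking algorithm.
    """
    return sorted(results, key=lambda r: (r.citations, r.term_hits), reverse=True)


if __name__ == "__main__":
    results = [
        Result("Term-heavy paper", citations=5, term_hits=90),
        Result("Seminal paper", citations=180, term_hits=3),
        Result("Minor paper", citations=5, term_hits=40),
    ]
    for r in rank_results(results):
        print(r.citations, r.title)
```

In this model the seminal paper is listed first even though it mentions the search term least often.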

Other Services

Google Scholar is not the only service for document and citation searching. It seems there are two categories of such services: (i) fee-for-use services using a proprietary database, and (ii) front-end interfaces built upon Google Scholar. Fee-for-use services include the ACM Digital Library (http://portal.acm.org), IEEE Xplore (http://ieeexplore.ieee.org), Elsevier Scopus (http://www.scopus.com), and Thomson Reuters ISI Web of Knowledge (http://isiwebofknowledge.com).

The main problem with these services is that they cast a net limited to documents within their internal sphere of access – their proprietary database. Google Scholar, on the other hand, casts a wide and transparent net in seeking out documents. The search is performed on the entire Internet. Results are compiled without prejudice to a document's origin.

Front ends for Google Scholar are free. They include Harzing's Publish or Perish (http://www.harzing.com) and Roussel's scHolar index (http://insitu.lri.fr/~roussel/). Their main services are an improved user interface and the tallying of relevant statistics, such as a researcher's H-index (see below).

False Negatives, False Positives

The Callahan et al. paper mentioned above is published by the ACM. Although the document is easily found in the ACM Digital Library (DL), the citation count there is only 51, far short of the 180 citations found by Google Scholar.1 Evidently, for this paper, the results returned by the ACM DL contain a preponderance of "false negatives" – documents that exist and are relevant but are not found. This problem is endemic to the fee-for-use services. In fact, it appears false negatives (legitimate documents not returned) outnumber true positives (legitimate documents returned). This is a serious limitation. Researchers investigating a topic don't care about the source of related work: they want all of it!
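The arithmetic behind "false negatives outnumber true positives" can be made explicit. The sketch below assumes, as the paragraph implicitly does, that Google Scholar's 180 citations are the reference set and that every citation the ACM DL finds is among them (Google Scholar's own false positives are ignored). The function name `coverage` is hypothetical.

```python
def coverage(reference_count: int, service_count: int) -> dict[str, float]:
    """Compare one service's citation count with a broader reference count.

    Assumes every citation the service finds is also in the reference set,
    so the whole shortfall counts as false negatives.
    """
    if reference_count <= 0:
        raise ValueError("reference_count must be positive")
    if not 0 <= service_count <= reference_count:
        raise ValueError("service_count must lie between 0 and reference_count")
    false_negatives = reference_count - service_count
    return {
        "true_positives": service_count,
        "false_negatives": false_negatives,
        "recall": service_count / reference_count,
    }


# Callahan et al. [1]: 180 citations in Google Scholar, 51 in the ACM DL.
stats = coverage(reference_count=180, service_count=51)
print(stats["false_negatives"], round(stats["recall"], 2))  # 129 0.28
```

Under these assumptions the ACM DL misses 129 citing documents and finds only about 28% of them.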

The flip side is that the wide net cast by Google Scholar may return "false positives" – documents found but of a dubious nature. In practice, false positives seem to be relatively infrequent with Google Scholar. Well down in the list of 180 citing papers for the pie menus example, one still finds legitimate published papers – ones not returned in the ACM DL search. The papers may be book chapters or papers published in journals or conferences not indexed in the ACM DL. Even toward the end of the list, the results are largely PDF papers, rather than, say, web postings. Some are unpublished or in languages other than English. A few false positives is a small price to pay if one also knows there are few false negatives.

Citations to HCI Papers

So, what is the state of affairs in Human-Computer Interaction? Are HCI papers cited frequently, moderately, infrequently, or, as Hopson [6] suggests, rarely or not at all? In this section, some relevant statistics are presented. We begin with the pre-eminent conference in HCI – the ACM's Conference on Human Factors in Computing Systems, or "CHI" as it is commonly known. We then examine citations to papers published in two well-known HCI journals, the ACM's Transactions on Computer-Human Interaction (TOCHI) and Taylor and Francis's Human-Computer Interaction (formerly published by Erlbaum).

To be fair to the authors, we do not and should not expect papers to be cited immediately. It takes time for research to disseminate and even more time for subsequent research to appear that cites related previous research. Our analyses, therefore, are on papers published 10+ years ago.

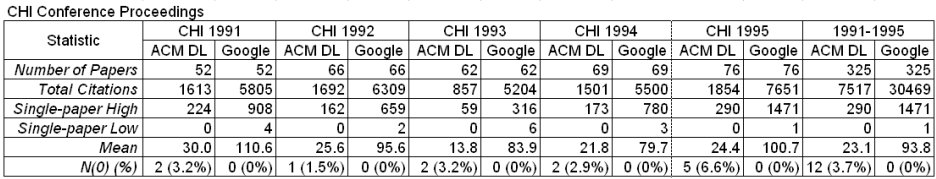

Citations to CHI Conference Papers

Figure 2 gives a range of citation statistics for all full papers published in the CHI proceedings over a five-year span, from 1991 to 1995. The statistics were compiled separately using searches with the ACM DL and with Google Scholar.

Figure 2. Citation analysis using the ACM Digital Library and Google Scholar for papers published in the ACM's Conference on Human Factors in Computing Systems ("CHI") from 1991 to 1995. The last row gives the number and percent of papers with zero citations.

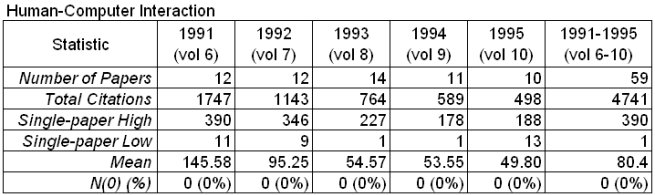

Figure 3. Citation analysis as per Figure 2, except for papers published in the ACM's Transactions on Computer-Human Interaction from 1994 (vol. 1) to 1998 (vol. 5).

Figure 4. Citation analysis as per Figure 2, except for papers published in the Taylor and Francis journal Human-Computer Interaction (formerly published by Erlbaum) from 1991 to 1995.

The results in Figure 2 are impressive. Of the 325 papers published in the CHI Proceedings from 1991 to 1995, the mean number of citations per paper is 93.8, as returned by Google Scholar. The lower figure of 23.1 citations per paper returned by the ACM DL is simply wrong as a total, since it only encompasses citations from papers in the ACM DL. For the ACM DL and other fee-for-use services, false negatives are the norm, rather than the exception. As for Hopson's claim of uncitedness, it does not hold up. All of the 325 publications have at least one citation. While the lower-ranking entries may be infrequently cited, Hopson's claim that, as a minimum, 24% of all papers are never cited is unsupported – at least for HCI's "CHI" conference.
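The statistics reported in Figure 2 (mean citations per paper, and the number and percent of uncited papers) can be computed from a list of per-paper citation counts. A minimal sketch follows; the function name is hypothetical and the counts are made-up toy values, not the CHI 1991-1995 data.

```python
from statistics import mean, median


def citation_summary(counts: list[int]) -> dict[str, float]:
    """Summarize per-paper citation counts for one venue and period."""
    if not counts:
        raise ValueError("need at least one paper")
    uncited = sum(1 for c in counts if c == 0)
    return {
        "papers": len(counts),
        "mean": mean(counts),
        "median": median(counts),
        "max": max(counts),
        "min": min(counts),
        "uncited": uncited,
        "uncited_pct": 100.0 * uncited / len(counts),
    }


# Toy counts for illustration only (not the CHI 1991-1995 data).
toy_counts = [0, 3, 12, 40, 95, 310]
summary = citation_summary(toy_counts)
print(summary["uncited"], summary["median"])  # 1 26.0
```

Reporting the median alongside the mean is a choice of this sketch: a handful of very highly cited papers can pull the mean well above the typical paper, as the toy data shows (mean of about 76.7, median of 26).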

A brief look at two of HCI's most prestigious journals – the ACM's Transactions on Computer-Human Interaction and Taylor and Francis's Human-Computer Interaction – tells a similar story. During the first five years of publication of the ACM's TOCHI, from 1994 to 1998, 59 papers were published (Figure 3). At the time of writing, these papers average 106.7 citations each, as returned by Google Scholar. As with the CHI conference papers, 100% of the papers have at least one citation.

Taylor and Francis's journal Human-Computer Interaction bears similar statistics (Figure 4). From 1991 to 1995, there were 59 papers published, logging an average of 80.4 citations per paper. No statistics are available through the ACM DL since this journal is not included in the ACM's database. This is a good example of the false-negative problem endemic to fee-for-use services with proprietary databases. Any citation appearing in a paper published in Human-Computer Interaction will not appear in statistics returned by searches in the ACM DL. Given the high regard for the journal Human-Computer Interaction, this is a serious drawback in using the ACM DL as a tool for citation searches on HCI papers. Despite the potential for false positives, the absence, or near absence, of false negatives makes Google Scholar the tool of choice for searches of scholarly publications.

Citation Counts

There was a recent posting to the chi-announcements@acm.org mailing list from Dianne Murray, General Editor, Interacting with Computers. In announcing a Most Cited Paper award, Murray noted that "The only objective and transparent metric that is highly correlated with the quality of a paper is the number of citations."2 Few would argue. However, only recently have citation counts become readily available. Before services like Google Scholar emerged, citation counts were difficult to obtain.

Publishers of academic journals, competing for prestige and library subscriptions, have long made the extra effort to compile citation statistics and to promote their products (journals) via "impact factors" – statistics computed from the citation counts to papers in their journal collection. However, citation counts on conference papers or on individual researchers were never conveniently available in the pre-Internet and pre-Google days. Due to this, a murky world of impact evaluation prevailed (and, to some extent, still does). Typically, the impact of a researcher was inferred indirectly from the quantity of publications or from the impact of the journals in which the researcher's papers were published. Today, this practice is highly outmoded: the citation statistics for publications by individual researchers are easily and directly available using, for example, services that access Google Scholar. Even granting agencies are now requesting that applicants list not only their publications but also the number of citations for each publication.3
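The text does not say which formula publishers use. One widely used definition is the two-year impact factor reported in Thomson Reuters' Journal Citation Reports: citations received in year Y by items a journal published in Y-1 and Y-2, divided by the number of citable items it published in those two years. A minimal sketch with hypothetical numbers; note that the result describes the journal as a whole and says nothing about any individual paper or researcher in it.

```python
def two_year_impact_factor(citations_in_year: int, citable_items: int) -> float:
    """Two-year journal impact factor for some year Y.

    citations_in_year: citations received during Y by items the journal
        published in Y-1 and Y-2.
    citable_items: number of citable items the journal published in Y-1 and Y-2.
    """
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations_in_year / citable_items


# Hypothetical journal: 80 citable items in 2006-2007, cited 240 times during 2008.
print(two_year_impact_factor(240, 80))  # 3.0
```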

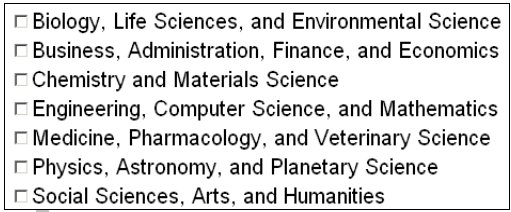

To search on a person, rather than a topic, one simply adds the prefix "author:" to a Google Scholar search term. There are several mechanisms to narrow the search (i.e., reduce false positives). One is to include initials (e.g., author:"j smith" or author:"jk smith"). Another is to specify the subject area(s) via advanced search options. The subject areas are shown in Figure 5.
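Because a researcher's initials may appear differently across publications, it can help to prepare one query per variant of the initials+surname form shown above. The sketch below only builds query strings in the `author:"initials surname"` form from the text; it sends nothing to Google Scholar, does not model the subject-area restriction (set through the advanced search options), and the function name is hypothetical.

```python
def author_queries(surname: str, initials_variants: list[str]) -> list[str]:
    """Build one author:"initials surname" query string per spelling of the initials.

    Only strings are produced; nothing is sent to Google Scholar.
    """
    surname = surname.strip().lower()
    queries: list[str] = []
    for initials in initials_variants:
        q = f'author:"{initials.strip().lower()} {surname}"'
        if q not in queries:  # skip duplicate variants
            queries.append(q)
    return queries


print(author_queries("Smith", ["J", "JK"]))
# ['author:"j smith"', 'author:"jk smith"']
```

The results of each query still need to be inspected by hand, since a shorter set of initials widens the net and admits more false positives.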

Figure 5. A search in Google Scholar may be narrowed by specifying a subject area.

As with searches on a topic, the results are returned sorted by the number of citations.

Researchers inclined to complain about the "noise" (false positives) in Google Scholar's results are not likely to do so when the search is aimed at gathering citation counts on their own published papers. The near absence of false negatives means searches using Google Scholar to gather citation counts are generous. If a paper exists that cites another paper, there is a high probability it will be found and included in the citation counts returned by Google Scholar. Computing accurate citation counts may require some manual filtering, however, to eliminate false positives.
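One way the manual filtering step might look, as a minimal sketch under stated assumptions: entries whose titles match after normalization are treated as one document (for instance, a preprint and its published version, if both happen to be listed), and titles judged by hand to be false positives are dropped. The function names and sample titles are hypothetical, and merging on title alone would wrongly combine distinct papers that share a title.

```python
import re


def normalize_title(title: str) -> str:
    """Lower-case a title and collapse punctuation and whitespace."""
    return " ".join(re.sub(r"[^\w\s]", " ", title.lower()).split())


def filtered_citation_count(citing_titles: list[str], rejected: set[str]) -> int:
    """Count distinct citing documents after manual filtering.

    citing_titles: titles listed under a paper's "Cited by" link.
    rejected: titles judged by hand to be false positives.
    """
    rejected_norm = {normalize_title(t) for t in rejected}
    kept = {normalize_title(t) for t in citing_titles} - rejected_norm
    return len(kept)


titles = [
    "Pie menus revisited",
    "Pie Menus Revisited.",        # same document listed twice
    "A course syllabus",           # judged not to be a publication
    "Marking menus in practice",
]
print(filtered_citation_count(titles, rejected={"A course syllabus"}))  # 2
```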

Impact

So, what does one do with citation counts? How does citation count relate to impact? The answers to these questions are subject to considerable discussion and debate among academics and researchers. Clearly, publishing research papers is important. In fact, some go further: "the goal of scientific research is publication" [3, p. ix]. Of course, having a long list of publications means very little if the papers are not read, and then cited, by other researchers. So, publication per se is just the beginning. For impact, more is required. For impact, one's papers must be cited by other researchers.

In this section, some common measures that turn citation counts into indicators of impact are introduced. Following this, some caveats are noted. We finish with a look at the impact of CHI Academy members using what is presently the most accepted single measure of impact – the H-index.

H-index

If a researcher's publications are ranked by the number of citations to each paper, the H-index is the largest rank h at which the paper in that position still has at least h citations. In other words, a researcher with H-index = n has n publications each with n or more citations, while each remaining publication has no more than n citations. Physicist J. E. Hirsch first proposed the H-index in 2005 [5]. The H-index quantifies in a single number both research productivity (number of publications) and the overall impact of a body of work (number of citations).
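The definition translates directly into code: rank the papers from most to least cited and walk down the ranking while each paper's count is at least its rank. A minimal sketch; the function name and citation counts are illustrative.

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that h publications each have at least h citations."""
    ranked = sorted(citations, reverse=True)  # most-cited first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break  # counts only fall and ranks only rise, so no later paper qualifies
    return h


print(h_index([10, 8, 5, 4, 3]))  # 4
print(h_index([25, 8, 5, 3, 3]))  # 3
```

In the first list, the fifth paper has only 3 citations, so the index stops at 4; in the second, the 25 citations of the top paper do not lift the index above 3.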

Of course, the H-index is subject to distribution anomalies. Consider two researchers with the same H-index. One has many citations to one or two publications and very few citations to the remaining papers. The other has no highly cited publications, but many receiving a modest number of citations. Which researcher has greater impact? We leave this to the reader to consider.
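The distribution anomaly is easy to reproduce. The sketch below uses two hypothetical citation records (invented for illustration) built so that both yield the same H-index while their total citations differ by more than a factor of ten.

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that h publications each have at least h citations."""
    h = 0
    for rank, count in enumerate(sorted(citations, reverse=True), start=1):
        if count < rank:
            break
        h = rank
    return h


# Hypothetical citation records for two researchers.
researcher_a = [400, 250, 12, 6, 5, 1, 1, 0]  # two highly cited papers
researcher_b = [9, 8, 7, 6, 5, 5, 4, 4, 4]    # many modestly cited papers

for name, record in (("A", researcher_a), ("B", researcher_b)):
    print(name, "h =", h_index(record), "total citations =", sum(record))
# A h = 5 total citations = 675
# B h = 5 total citations = 52
```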

There are other anomalies. Consider, again, two researchers with the same H-index. One received his PhD in 1979 and has publications dating back 30 years. The other received her PhD in 1999 and has publications dating back only 10 years. Clearly, there is a difference, but it is not captured by the H-index. To smooth over these and other anomalies of the H-index, a variety of related impact measures exist. These are summarized in Figure 6. For completeness, the H-index is also included.

| Impact Measure | Description |
|---|---|
| H-index | The largest number, h, such that h papers have at least h citations each (the point where the rank equals the number of citations) |
| G-index | The largest number, g, such that the top g articles together received at least g² citations |
| HC-index | "Contemporary" H-index: adds parameterized age-weighting to citations; i.e., citations to recent papers count more than citations to older papers |
| HI-index | "Individual" H-index: divides each citation count by the number of authors on a publication |
| AW-index | "Age-weighted" index: the number of citations is divided by the age of the publication, computed over an entire body of work |

Figure 6. Some common impact measures (source: http://www.harzing.com)
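Three of the measures in Figure 6 can be sketched directly from their descriptions. This is a minimal illustration under stated assumptions, not Harzing's implementation: the function names are hypothetical, the G-index is capped at the number of papers, author counts are assumed to be at least 1, and a paper's age is counted as current year minus publication year plus one so that current-year papers do not divide by zero. The HC-index is omitted because its age-weighting parameters are not given in the table.

```python
def g_index(citations: list[int]) -> int:
    """Largest g such that the top g papers together have at least g**2 citations.

    This sketch caps g at the number of papers.
    """
    g, running = 0, 0
    for rank, count in enumerate(sorted(citations, reverse=True), start=1):
        running += count
        if running >= rank * rank:
            g = rank
    return g


def h_index_per_author(papers: list[tuple[int, int]]) -> int:
    """H-index over citation counts divided by author count (HI-index row).

    papers: (citations, number_of_authors) pairs, with number_of_authors >= 1.
    """
    normalized = sorted((c / n for c, n in papers), reverse=True)
    h = 0
    for rank, value in enumerate(normalized, start=1):
        if value < rank:
            break
        h = rank
    return h


def age_weighted_rate(papers: list[tuple[int, int]], current_year: int) -> float:
    """Sum of citations divided by publication age (AW-index row).

    papers: (citations, publication_year) pairs. Age is counted as
    current_year - year + 1, an assumption of this sketch.
    """
    return sum(c / (current_year - year + 1) for c, year in papers)


print(g_index([10, 8, 5, 4, 3]))                                        # 5
print(h_index_per_author([(10, 2), (8, 1), (5, 5), (4, 2), (3, 1)]))    # 3
print(age_weighted_rate([(36, 2000), (12, 2006)], current_year=2008))   # 8.0
```

The record [10, 8, 5, 4, 3] has an H-index of 4 but a G-index of 5, because the G-index credits the surplus citations of the top papers. Harzing's software reports an AW-index derived from the age-weighted sum; the exact derivation is not stated in the table, so the sketch stops at the sum.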

Caveats

There are a few caveats in using citation counts as an indicator of impact [7]. For example, it is silly to consider a citation that serves only to identify a flaw in previous research as indicating impact of that previous research (unless one separately gauges positive and negative impact). While such citations no doubt exist, they are likely a small minority of all citations.

Another caveat concerns self-citations – citations by someone who (co-)authored the original paper. Although a modest amount of self-citation is expected and unavoidable, clearly such citations do not indicate impact of the cited work. In recognition of this, some citation services, such as Elsevier's Scopus, include an option to exclude self-citations in compiling citation statistics (see Figure 7).

So, what is a "good" value for H-index? This is not a debate to be entered into here. However, one suggestion is that a modest H-index is equal to a researcher's years of service (e.g., years since PhD).4 Outstanding researchers should substantially exceed this.
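The rule of thumb can be expressed as a ratio of H-index to years since PhD, where a value of 1 marks the "modest" benchmark. Hirsch [5] discusses a similar ratio, m, based on years since a researcher's first publication rather than the PhD. A minimal sketch: the function names are hypothetical, and the example assumes the two researchers described earlier (PhD in 1979 and 1999) each have an H-index of 20, with 2009 as the current year to match the 30 and 10 years of publications given in the text.

```python
def h_per_year(h: int, phd_year: int, current_year: int) -> float:
    """H-index divided by years since PhD."""
    years = current_year - phd_year
    if years <= 0:
        raise ValueError("current_year must be after phd_year")
    return h / years


def meets_modest_benchmark(h: int, phd_year: int, current_year: int) -> bool:
    """Rule of thumb from the text: a modest H-index equals years since PhD."""
    return h >= current_year - phd_year


# Hypothetical: both researchers have h = 20 in 2009.
for phd_year in (1979, 1999):
    print(phd_year, round(h_per_year(20, phd_year, 2009), 2),
          meets_modest_benchmark(20, phd_year, 2009))
# 1979 0.67 False
# 1999 2.0 True
```

The same H-index falls short of the benchmark for the senior researcher and exceeds it twofold for the junior one, which is the difference the plain H-index does not capture.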

Figure 7. Self-citations may be excluded in compiling citation statistics (from Elsevier's Scopus).

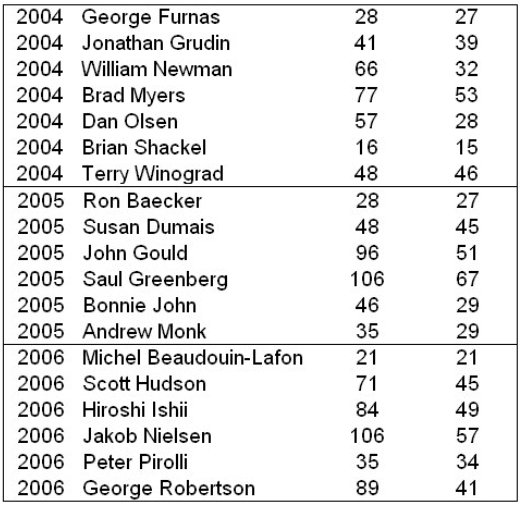

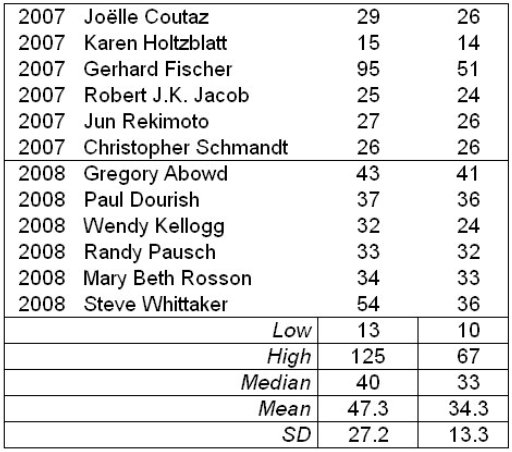

CHI Academy – Looking Good

The ACM's SIGCHI recognizes outstanding research contributions, or impact in the field, by inducting members into the CHI Academy. The process began in 2001. As of 2008, there were 49 members. So, how do CHI Academy members stack up in terms of their H-indices? Quite well, it seems. Figure 8 gives the H-index for all 49 CHI Academy members. As an illustration of the difficulty in computing H-index, two values are given for each person. The first used an initials+surname search (e.g., author:"sk card"). The second further constrained the search using "Engineering, Computer Science, and Mathematics" as the subject area. Adding a researcher's initials helps eliminate false positives for common surnames. However, if a researcher's initials appear inconsistently in their publications (e.g., WAS Buxton vs. W Buxton vs. B Buxton), the effect is to eliminate true positives.

Figure 8. H-index values for members of the CHI Academy. Values were obtained using Google Scholar via Roussel's scHolar index (http://insitu.lri.fr/~roussel/). Values in the first H-index column (1) were obtained using an initials+surname search (e.g., author:"sk card"). Values in the second H-index column (2) were further narrowed by specifying "Engineering, Computer Science, and Mathematics" as the subject area.

Adding a subject area designation had a dramatic effect for some entries (e.g., Kyng, Robertson, or Fischer), but little effect for others. Where the effect is large, it simply means that someone else with the same initials+surname is well published and well cited in another field of research. Of course, this process could also eliminate true positives if the researcher's publications are sometimes indexed in other subject areas, as may occur in multi-disciplinary fields such as HCI.

There are, of course, some lingering false positives that could be identified with further inspection of the results. For example, should there be a second "S Greenberg" or "S Hudson" publishing in engineering, computer science, or mathematics, their citation counts would also be included.

The results in Figure 8 are impressive. Using the values in the right-hand column, CHI Academy members have an average H-index of 34.3. This is quite good. Loosely speaking, it means that a typical member has about 34 publications with at least 34 citations each. Of course, the top-ranked publications have many more. For example, Card et al.'s The Psychology of Human-Computer Interaction [2] has over 3,000 citations!

Conclusion

We have put to rest Hopson's claim of a general malaise in HCI research – that between 24% and 93% of research publications are never read or cited [6]. We examined all 443 papers published in the CHI Conference Proceedings (1991-1995), the ACM Transactions on Computer-Human Interaction (1994-1998), and the journal Human-Computer Interaction (1991-1995). For all three venues, the mean number of citations per paper was quite good (93.8, 106.7, and 80.4, respectively). While not all papers make a major contribution – nor is this expected – all have at least some citations.

Acknowledgements

The author wishes to thank Stella Atkins, Ed Lank, Kari-Jouko Räihä, Janet Read, Stacey Scott, and Wolfgang Stuerzlinger for helpful discussions on citation statistics and researcher impact.

References

1. Callahan, J., Hopkins, D., Weiser, M., and Shneiderman, B., An empirical comparison of pie vs. linear menus, Proceedings of the CHI '88 Conference on Human Factors in Computing Systems (New York: ACM, 1988), 95-100. https://doi.org/10.1145/57167.57182

2. Card, S. K., Moran, T. P., and Newell, A., The psychology of human-computer interaction. Hillsdale, NJ: Erlbaum, 1983. https://doi.org/10.1201/9780203736166

3. Day, R. A. and Gastel, B., How to write and publish a scientific paper, 6th ed. Westport, CT: Greenwood Publishing, 2006.

4. Fitts, P. M., The information capacity of the human motor system in controlling the amplitude of movement, Journal of Experimental Psychology, 47, 1954, 381-391. https://doi.org/10.1037/h0055392

5. Hirsch, J. E., An index to quantify an individual's scientific research output, Proceedings of the National Academy of Sciences, 102, 2005, 16568-16572. https://doi.org/10.1073/pnas.0507655102

6. Hopson, J., HCI impact and uncitedness, interactions, May/June 2008, 45-47. https://doi.org/10.1145/1353782.1353794

7. Parnas, D. L., Stop the numbers game, Communications of the ACM, 50, 2007, 19-21. https://doi.org/10.1145/1297797.1297815

-----

Footnotes

1. Citation counts and other statistics in this paper were compiled during the fall of 2008. They are subject to change as more publications emerge.

2. Posted to chi-announcements@acm.org Wednesday Oct 8, 2008.

3. An example is the Government of Ontario's Early Researcher Award Program. Applicants must indicate the number of citations for each publication listed.

4. http://en.wikipedia.org/wiki/Hirsch_number