Varghese Jacob, S., & MacKenzie, I. S. (2018). Comparison of feedback modes for the visually impaired: Vibration vs. audio. Proceedings of 20th International Conference on Human-Computer Interaction - HCII 2018 (LNCS 10907), pp. 420-432. Berlin: Springer. doi:10.1007/978-3-319-92049-8_30

Abstract. Mobile computing has brought a shift from physical keyboards to touch screens. This has created challenges for the visually impaired. Touch screen devices offer few tactile cues for the visually impaired, thus requiring alternative ways to improve accessibility. In this paper, we examine the use of vibration and audio as alternative ways to provide location guidance when interacting with touch screen devices. The goal was to create an interface where users can press on the touch screen and take corrective actions based on non-visual feedback. With vibration feedback, different types of vibration indicated the proximity to the goal; with audio feedback, different tones did so. Based on the feedback, users were required to find a set of predetermined buttons. User performance in terms of speed and efficiency was gathered. It was determined that vibration feedback took on average 41.3% longer than audio in terms of time to reach the end goal. Vibration feedback was also less efficient than audio, taking on average 35.2% more taps to reach the end goal. Even though the empirical evidence favored audio, six out of 10 participants preferred vibration feedback due to its benefits and usability in real life.

Comparison of Feedback Modes for the Visually Impaired: Vibration vs. Audio

Sibu Varghese Jacob & I. Scott MacKenzie

York University

Dept of Electrical Engineering and Computer Science

Toronto, Canada

sibuvjacob@gmail.com, mack@cse.yorku.ca

Keywords: Mobile User Interface, Feedback Mode, Vibration, Audio, Visually Impaired.

1 Introduction

Technology plays a significant role in most people's lives. An example is the constantly evolving field of computing. Over the years, computing has shifted towards mobility, with mobile computing arguably one of the greatest innovations of our time. From being stuck on a desktop computer and confined to a desk, we are now on the move and using ever-smaller devices.

In mobile computing, user interface (UI) design is of interest as it directly involves the user's satisfaction and ability to complete tasks. Designing a user interface is challenging since it requires optimizing the interface to be aesthetically pleasing while at the same time maintaining the desired functionality, without hindrances.

Mobile user interfaces are especially challenging due to the added constraints to support mobility. For example, there is a need to fit considerable information onto a small screen. Mobile user interfaces should also enable the user to perform tasks with minimal effort in diverse environments. As much as mobile user interfaces have evolved, there are still improvements needed. One field of interest is improving the accessibility features of mobile user interfaces to accommodate different characteristics of humans, including those with special needs. One of the most essential pillars of achieving accessibility is providing quality feedback to the user. Feedback can be provided using auditory, visual, and tactile forms, where each has advantages and disadvantages. This is particularly important for accessibility since appropriate feedback can guide users with extra information or even act as a form of alert.

Android mobile devices increase accessibility at the system level. As seen in Fig. 1, Android has a built-in feature called TalkBack, which provides spoken feedback. Spoken feedback is meant to assist blind and low-vision users who are unable to look at the screen. In general, this might be a good idea and should work most of the time, but issues arise when the user is in a crowded area where the noise level is higher than usual. Another situation where TalkBack is not effective is for users who are hearing impaired. These types of scenarios highlight the need to provide feedback through other means.

Fig. 1. Android TalkBack feature.

Since visually impaired users are unable to look at the screen, they are challenged in utilizing device functions such as navigation, data entry, accepting/declining actions, etc. The topic of this paper is to explore methods to assist the visually impaired in achieving tasks without looking at the screen. The goal of the user study presented herein is to examine a task that avoids visual feedback and instead relies on vibration or audio feedback. To achieve this, various forms of vibratory and audio feedback will be used to guide the user towards a goal. The feedback should enable the user to perform a variety of tasks in different types of applications.

The methodology used to guide a visually impaired user is as follows. A visually impaired user wishes to press a button on the screen to proceed. Since the user is unaware of where the button is located s/he would press the screen at various locations. For each press the user interface determines if a goal button is pressed. If the user presses the wrong location (an alternative button), different variations of vibratory or audio feedback are provided. As the user gets closer to the goal, the vibratory/audio feedback differs, thus informing the user that s/he is getting closer to the goal. The functionality can also be implemented at the operating system or application level. This would be beneficial when the user's goal is unknown. In these forms of implementation all the possible actions that can be performed by the interface would be given variations of vibration/audio feedback and would be used to guide the user to the desired end goal. The user can determine if s/he wants to perform the action that was guided by the user interface.

2 Related Work

An examination of tactile feedback for mobile interaction was conducted by Brewster et al. [1]. They were interested in mitigating the difficulty in using touch-screen keyboards on personal digital assistants (PDAs). Their research was conducted in situations where users were mobile, motivated by the observation that users make more errors when subjected to external factors such as shaking. The first phase of the study was conducted in a lab setting, where participants entered poems into a PDA device. With tactile feedback added, users were able to enter a greater amount of text with fewer errors. The second phase was conducted on an underground train. With tactile feedback present, participants were better able to detect errors and take corrective actions. Qualitative responses strongly favored the presence of tactile feedback.

A study by Shin et al. [5] examined tactile feedback for graphical buttons on touch devices. Different tactile patterns were designed and evaluated. The goal was to provide a sensation of pressing an actual physical button on a device that only has a touch screen. To extend the range of tactile sensations, new hardware was designed implementing six tactile patterns. The participants were asked to rank the different patterns based on which provided the most realistic sense of touching a physical button. From the different patterns, a Touch+Release pattern scored the highest, with participants ranking it the most realistic in providing the feel of clicking a real button. The participants also stated that using vibration reminded them of a warning.

Chang and O'Sullivan [2] conducted a study on audio-haptic feedback in mobile phones aiming to improve the user interface through audio-haptic feedback. The study presented ways to create different types of audio-haptic feedback. In order to test the theory, 30 participants were given a haptic phone and a non-haptic phone and were asked to play ring tones as well as navigate using the menu keys. Participant ranking indicated a preference for the feel of the interaction in the presence of audio-haptic feedback. The study also found audio-haptic feedback improved the "perception of audio quality".

A set of audio-based multi-touch interaction techniques for blind users to properly interact with touch screens was studied by Kane et al. [3]. The motivation for the study arose from the fact that touch-screen devices are hard to use for the visually impaired since users must look at the screen to determine the location of UI elements. Elements in a touch screen cannot be felt to the degree of physical elements such as buttons. To improve the accessibility for visually-impaired users, Slide Rule was designed. Slide Rule works by creating a touch surface that talks, and therefore in practice transforms the surface to a "non-visual interface". Slide Rule achieves this by using several basic gesture interactions such as a "one-finger scan" for browsing a list, a "second-finger tap" for selection, a "flick gesture" for additional actions, and an "L-select gesture" which provides hierarchical information. To perform the test, 10 blind users were asked to perform tasks on two devices, one enabled with Slide Rule. The tasks included making a phone call, using email, etc. Accuracy and speed were measured. The study determined that Slide Rule reduced task completion time, compared to a device with physical buttons. However, there was a corresponding increase in errors. The study concluded that touch screens could be used as a form of "input technology for blind users".

Yatani and Truong [6] studied the use of tactile feedback to provide visually impaired users with "semantic information". The motivation arose from the fact that mobile touch-screen devices do not have tactile feedback and thus users are unable to understand the information presented without looking at the screen. To alleviate this problem, a new type of tactile feedback called SemFeel was developed. SemFeel uses different vibratory patterns to convey meaningful information on the location of user touch points. The system uses five vibration motors. SemFeel used vibratory feedback instead of audio feedback so the device could operate quietly and without interrupting others. Two experiments were conducted. The first asked the user to match the vibration pattern produced with a pattern shown on a screen. The goal was to gauge the accuracy of distinguishing patterns. The results indicated that participants had difficulty with counter-clockwise patterns. The second experiment focused on user performance, namely input accuracy, and compared SemFeel with a UI without tactile feedback and a UI with tactile feedback provided by one source. The task consisted of entering a set of numbers using a numeric keyboard. Three types of feedback were compared: no tactile feedback, single tactile feedback (using a single vibration motor), and multiple tactile feedback (SemFeel). The results demonstrated that SemFeel had higher accuracy when compared to no tactile feedback and single-motor tactile feedback. It was also concluded that using SemFeel shows promise for interacting without looking at a display, thus aiding visually-impaired users.

Li et al. [4] conducted a study to determine the effects of replacing a visual interface with auditory feedback. A prototype called BlindSight was developed. The aim was to eliminate the need to look at the screen for accessing a phone's functions and personal information during a phone conversation, thus eliminating interruptions. Two experiments were conducted. In the first phase, 12 participants were asked to enter a 10-digit number on the device. Users were provided with auditory feedback to acknowledge the correct input as well as input errors. Three interfaces were compared: ear, flip, and visual. It was determined that the ear interface was the best option. The second phase of the experiment used eight participants who performed tasks such as adding contacts during a phone conversation. Six of the eight participants preferred the BlindSight interface.

3 Method

An experiment was conducted to compare the effects of vibratory feedback and audio feedback on a button-finding task. The results were then analyzed on the basis of efficiency as well as the speed with which users were able to complete the task.

3.1 Participants

Twelve participants were recruited. Of the twelve, the data for two of the participants were discarded since they did not seem to fully understand the task. The remaining 10 participants consisted of five male and five female participants. None of the participants were visually impaired; visual impairment was instead simulated in the application. Participants were between 19 and 25 years old. All participants use mobile devices daily. The participants received a $10 remuneration for their assistance.

3.2 Apparatus

The test was conducted on a ZTE Grand X4 mobile phone running Google Android 6.0.1 Marshmallow. See Fig. 2. The device has a 5.5" (139.7 mm) display with a resolution of 720 × 1280 pixels and a 16:9 screen ratio. The display was an in-plane switching (IPS) liquid-crystal display (LCD) capacitive touchscreen with a 267 ppi pixel density. The device has a vibration actuator as well as a speaker.

Fig. 2. ZTE Grand X4.

A custom application was built using Android Studio version 2.3.3. The application was written in Java for the functionality and used extensible markup language (XML) for the graphical user interface.

The setup screen and results screen of the application are seen in Fig. 3a and Fig. 3b. The application included two modes. In mode 1, the application relied on vibration feedback. In mode 2, the application used audio feedback. The UI for each mode is in Fig. 4a and Fig. 4b. Fifteen buttons appear in each mode.

(a) (b)

Fig. 3. Various parts of the application (a) setup and (b) result screen.

(a) (b)

Fig. 4. Various feedback modes (a) vibration and (b) audio mode.

In mode 1, the vibration features of the device were accessed using the Vibrator class. Varying types of vibrational feedback were created by increasing the duration of vibration and changing the vibration pattern. The correct "end goal" buttons for the five trials were buttons 15, 10, 3, 9, and 4. One button in each of the four directions around the end goal used a vibration pattern of three 100-ms pulses, indicating one button away from the goal. The other buttons had a single 100-ms vibration pulse, indicating the end goal was more than one button away. Finally, the end goal had a single 500-ms pulse, indicating the correct button.
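The proximity rule described for mode 1 can be sketched as follows. This is a minimal illustration rather than the application's actual code: the 3-column by 5-row button layout, the 100-ms gap between pulses, and the function names are assumptions not stated in the text. The returned list alternates off and on durations in milliseconds, beginning with an off time, which is the general shape of pattern that Android's Vibrator class accepts.

```python
# Sketch only: the 3 x 5 layout and the 100-ms gap between pulses are
# assumptions; the paper specifies only the pulse counts and lengths.
COLUMNS = 3  # assumed number of columns for buttons 1..15

def position(button):
    """Return (row, column) for a 1-based button number, row-major order."""
    return divmod(button - 1, COLUMNS)

def proximity(pressed, goal):
    """Classify a press as 'goal', 'adjacent' (one button away in one of
    the four directions), or 'far' (more than one button away)."""
    if pressed == goal:
        return "goal"
    (r1, c1), (r2, c2) = position(pressed), position(goal)
    return "adjacent" if abs(r1 - r2) + abs(c1 - c2) == 1 else "far"

def vibration_pattern(kind, gap_ms=100):
    """Return alternating off/on durations (ms), starting with an off time."""
    pulses = {"goal": [500], "adjacent": [100, 100, 100], "far": [100]}[kind]
    pattern = []
    for i, on_ms in enumerate(pulses):
        pattern += [0 if i == 0 else gap_ms, on_ms]
    return pattern
```

Under the assumed layout, pressing button 12 when the goal is button 15 would be classified as adjacent and would produce three 100-ms pulses.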

In mode 2, audio-feedback features were implemented using the ToneGenerator class. Different sounds were used to represent the user's proximity to the goal. From the ToneGenerator class, TONE_CDMA_SOFT_ERROR_LITE for 300 ms indicated one button away from the goal, TONE_PROP_BEEP for 100 ms indicated more than one button away from the goal, and TONE_CDMA_HIGH_L for 500 ms indicated that the end goal had been reached.
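The tone assignments for mode 2 amount to a small lookup table. The sketch below records them as data; the tone constant names and durations come from the description above, while the dictionary, the proximity labels, and the function name are illustrative assumptions.

```python
# Tone names and durations are from the text; the lookup structure and
# the proximity labels ('adjacent', 'far', 'goal') are assumptions.
TONE_FEEDBACK = {
    "adjacent": ("TONE_CDMA_SOFT_ERROR_LITE", 300),  # one button away
    "far": ("TONE_PROP_BEEP", 100),                  # more than one button away
    "goal": ("TONE_CDMA_HIGH_L", 500),               # end goal reached
}

def tone_for(kind):
    """Return (ToneGenerator constant name, duration in ms) for a proximity class."""
    return TONE_FEEDBACK[kind]
```

On the device, each pair would presumably be passed to the ToneGenerator as a tone type and a duration in milliseconds.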

3.3 Procedure

When participants arrived, the purpose of the experiment was explained. The experimenter then summarized the tasks to participants. Participants used the demonstration buttons in the setup screen to sample the different sounds and vibrations. See Fig. 3a. Participants were then given practice trials. The testing environment had mostly normal background noise. The device was held in participants' hands, as they liked, as trials were performed. An example is seen in Fig. 5.

Fig. 5. Participant performing the experiment.

To offset order effects, the participants were divided into two groups. One group did the audio-feedback trials first, followed by the vibration feedback trials. The other group did the trials in the reverse order.
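One simple way to form two such groups is to alternate the starting mode by participant. The text does not say how participants were assigned or how large each group was, so the alternating rule and the equal group sizes below are assumptions made only for illustration.

```python
def feedback_order(participant_index):
    """Assumed rule: even-indexed participants start with audio,
    odd-indexed participants start with vibration."""
    if participant_index % 2 == 0:
        return ("audio", "vibration")
    return ("vibration", "audio")

# Orders for the 10 participants whose data were retained.
orders = [feedback_order(i) for i in range(10)]
```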

In the vibration feedback mode, participants were asked to press any buttons they wished. They were asked to reach the end goal by finding and pressing a predetermined finish button. In order to simulate a situation that visually impaired users encounter, the participants were not told which button they would need to press in order to finish the test. Instead, they were asked to reach the end goal by relying on the feedback provided by the device's vibration. Each button press had varying types of vibrational feedback, as noted earlier. When the participants pressed buttons closer to the end goal, a pattern-based vibrational feedback was provided, indicating they were getting closer to the end goal. Once the participant pressed the correct end-goal button, a lengthy vibration was produced to notify the user and terminate the trial.

Users were also provided with text on the display indicating the completion of the different trials. This is seen in Fig. 4a. After the completion of the first trial, the second trial started with a different predetermined finish button. For each trial, the predetermined button changed. After the completion of the trials, the members of group 1 were given access to feedback mode 2, which utilizes audio feedback.

Similar to mode 1, before the test for mode 2 was conducted participants were allowed to sample the demonstration buttons to understand the different tones that indicated the correct direction. Participants were then given access to the test and completed each trial upon pressing the predetermined finish button. The test concluded when all of the trials were completed using both of the feedback modes and the user was brought to the result screen as seen in Fig. 3b.

The experiment took less than five minutes to complete. After the test was completed participants were given an open-ended questionnaire to solicit information and provide feedback on which mode they preferred. The questionnaire also invited participants to provide suggestions for improvement.

3.4 Design

The user study employed a 2 × 5 within-subjects design. The independent variables (and levels) were as follows:

- Feedback mode (vibration, audio)

- Trial (1, 2, 3, 4, 5)

Similarly, there were two dependent variables, time per trial and efficiency. Efficiency was quantified as the number of taps required to complete a trial. In all, there were 10 Participants × 2 Feedback Modes × 5 Trials = 100 Trials.
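The trial count can be checked by enumerating the cells of the design. This is only a bookkeeping sketch; the variable names are illustrative.

```python
from itertools import product

participants = range(1, 11)        # 10 participants
modes = ("vibration", "audio")     # 2 feedback modes
trials = range(1, 6)               # 5 trials per mode

# Every (participant, mode, trial) combination in the within-subjects design.
cells = list(product(participants, modes, trials))
total_trials = len(cells)
```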

4 Results and Discussion

There were 100 trials in total, where each participant performed five trials for each of two feedback modes. In general, participants completed tasks faster with audio feedback compared to vibration feedback, thus making audio feedback a viable choice in terms of speed. Audio feedback was also better in terms of efficiency, as vibration feedback took more taps per trial than audio feedback. Although audio feedback performed better in both speed and efficiency, users generally preferred vibration feedback for social reasons.

4.1 Speed - Time per trial

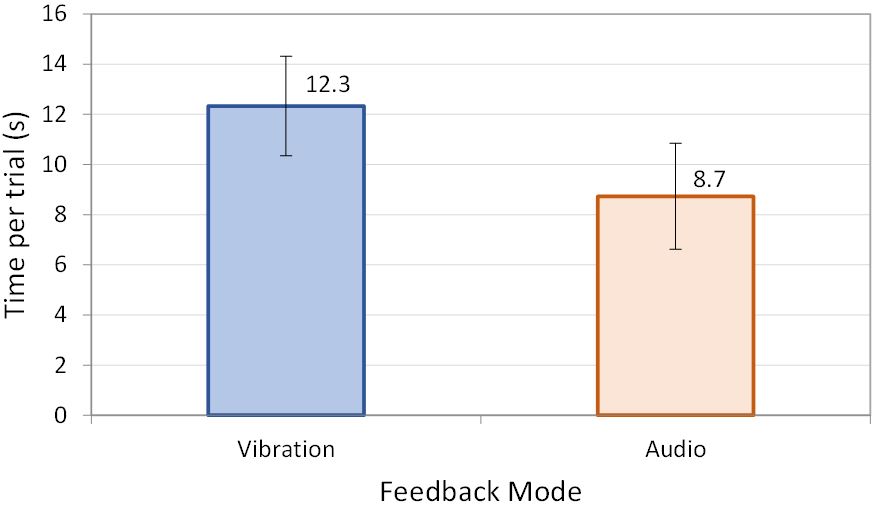

The mean time per trial was 10.5 seconds over the 100 trials in the experiment. The mean for the audio feedback trials was 8.7 s. Vibration feedback took 41.3% longer with a mean time per trial of 12.3 s. See Fig. 6. The difference was statistically significant (F(1,9) = 7.57, p < .05).

Fig. 6. Mean time per trial (s) by feedback mode. Error bars represent ±1 SD.
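The relative difference in mean time can be reproduced from the two reported means. The helper name below is illustrative. Because the means are rounded to one decimal place, the result is about 41.4%, which differs slightly from the reported 41.3%; the reported value was presumably computed from unrounded means.

```python
def percent_more(value, baseline):
    """Percentage by which value exceeds baseline."""
    return 100.0 * (value - baseline) / baseline

# Vibration (12.3 s) relative to audio (8.7 s), using the rounded means.
longer = percent_more(12.3, 8.7)
```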

Reducing the time as much as possible in order to reach the goal faster is paramount, and audio feedback provides a promising start. This difference could possibly be due to the need to wait and feel the vibration. Audio feedback, on the other hand, is easily distinguishable, allowing participants to quickly realize that they have not yet reached the end goal. The feedback result aligns with Yatani and Truong's findings [6] in which the interface with tactile feedback was slower than an interface without tactile feedback.

A comparison of the mean times per trial between the feedback modes is presented in Fig. 7. Of the five trials, the highest mean completion time for vibration feedback was 14.7 s thus making trial 4 the slowest for vibration. On the other hand, audio feedback experienced its slowest mean completion time in trial 1 which was 11.4 s. It can be observed that the highest mean completion time for vibration observed in trial 4 was 3.3 s or 28.9% slower than the highest time audio feedback experienced in trial 1. The promising finding is how audio feedback shows steady improvement from trial 1 to trial 5, with each trial decreasing in the time to complete the task.

This demonstrates promise for the future since as the users get familiar with the various audio feedback tones, the possibility to reduce completion time improves.

Surprisingly, vibration feedback showed an increase in time after trial 1, except for trial 3, peaking at trial 4, after which it reached its lowest time in trial 5. The considerable delay for vibration could be attributed to participants familiarizing themselves with the interface to properly understand the different variations and meanings.

Fig. 7. Mean time per trial (s) by feedback mode and trial.

As users became familiar with the vibration forms, we can see a notable improvement in trial 5 for vibration feedback. This could suggest an even lower completion time for vibration feedback with continued practice. However, the effect of trial on time per trial was not statistically significant (F(4,36) = 0.71, ns). These findings therefore suggest, but do not establish, that participants were able to learn audio feedback faster and thus performed better than with vibration feedback.

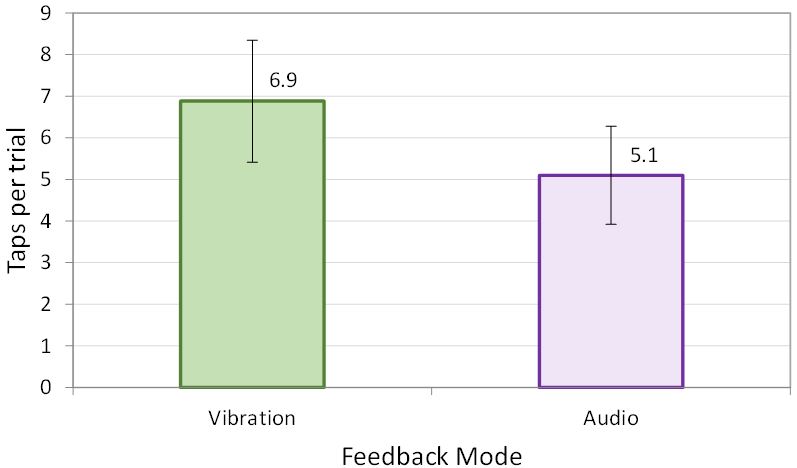

4.2 Efficiency - Number of button taps per trial

The second dependent variable was efficiency, which was measured as the number of button taps required before reaching the desired end goal. Lower scores are better. The grand mean was 5.9 taps per trial. The mean for audio feedback was 5.1 taps per trial. Vibration feedback took 35.2% more taps per trial with a mean of 6.9 taps. See Fig. 8. However, the difference was not statistically significant (F(1,9) = 1.76, p > .05). Audio feedback therefore had the lower mean on this measure as well, although the difference cannot be distinguished from chance.

The breakdown by trial paints an interesting picture. As seen in Fig. 9, the mean for vibration feedback was 5.2 taps in trial 1 which was lower than the rest of the trials afterward. As the trials went on, the number of taps for vibration feedback increased, reaching the highest at trial 4 requiring a mean of 8.9 button taps.

Fig. 8. Number of button taps per trial by feedback mode. Error bars represent ±1 SD.

Fig. 9. Number of button taps by trial and feedback mode.

After trial 4, vibration improved, reaching 6.9 taps in trial 5, which was still higher than trial 1 at 5.2 taps. On the other hand, audio feedback had its highest number of taps in trial 1 at 6.8 taps. Comparing the two highest means, namely trial 4 for vibration and trial 1 for audio, we see that vibration feedback required 2.1 more taps, or 30.8% more, than audio. Audio feedback demonstrated considerable improvement from trial 1 to trial 5, except in trial 3 with a slight increase.
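The percentage for the comparison of the two worst trials depends on which mean is taken as the baseline. The short calculation below uses the rounded means from the text (8.9 taps for vibration in trial 4, 6.8 taps for audio in trial 1); the variable names are illustrative. The figure of roughly 30.8% corresponds to taking audio as the baseline.

```python
vibration_worst = 8.9  # mean taps, vibration, trial 4
audio_worst = 6.8      # mean taps, audio, trial 1

difference = vibration_worst - audio_worst

# Vibration expressed relative to audio (audio as the baseline).
more_than_audio = 100.0 * difference / audio_worst

# Audio expressed relative to vibration (vibration as the baseline).
less_than_vibration = 100.0 * difference / vibration_worst
```

With the rounded means, the first figure is close to 30.9% and the second is close to 23.6%, so the two phrasings are not interchangeable.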

This is in contrast to vibration which demonstrated worse performance over time, except trial 5. Understandably, in the case of audio, as participants became more familiar with the task and the meaning of audio feedback, there was improvement, unlike in the case of vibration. These findings suggest that with continued practice there is a possibility for both of the feedback methods to improve.

The effect of trial on efficiency (taps per trial) was not statistically significant (F(4,36) = 0.21, ns). Taken together, the efficiency results give an indication that audio feedback may be more efficient than vibration, although, again, the differences were not statistically significant.

4.3 Participant Feedback

An open-ended questionnaire was used to gather the participants' preferences for the feedback modes and the reasoning behind them. The results from the questionnaire were surprising. The majority of the participants preferred vibration feedback over audio feedback. Of the 10 participants, six preferred vibration feedback while four participants preferred audio feedback. This is in contrast to the empirical evidence that was gathered, which demonstrated audio feedback was faster and more efficient. There are several possible reasons why participants preferred vibration.

One reason for preferring vibration is the annoyance of using audio in some environments. On vibration feedback, one participant said the following:

You can use it anywhere, anytime without interrupting others.

There are many places such as libraries which are meant to be quiet. In these places, unnecessary noise is an annoyance to others. Another preference for vibration feedback was due to the effects of a noisy environment on an audio-based feedback. As mentioned by one participant,

If the user is in a noisy environment, then the audio is harder to hear.

In noisy environments such as public places it may not be possible to hear audio feedback. Vibration feedback would not have this issue since it is based entirely on feel.

On the other hand, users preferring audio mentioned the ability to distinguish audio feedback better than vibration. One participant mentioned the following:

Vibrations are often more similar to each other, not as easy to distinguish, compared to different auditory signals.

Finally, participants also provided suggestions on improving the application used for the user study. One participant suggested using better audio feedback for the "one button away" and "not close" buttons. Another participant suggested adding more rows in the application.

5 Limitations and Future Work

One of the most profound limitations in this study was the lack of visually impaired participants. The results are based on visually-abled users; results could differ if the trials were performed by visually-impaired users.

Another limitation is that the end-goal button was known in the experiment software. In real use, the end goal is often not known, but simply exists according to a user's intention. However, in some situations, such as games, the end goal may be known; so, the methodology employed here has some applications in practical use.

A possibility for future work is to use swipe gestures, rather than button taps. The user touches and moves their finger on the display. Feedback (audio or vibration) is generated repeatedly, and changes according to the distance from the goal, until the desired contact location is reached. Even if visually-abled participants were used, the task could be done eyes-free, without looking at the display.

Additional future work could focus on the type of tones that are easier for users to understand as well as their effects on user performance. This also applies to vibration with the goal of improving speed, efficiency, and user satisfaction.

6 Conclusion

In conclusion, this user study compared two types of feedback, namely vibration and audio. The goal was to reduce the hindrances that visually-impaired users have faced since the transition to touch-screen devices. The aim was to identify the ideal form of feedback that guides the user to a goal without the need for visual feedback.

To conduct the study, an application providing two different forms of feedback was designed. From the user study, it was determined that audio feedback performed better in terms of both speed and efficiency. Vibration feedback took 41.3% longer to complete and took 35.2% more taps than audio feedback, although only the difference in time was statistically significant. In terms of participants' preference, six out of 10 participants preferred vibration feedback, making it the preferred feedback mode as a practical issue in day-to-day life.

References

1. Brewster, S., Chohan, F., Brown, L.: Tactile feedback for mobile interactions. In: Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems - CHI 2007, pp. 159-162. ACM, New York (2007). https://doi.org/10.1145/1240624.1240649

2. Chang, A., O'Sullivan, C.: Audio-haptic feedback in mobile phones. In: Extended Abstracts of the ACM SIGCHI Conference on Human Factors in Computing Systems - CHI 2005, pp. 1264-1267. ACM, New York (2005). https://doi.org/10.1145/1056808.1056892

3. Kane, S. K., Bigham, J. P., Wobbrock, J. O.: Slide rule: Making touch screens accessible to blind people using multi-touch interaction techniques. In: Proceedings of the ACM SIGACCESS Conference on Computers and Accessibility - ASSETS '08, pp. 73-80. ACM, New York (2008). https://doi.org/10.1145/1414471.1414487

4. Li, K. A., Baudisch, P., Hinckley, K.: BlindSight: Eyes-free access to mobile phones. In: Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems - CHI 2008, pp. 1389-1398. ACM, New York (2008). https://doi.org/10.1145/1357054.1357273

5. Shin, H., Lim, J., Lee, J., Kyung, K., Lee, G.: Tactile feedback for button GUI on touch devices. In: Extended Abstracts of the ACM SIGCHI Conference on Human Factors in Computing Systems - CHI 2012, pp. 2633-2636. ACM, New York (2012). https://doi.org/10.1145/2212776.2223848

6. Yatani, K., Truong, K. N.: SemFeel: A user interface with semantic tactile feedback for mobile touch-screen devices. In: Proceedings of the ACM SIGCHI Symposium on User Interface Software and Technology - UIST 2009, pp. 111-120. ACM, New York (2009). https://doi.org/10.1145/1622176.1622198