Roig-Maimó, M. F., Manresa-Yee, C., Varona, J., & MacKenzie, I. S. (2016). Evaluation of a mobile head-tracker interface for accessibility. Proceedings of the 15th International Conference on Computers Helping People With Special Needs - ICCHP 2016 (LNCS 9759), pp. 449-456. Berlin: Springer. doi: 10.1007/978-3-319-41267-2_63

Abstract – FaceMe is an accessible head-tracker vision-based interface for users who cannot use standard input methods for mobile devices. We present two user studies to evaluate FaceMe as an alternative to touch input. The first presents performance and satisfaction results for twelve able-bodied participants. We also describe a case study with four motor-impaired participants with multiple sclerosis. In addition, the operation details of the software are described.

Evaluation of a Mobile Head-tracker Interface for Accessibility

Maria Francesca Roig-Maimó1, Cristina Manresa-Yee1, Javier Varona1, and I. Scott MacKenzie2

1Dept. of Mathematics and Computer Science

University of Balearic Islands, Palma, Spain

{xisca.roig, cristina.manresa, xavi.varona}@uib.es

2Dept. of Electrical Engineering and Computer Science

York University, Toronto, Canada

mack@cse.yorku.ca

Keywords – mobile human-computer interaction, assistive technology, head-tracker interface, alternative input, multiple sclerosis

1 Introduction

Mobile device usage is growing rapidly, but users with special needs face challenges using these devices. To offer the same opportunities to all members of society, users with the full spectrum of capabilities and limitations should be able to access all Information and Communication Technologies [20]. Users with motor impairments (mainly in the upper body) may be unable to use mobile devices directly, or they may experience difficulty interacting via touch [1, 21]. So, how can users with motor impairments interact with mobile devices? Kane et al. [13] reported that people with disabilities rely on mass-market devices instead of using devices specially designed for them. One approach to accessibility is to utilize built-in sensors, for example, sensors which do not require touch input or an external switch. Not surprisingly, tablet adoption is higher than smartphone adoption among people with motor impairments, since the larger format brings more possibilities for interaction [21].

The integration of cameras on mobile devices combined with greater processing capacity has motivated research on vision-based interfaces (VBI). Whereas in desktop computers, VBIs are widely used in assistive tools for motion-impaired users [14], in mobile contexts the use of VBIs is relatively new. VBIs detect and use the voluntary movement of a body part to interact with the mobile device, achieving a non-invasive hands-free interface. FaceMe is a new head-tracker interface for mobile devices [18]. It works as a mouse replacement interface in desktop computers [23] and offers promise as a device for accessible computing [14]. The objective here is to explore, extend, and evaluate FaceMe as an assistive tool for mobile devices.

2 Related Work

While users with perceptual impairments (e.g., vision, hearing) may encounter problems with computer output, people with motor impairments face difficulties in computer input. This latter group cannot employ standard input devices, and instead use established solutions for desktop computing. The interfaces range from head wands or switches [16] to eye trackers [4] to head trackers [15].

There are fewer assistive tools for mobile devices than for desktop computers. Mobile accessible solutions typically arrive through third-party developers or via the operating system. Examples include screen magnifiers and screen readers (e.g., VoiceOver on iOS or TalkBack on Android), which support users with sight loss.

For able-bodied users, touch gestures are widely used. So, accessible alternatives are required to perform standard gestures. An example is AssistiveTouch on iOS which uses a single, moveable touch point to access the device's physical buttons.

An alternative to touch input is voice control (e.g., Siri on iOS or Google Now on Android), which is beneficial for users with vision or motor impairments. However, these methods do not offer total control of the system, as they usually target specific tasks such as opening an app or placing a call.

Other mobile input mechanisms involve external devices such as switches to detect and incorporate the user's body motion. Detecting body motion can be done by processing data from various sensors, such as the gyroscope or the front camera. In this research, we focus on processing images provided by the device's front camera.

The data are used for vision-based control and interaction. Commercial applications, such as the Smart Screen on Samsung's Galaxy S4, use camera data to detect the position of the face and eyes to perform functions like scrolling within documents, screen rotation, or pausing video playback. However, additional research is needed to explore the detection of body motion as an input means for users with physical impairments. Notably, there are some related projects [8, 9] and apps [7, 11, 19, 22], as well as some research on vision-based interfaces integrated in specific applications, such as gaming or 3D interaction [6, 10].

3 System Description

FaceMe is a head-tracker for mobile devices (developed in iOS 8.0) that uses front-camera data to detect features in the nose region, to track a set of points, and to return the average point as the nose position (i.e., the head position of the user).
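The averaging step described above can be illustrated with a minimal Python sketch. It assumes the tracked feature points are already available as (x, y) pairs in image coordinates; the function name `nose_position` and the sample points are hypothetical and are not taken from the FaceMe implementation, which also performs feature detection and tracking that this sketch omits.

```python
from statistics import fmean


def nose_position(points):
    """Return the average (x, y) of the tracked feature points.

    `points` is a hypothetical list of (x, y) image coordinates produced
    by some feature tracker; detection and tracking are not shown here.
    """
    if not points:
        raise ValueError("at least one tracked point is required")
    xs, ys = zip(*points)
    return (fmean(xs), fmean(ys))


# Hypothetical tracked points around the nose region.
tracked = [(100.0, 200.0), (104.0, 198.0), (102.0, 205.0)]
print(nose_position(tracked))
```

Averaging several points rather than following a single one is a common way to damp the jitter of individual features, which may be one reason for the design, although the source does not state its rationale.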

The nose position is then translated to the mobile screen by a pre-defined transfer function based on differential positioning. The interface reports the change in coordinates, with each location reported relative to the previous location, rather than to a fixed location. To perform a selection, we use a dwell-time criterion. FaceMe is stable and robust for different users, light conditions, and backgrounds [17]. Earlier work established the method's viability in a target-selection task with able-bodied users [18].
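As an illustration of differential positioning and dwell-time selection, the following Python sketch moves a pointer by the change in nose position between frames and reports a selection once the pointer has stayed on the same target long enough. It is a sketch under stated assumptions, not the FaceMe transfer function: the constant `gain`, the clamping to a 1024 × 768 point screen, and the class names `DifferentialPointer` and `DwellSelector` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

SCREEN_W, SCREEN_H = 1024, 768  # screen size in Apple points (see footnote 3)


def clamp(value, low, high):
    """Keep `value` inside the closed interval [low, high]."""
    return max(low, min(high, value))


@dataclass
class DifferentialPointer:
    """Pointer driven by frame-to-frame changes in the nose position."""
    x: float = SCREEN_W / 2
    y: float = SCREEN_H / 2
    gain: float = 4.0  # hypothetical constant gain, not from the paper
    prev_nose: Optional[Tuple[float, float]] = None

    def update(self, nose):
        # Each report is relative to the previous nose location,
        # not to a fixed reference point.
        if self.prev_nose is not None:
            dx = nose[0] - self.prev_nose[0]
            dy = nose[1] - self.prev_nose[1]
            self.x = clamp(self.x + self.gain * dx, 0, SCREEN_W - 1)
            self.y = clamp(self.y + self.gain * dy, 0, SCREEN_H - 1)
        self.prev_nose = nose
        return (self.x, self.y)


class DwellSelector:
    """Report a target once the pointer has rested on it for `dwell_ms`."""

    def __init__(self, dwell_ms):
        self.dwell_ms = dwell_ms
        self._target = None
        self._entered_ms = None

    def update(self, target, now_ms):
        # Restart the timer whenever the pointer moves to a different target.
        if target != self._target:
            self._target = target
            self._entered_ms = now_ms
        if target is None:
            return None
        if now_ms - self._entered_ms >= self.dwell_ms:
            self._entered_ms = now_ms  # avoid re-firing on every frame
            return target
        return None
```

With `dwell_ms=0` the selector fires on the first frame in which the pointer enters a target, which corresponds to selecting on entry; larger values require the user to hold the head still over the target.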

4 Evaluation

Two user studies were conducted to evaluate FaceMe as an alternative input system for mobile devices. The studies aim at determining if all regions of the device screen (an iPad) are accessible for users.

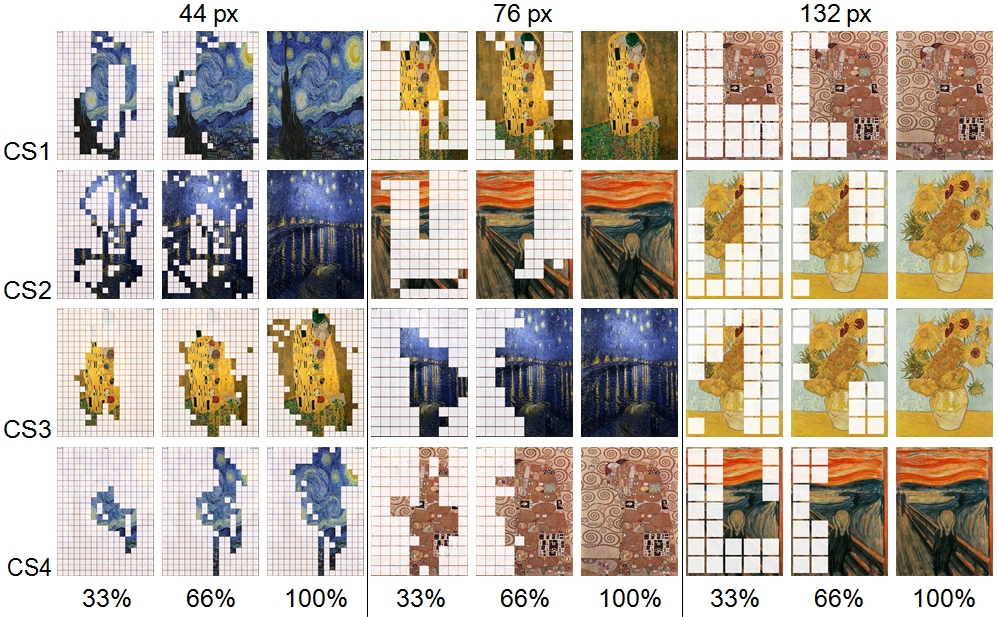

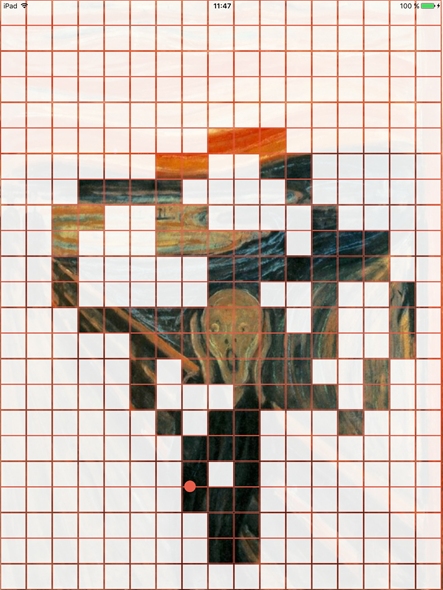

The first user study involved simple pointing tasks that spanned all the regions of the device screen. The task was a picture-revealing puzzle game. A picture was covered with an m × n grid of tiles (with three different tile sizes); participants had to move over all the tiles to remove them and to uncover the image (see top row Figure 1). A tile was selected and removed immediately when movement of the user's head/nose produced a coordinate inside the tile. This selection mode is equivalent to a 0-ms dwell-time criterion.
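The tile-removal rule can be sketched in Python as a simple hit test. The layout is an assumption: the sketch places the grid at the top-left corner of a 1024 × 768 point screen and ignores any leftover margin, and the function names `tile_at` and `play` are hypothetical. The actual game layout is not described in the source.

```python
def tile_at(x, y, tile_size, screen_w=1024, screen_h=768):
    """Return the (column, row) of the tile containing (x, y), or None.

    Assumes a grid of whole tiles anchored at the top-left corner.
    """
    cols = screen_w // tile_size
    rows = screen_h // tile_size
    col, row = int(x // tile_size), int(y // tile_size)
    if 0 <= col < cols and 0 <= row < rows:
        return (col, row)
    return None


def play(pointer_path, tile_size, screen_w=1024, screen_h=768):
    """Remove every tile the pointer enters; return the tiles still covered."""
    cols, rows = screen_w // tile_size, screen_h // tile_size
    covered = {(c, r) for c in range(cols) for r in range(rows)}
    for x, y in pointer_path:
        # A tile is removed as soon as one coordinate falls inside it.
        covered.discard(tile_at(x, y, tile_size, screen_w, screen_h))
    return covered


# Hypothetical pointer path crossing two 132-point tiles.
remaining = play([(10, 10), (140, 10)], tile_size=132)
print(len(remaining))
```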

The second study used a point-select task with a 1000 ms dwell-time criterion for selection. The task simulated the iOS home screen on an iPad (see Figure 1a) with three icon sizes (see Figure 1b-d). Participants were asked to select all the icons, which were distributed across the screen.

Fig. 1. Top row: Screenshots of the picture reveal puzzle game with three different tile sizes. Bottom row: a) iOS home screen on an iPad. b-d) Screenshots of the simulated iOS home screen with three different icon sizes.

4.1 Participants and Apparatus

Twelve unpaid able-bodied participants (four female), aged 20 to 71 (mean = 34.8, SD = 18.3), were recruited from the local town and university. None had previous experience with head-tracker interfaces.

Four participants with multiple sclerosis (two female) participated in the user study. Ages ranged between 46 and 67 years. They all need assistance in basic daily activities due to their motor and sensory impairments. They possess some control of their heads, although the range of movement is limited. They all use wheelchairs and lack trunk control. Three have vision disturbances.

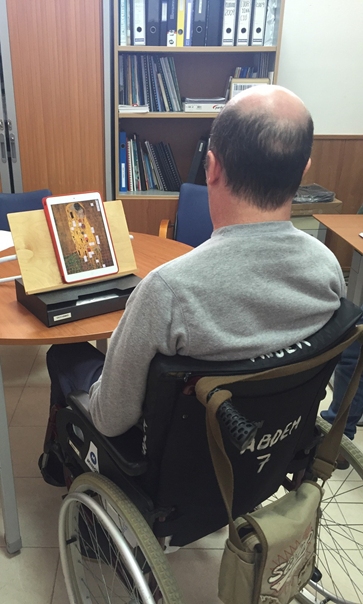

The tests used an iPad Air (2048 × 1536 resolution, 264 dpi; see footnote 3), which was placed on a stand on a table (see Figure 2).

Fig. 2. Two of the motor-impaired participants performing the user studies.

4.2 Procedure and Design

We followed a within-subjects design with one independent variable, target size, for both user studies. The levels for the target size were the same for both studies: 44 px, 76 px, and 132 px. According to the iOS Human Interface Guidelines [2], the optimal sizes are 44 × 44 px for a UI element and 76 × 76 px for an App icon. The third level (132 × 132 px) was chosen to simulate a zoom or screen magnifier operation.
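To give a sense of the physical size of these targets, the short Python sketch below converts Apple points to millimetres. It relies on two figures from the paper: the 2048 × 1536 pixel panel corresponds to 1024 × 768 points (a scale factor of 2), and the panel density is 264 dpi. The function name `points_to_mm` is hypothetical, and the resulting sizes are derived arithmetic rather than values reported by the authors.

```python
SCALE = 2048 / 1024   # device pixels per Apple point, from the stated resolutions
DPI = 264             # panel density stated in the paper
MM_PER_INCH = 25.4


def points_to_mm(points):
    """Convert a length in Apple points to millimetres on this display."""
    return points * SCALE / DPI * MM_PER_INCH


for size in (44, 76, 132):
    print(f"{size} px -> {points_to_mm(size):.1f} mm")
```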

For the first user study (picture-revealing puzzle), we had 391 tasks for the 44 px level, 130 tasks for the 76 px level, and 35 tasks for the 132 px level. For the second user study (home screen icon selection), we had 24 tasks for each level of the target size independent variable.
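The tile counts for the puzzle are consistent with filling a 1024 × 768 px screen with whole square tiles. The Python sketch below checks this; the floor-division layout is an inference from the reported numbers, not something the source states, and `tile_count` is a hypothetical helper.

```python
SCREEN_W, SCREEN_H = 1024, 768  # screen size in px (Apple points, footnote 3)


def tile_count(tile_size):
    """Number of whole square tiles that fit on the screen."""
    return (SCREEN_W // tile_size) * (SCREEN_H // tile_size)


for size in (44, 76, 132):
    print(size, tile_count(size))  # prints 391, 130, and 35 respectively
```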

Each able-bodied participant completed two blocks of three conditions presented randomly; each motor-impaired participant completed only one block.

Thus, there were (12 participants × (391 tasks + 130 tasks + 35 tasks) × 2 blocks) + (4 participants × (391 tasks + 130 tasks + 35 tasks) × 1 block) = 15,568 tasks for the first user study, and (12 participants × 24 tasks × 3 conditions × 2 blocks) + (4 participants × 24 tasks × 3 conditions × 1 block) = 2,016 tasks for the second user study.
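The task totals can be verified with a few lines of Python. All numbers come from the design described above; only the variable names are introduced here.

```python
# Tasks per target size in the picture-revealing puzzle (first study).
puzzle_tasks = {44: 391, 76: 130, 132: 35}
tasks_per_block_study1 = sum(puzzle_tasks.values())

# Icon selection (second study): 24 tasks for each of the 3 target sizes.
tasks_per_block_study2 = 24 * 3

ABLE_BODIED, MOTOR_IMPAIRED = 12, 4   # participants per group
BLOCKS_ABLE, BLOCKS_IMPAIRED = 2, 1   # blocks completed per participant


def total_tasks(tasks_per_block):
    """Total tasks across both participant groups."""
    return (ABLE_BODIED * tasks_per_block * BLOCKS_ABLE
            + MOTOR_IMPAIRED * tasks_per_block * BLOCKS_IMPAIRED)


study1_total = total_tasks(tasks_per_block_study1)
study2_total = total_tasks(tasks_per_block_study2)
print(study1_total, study2_total)  # prints 15568 2016
```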

The dependent variables for both studies were task completion time (efficiency) and whether or not all regions of the screen were reachable (effectiveness).

Participants also assessed the usability of FaceMe using the System Usability Scale (SUS) questionnaire [5] and a comfort assessment based on ISO 9241-411 [12].
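For readers unfamiliar with SUS, the standard scoring procedure can be sketched in Python as follows. Each of the ten items is answered on a 1 to 5 scale; odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name `sus_score` and the sample responses are hypothetical; no participant data from this study are reproduced.

```python
def sus_score(responses):
    """Compute the SUS score (0-100) from ten responses on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten responses")
    total = 0
    for item, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("responses must be between 1 and 5")
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item % 2 == 1 else (5 - response)
    return total * 2.5


# Hypothetical answers from one respondent.
print(sus_score([5, 2, 4, 1, 5, 2, 4, 1, 5, 2]))
```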

4.3 Results and Discussion

First User Study: Picture-revealing Puzzle – All able-bodied participants were able to uncover all the tiles of the puzzle in all the size conditions.

The mean completion time was lower for the 132 px condition (20.4 s) compared to 76 px (58.0 s) and 44 px (579.5 s). The differences were statistically significant (F(2, 22) = 220.1, p < .001). Pairwise comparisons with Bonferroni corrections revealed significant differences between all pairs (p < .05). See Figure 3a.
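As a reminder of how a Bonferroni correction works for three target sizes, the Python sketch below enumerates the three pairwise comparisons and multiplies each raw p-value by the number of comparisons, capping the result at 1. The raw p-values are hypothetical placeholders chosen only for illustration; the paper reports only that all adjusted values were below .05.

```python
from itertools import combinations


def bonferroni(p_values):
    """Return Bonferroni-adjusted p-values (each multiplied by the count, capped at 1)."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


levels = ["44 px", "76 px", "132 px"]
pairs = list(combinations(levels, 2))   # three pairwise comparisons

raw_p = [0.001, 0.004, 0.012]           # hypothetical, not from the study
for pair, p_adj in zip(pairs, bonferroni(raw_p)):
    print(pair, round(p_adj, 3), p_adj < 0.05)
```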

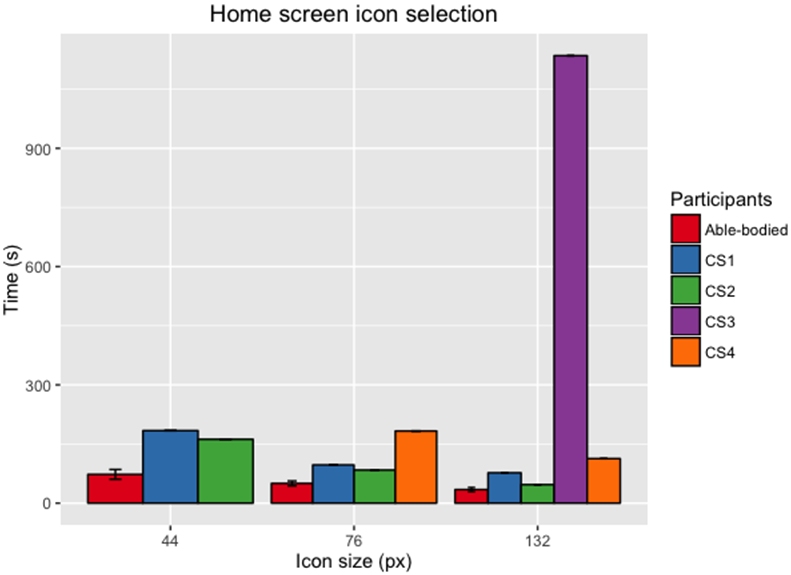

Fig. 3. Completion time by participant group for (a) the picture-revealing puzzle user study and (b) the home screen icon selection user study.

Two of the four motor-impaired participants were able to uncover all the tiles of the puzzle in all the size conditions, but the other two had difficulty with the smallest tile size (44 px). Figure 4 shows the evolution of the uncovered tiles for the motor-impaired participants: most started by uncovering the tiles in the center of the screen and left the tiles at the edges of the screen for the end.

These observations suggest that FaceMe is a viable accessible input method that enables users to access all the screen regions as long as the interactive elements have a minimum size of 76 px. As far as possible, those elements should be placed toward the center of the screen.

Fig. 4. Time evolution of the uncovered tiles for the motor-impaired participants.

Second User Study: Home Screen Icon Selection – All the able-bodied participants were able to select all the targets in all the size conditions.

The mean completion time was lower for the 132 px condition (34.5 s) compared to 76 px (50.1 s) and 44 px (73.0 s). The differences were statistically significant (F(2, 22) = 178.5, p < .001). Pairwise comparisons with Bonferroni corrections revealed significant differences between all pairs (p < .05). See Figure 3b.

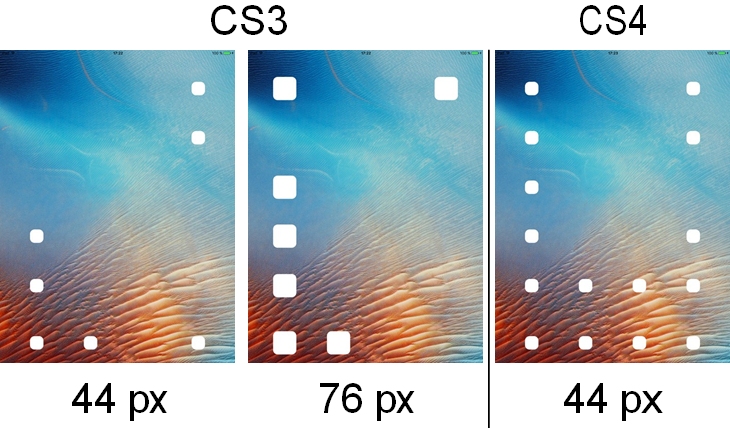

Two of the four motor-impaired participants were able to select all the targets in all the size conditions, but participant CS4 had difficulty with the 44 px target size, and participant CS3 felt tired and was not able to finish the test with the 44 px and 76 px target sizes (see Figure 5).

Fig. 5. Targets that could not be selected by the participants CS3 and CS4.

These results suggest that FaceMe can be used for selecting targets as long as they have a minimum size of 76 px. Considering the targets that could not be selected by participants CS3 and CS4, targets should be placed toward the center of the screen to the extent possible.

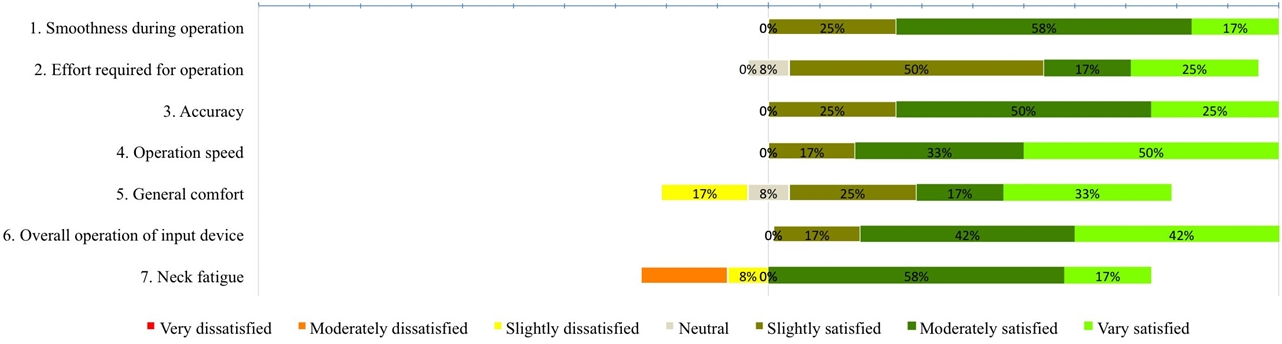

Usability – The overall average SUS score was 87.1 (SD = 10.3). According to Bangor et al. [3], scores higher than 70 are in the acceptable range. Our score (87.1) is above Excellent on the adjective rating scale. Figure 6 gives the results of the comfort assessment questionnaire. Overall, FaceMe received a positive rating, even on the question about neck fatigue.

Fig. 6. Assessment of comfort questionnaire results.

5 Conclusion

In this work, FaceMe, a touch-alternative head-tracker vision-based interface for users with physical limitations, was presented and evaluated through two user studies with twelve able-bodied participants and four motor-impaired users with multiple sclerosis. The results indicate that FaceMe can be used as an alternative input method for motor-impaired users to interact with mobile devices, allowing them to perform point-select tasks across all the regions of the device display. The study with the motor-impaired participants highlights the importance of the size of interactive elements (recommended minimum is 76 px) and their location on the screen (preferably toward the center).

6 Acknowledgments

This work has been partially supported by grant BES-2013-064652 (FPI) and project TIN2012-35427 by the Spanish MINECO, FEDER funding.

References

1. Anthony, L., Kim, Y., Findlater, L.: Analyzing user-generated YouTube videos to understand touchscreen use by people with motor impairments. In: Proc. CHI. pp. 1223-1232. CHI '13, ACM (2013). https://doi.org/10.1145/2470654.2466158

2. Apple Inc: iOS Human Interface Guidelines: Designing for iOS, https://developer.apple.com/library/ios/documentation/userexperience/conceptual/mobilehig/

3. Bangor, A., Kortum, P.T., Miller, J.T.: An Empirical Evaluation of the System Usability Scale. Int J Hum-Comput Int 24(6), 574-594 (2008). https://doi.org/10.1080/10447310802205776

4. Biswas, P., Langdon, P.: A new input system for disabled users involving eye gaze tracker and scanning interface. Journal of Assistive Technologies 5(2), 58-66 (2011)

5. Brooke, J.: SUS-A quick and dirty usability scale. Usability evaluation in industry 189(194), 4-7 (1996). https://www.taylorfrancis.com/chapters/edit/10.1201/9781498710411-35/sus-quick-dirty-usability-scale-john-brooke

6. Cuaresma, J., MacKenzie, I.S.: A comparison between tilt-input and facial tracking as input methods for mobile games. In: Proc. IEEE-GEM. pp. 70-76. IEEE (2014)

7. Electronic rescue service: Games Sokoban Head Labyrinth, https://play.google.com/store/apps/details?id=ua.ers.headMoveGames

8. Fundación Vodafone España: EVA facial mouse, https://play.google.com/store/apps/details?id=com.creasi.eviacam.service

9. Google and Beit Issie Shapiro: Go ahead project, https://www.hakol-barosh.org.il/

10. Hansen, T.R., Eriksson, E., Lykke-Olesen, A.: Use your head: exploring face tracking for mobile interaction. In: Proc. Ext. Abstracts CHI. pp. 845-850. ACM (2006). https://doi.org/10.1145/1125451.1125617

11. Inisle Interactive Technologies: Face Scape, https://itunes.apple.com/es/app/face-scape/id1019147652?mt=8

12. ISO: ISO/TS 9241-411:2012 - Ergonomics of human-system interaction - Part 411: Evaluation methods for the design of physical input devices. https://www.iso.org/standard/54106.html

13. Kane, S.K., Jayant, C., Wobbrock, J.O., Ladner, R.E.: Freedom to roam: a study of mobile device adoption and accessibility for people with visual and motor disabilities. In: Proc. SIGACCESS. pp. 115-122. Assets '09, ACM (2009). https://doi.org/10.1145/1639642.1639663

14. Manresa-Yee, C., Ponsa, P., Varona, J., Perales, F.J.: User experience to improve the usability of a vision-based interface. Interact Comput 22(6), 594-605 (2010). https://doi.org/10.1016/j.intcom.2010.06.004

15. Manresa-Yee, C., Varona, J., Perales, F.J., Salinas, I.: Design recommendations for camera-based head-controlled interfaces that replace the mouse for motion-impaired users. Univers. Access Inf. Soc. 13(4), 471-482 (2014). https://doi.org/10.1007/s10209-013-0326-z

16. Ntoa, S., Margetis, G., Antona, M., Stephanidis, C.: Scanning-based interaction techniques for motor impaired users. Assistive Technologies and Computer Access for Motor Disabilities, G. Kouroupetroglou, Ed. IGI Global (2013). https://doi.org/10.4018/978-1-4666-4438-0.ch003

17. Roig-Maimó, M.F., Manresa-Yee, C., Varona, J.: A robust camera-based interface for mobile entertainment. Sensors 16(2), 254 (2016). https://doi.org/10.3390/s16020254

18. Roig-Maimó, M.F., Varona Gómez, J., Manresa-Yee, C.: Face Me! Head-tracker interface evaluation on mobile devices. In: Proc. Ext. Abstracts CHI. pp. 1573-1578. ACM Press (2015). https://doi.org/10.1145/2702613.2732829

19. Shtick Studios: HeadStart, https://play.google.com/store/apps/details?id=com.yair.cars

20. Stephanidis, C.: The universal access handbook. CRC Press (2009). https://doi.org/10.1201/9781420064995

21. Trewin, S., Swart, C., Pettick, D.: Physical accessibility of touchscreen smartphones. In: Proc. SIGACCESS. pp. 19:1-19:8. ASSETS '13, ACM (2013). https://doi.org/10.1145/2513383.2513446

22. Umoove Ltd: Umoove experience: the 3D face & eye tracking flying game, https://itunes.apple.com/es/app/umoove-experience-3d-face/id731389410?mt=8

23. Varona, J., Manresa-Yee, C., Perales, F.J.: Hands-free vision-based interface for computer accessibility. J Netw Comput Appl 31(4), 357-374 (2008). https://doi.org/10.1016/j.jnca.2008.03.003

Footnotes:

3 That means a resolution of 1024-by-768 Apple points. From now on, we refer to the Apple point as px and assume a 1024-by-768 px resolution.