Research

Here are highlights of a few recent and current projects.

Lightness perception

Lightness is the perception of achromatic surface colours: black, white, and shades of grey. Amazingly, we have little idea how the brain accomplishes this seemingly simple computation. More amazingly, even the most successful computer vision systems come nowhere near people's ability to perceive lightness accurately.

Why is lightness such a difficult problem? The fundamental obstacle seems to be ambiguity. The retinal image is affected by many factors: the intensity and colour of lighting, the colours and positions of the objects being viewed, and properties of the atmosphere, to name a few. Somehow our visual system disentangles these factors, and we perceive lighting, surface colour, and shape as separate features of the environment.

This is a fascinating problem at the core of vision science. It is a genuine 'fruit fly' problem: it encapsulates issues that arise in many domains, such as the deep ambiguity of visual images and the ways in which perception is optimized for the particular world that we evolved in.

I recently reviewed these problems in the Annual Review of Vision Science.

One factor limiting progress in understanding lightness is that researchers have often not taken advantage of new tools for computational modelling. In a recent paper I took a step in this direction by developing a Markov random field model of lightness and lighting. The model accounts for several powerful illusions (such as the snake illusion shown at left, where the horizontal rectangles are physically identical but appear very different), as well as broad phenomena that have been problematic for previous models.

In a current collaboration with David Brainard of the University of Pennsylvania and Marcus Brubaker of York University, we are drawing on computer vision tools for intrinsic image estimation to create more general and powerful models of human lightness perception.
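The core idea behind intrinsic image estimation can be illustrated with the classic Retinex heuristic, which is far simpler than the models described above. Below is a minimal sketch, assuming a one-dimensional row of pixels whose luminance is reflectance times illumination, where illumination varies slowly and reflectance changes only at sharp edges. The function name `retinex_1d`, the `threshold` value, and the anchoring rule (the brightest surface is taken to be white) are illustrative assumptions, not part of the lab's models.

```python
import math


def retinex_1d(luminance, threshold=0.1):
    """Split a 1D luminance profile into reflectance and illumination.

    Assumes luminance = reflectance * illumination, so in log space
    log L = log R + log I. Small log-steps are attributed to slowly
    varying illumination and discarded; large ones are attributed to
    reflectance edges and kept. Reflectance is only recovered up to a
    global scale, so the brightest estimated surface is anchored to 1.0.
    """
    logs = [math.log(v) for v in luminance]
    # Reintegrate only the large (edge-like) log-steps.
    log_r = [0.0]
    for prev, cur in zip(logs, logs[1:]):
        step = cur - prev
        log_r.append(log_r[-1] + (step if abs(step) > threshold else 0.0))
    # Anchoring assumption: the highest reflectance in the row is white.
    top = max(log_r)
    reflectance = [math.exp(v - top) for v in log_r]
    # Whatever reflectance does not explain is assigned to illumination.
    illumination = [lum / r for lum, r in zip(luminance, reflectance)]
    return reflectance, illumination


# Toy scene: a 0.9 patch flanked by 0.3 patches under a slow lighting ramp.
true_r = [0.3] * 5 + [0.9] * 5 + [0.3] * 5
true_i = [1.0 + 0.02 * k for k in range(15)]
lum = [r * i for r, i in zip(true_r, true_i)]
refl, illum = retinex_1d(lum)
# refl is about 0.33 on the flanks and 1.0 in the centre; illum rises steadily.
```

The sketch also shows why lightness is ambiguous: a sharp shadow edge would produce exactly the same kind of large log-step as a reflectance edge, and the heuristic simply assumes it did not.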

Natural lighting

Lighting conditions strongly affect the visual stimuli that reach our eyes. The intensity, colour, direction, and diffuseness of lighting are factors that biological and artificial visual systems must deal with in order to perceive scenes accurately. I recently reviewed work on lighting in a collaborative paper with Wendy Adams of the University of Southampton.

Interestingly, there is currently no easy way of measuring complex lighting conditions, and so some aspects of lighting are difficult to study.

To get around this problem I built a multidirectional photometer (pictured at left). Using this device, Yaniv Morgenstern, then a Ph.D. student in the laboratory, discovered some interesting ways in which human vision is tuned to natural lighting. His psychophysical and modelling work showed that this tuning can easily be overridden by lighting cues from shading and shadows.

This work was done in collaboration with Wilson Geisler of the University of Texas at Austin and Laurence Harris of York University.
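As an illustration of how multidirectional measurements can be summarized, here is a minimal sketch that fits the sensor readings with a constant term plus a linear term in each sensor's pointing direction, which amounts to a first-order spherical harmonic fit. The constant captures the average light level, the linear term points towards the dominant light direction, and the ratio of the two serves as a rough directionality index (lower values mean more diffuse light). The 14-sensor layout, the cosine-response assumption, the function names, and this particular index are all hypothetical; they are not the photometer's actual design or the analysis used in the work above.

```python
import math


def solve(a_mat, rhs):
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [rhs[i]] for i, row in enumerate(a_mat)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_light_field(directions, readings):
    """Least-squares fit of reading ~ a + b . d over unit sensor directions d.

    Returns (a, unit direction of b, |b| / a). Assumes a > 0.
    """
    rows = [[1.0, dx, dy, dz] for dx, dy, dz in directions]
    # Normal equations: (X^T X) p = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    xty = [sum(r[i] * y for r, y in zip(rows, readings)) for i in range(4)]
    a, bx, by, bz = solve(xtx, xty)
    strength = math.sqrt(bx * bx + by * by + bz * bz)
    direction = (bx / strength, by / strength, bz / strength) if strength > 0 else None
    return a, direction, strength / a


# Hypothetical sensor layout: 6 axis directions plus 8 cube diagonals.
S3 = 1 / math.sqrt(3)
SENSORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)] + [
    (sx * S3, sy * S3, sz * S3) for sx in (1, -1) for sy in (1, -1) for sz in (1, -1)
]


def simulate(ambient, source_strength, source_dir=(0.0, 0.0, 1.0)):
    """Toy readings: uniform ambient light plus one distant source seen by cosine-response sensors."""
    return [ambient + source_strength * max(0.0, sum(d * s for d, s in zip(dvec, source_dir)))
            for dvec in SENSORS]


_, est_dir, harsh = fit_light_field(SENSORS, simulate(ambient=1.0, source_strength=2.0))
_, _, overcast = fit_light_field(SENSORS, simulate(ambient=5.0, source_strength=2.0))
```

With the light directly overhead, the fitted direction comes out as straight up, and adding more ambient light lowers the directionality index. The symmetric sensor layout was chosen so that the normal equations are well conditioned; an irregular layout would still work but needs enough directions to constrain all four parameters.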

We are currently using the Oculus Rift to investigate how people perceive complex lighting conditions in virtual environments. This work is a collaboration with Laurence Maloney of New York University, Kris Ehinger of the University of Melbourne, and Laurie Wilcox of York University.

We are also collaborating with Michael Brown of York University on developing more practical ways of measuring and characterizing complex lighting.

Psychophysical reverse correlation

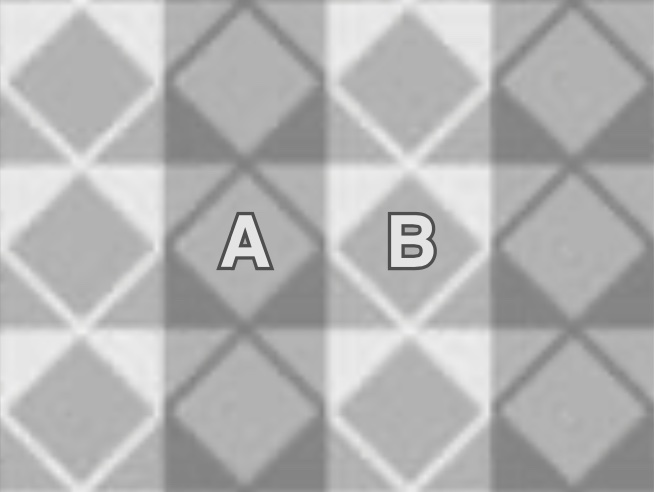

The human visual system is complex, and performance measures more sophisticated than proportion correct and reaction time can be useful for revealing its behaviour. The classification image is a powerful psychophysical tool, similar to reverse correlation methods in physiology. In a well-defined task (e.g., which square is brighter?), we record observers' responses to a wide range of stimuli, usually perturbed by visual noise, and we infer a mathematical relationship between the visual input and the behavioural output.

In the argyle illusion (shown at left), diamonds A and B are physically identical, but A appears much lighter. Minjung Kim (MJ), then a Ph.D. student in the lab, used classification images to determine what image features are responsible for this lightness illusion.

In her experiments, the luminance of each element of this stimulus (bars, triangles, diamonds) was randomly perturbed on thousands of trials. She found that only perturbations of elements very close to diamonds A and B affected observers' lightness judgements, meaning that the features driving this strong lightness illusion are surprisingly local and limited.
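The logic of this kind of experiment can be illustrated with a small simulation. This is a minimal sketch, assuming a two-alternative task in which each of six stimulus elements receives independent Gaussian luminance noise on every trial, and a simulated observer whose decision depends on only two of them. The element count, observer weights, and noise levels are hypothetical, not values from the argyle study. The classification image is estimated with the classic averaging rule: mean noise on trials where the observer chose A minus mean noise on trials where it chose B.

```python
import random


def simulate_observer(weights, noise_sd, internal_sd, rng):
    """One trial: perturb every element, then choose 'A' if the weighted
    noise plus internal noise is positive."""
    noise = [rng.gauss(0.0, noise_sd) for _ in weights]
    decision = sum(w * n for w, n in zip(weights, noise)) + rng.gauss(0.0, internal_sd)
    return noise, decision > 0


def classification_image(trials):
    """Mean noise on 'A' trials minus mean noise on 'B' trials, element by element."""
    n_elem = len(trials[0][0])
    sums = {True: [0.0] * n_elem, False: [0.0] * n_elem}
    counts = {True: 0, False: 0}
    for noise, chose_a in trials:
        counts[chose_a] += 1
        for k, v in enumerate(noise):
            sums[chose_a][k] += v
    return [sums[True][k] / counts[True] - sums[False][k] / counts[False]
            for k in range(n_elem)]


rng = random.Random(1)
# Hypothetical observer: only elements 0 and 1 (standing in for elements
# adjacent to the targets) influence the response; signs are arbitrary.
# Elements 2-5 (standing in for distant bars) are ignored.
TRUE_WEIGHTS = [1.0, -1.0, 0.0, 0.0, 0.0, 0.0]
trials = [simulate_observer(TRUE_WEIGHTS, noise_sd=1.0, internal_sd=1.0, rng=rng)
          for _ in range(5000)]
ci = classification_image(trials)
# Expect values near +0.9 and -0.9 for elements 0 and 1, and near 0 elsewhere.
```

The recovered weights are non-zero only for the elements the observer actually uses, which is the signature of a local mechanism. Regression-based estimates, such as logistic regression of the responses on the noise, are a common alternative to this averaging rule.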