Université York University

ATKINSON FACULTY OF LIBERAL AND PROFESSIONAL STUDIES

SCHOOL OF ANALYTIC STUDIES & INFORMATION TECHNOLOGY

SCIENCE AND TECHNOLOGY STUDIES

STS 3700B 6.0 HISTORY OF COMPUTING AND INFORMATION TECHNOLOGY


Lecture 21: The Last 50 Years


Topics

-

"Civilisation has advanced as people discovered new ways of exploiting various physical resources such as materials, forces and energies. In the twentieth century information was added to the list when the invention of computers allowed complex information processing to be performed outside human brains. The history of computer technology has involved a sequence of changes from one type of physical realisation to another—from gears to relays to valves to transistors to integrated circuits and so on. Today's advanced lithographic techniques can squeeze fraction of micron wide logic gates and wires onto the surface of silicon chips. Soon they will yield even smaller parts and inevitably reach a point where logic gates are so small that they are made out of only a handful of atoms. On the atomic scale matter obeys the rules of quantum mechanics, which are quite different from the classical rules that determine the properties of conventional logic gates. So if computers are to become smaller in the future, new, quantum technology must replace or supplement what we have now. The point is, however, that quantum technology can offer much more than cramming more and more bits to silicon and multiplying the clock-speed of microprocessors. It can support entirely new kind of computation with qualitatively new algorithms based on quantum principles."

A Barenco, A Ekert, A Sanpera & C Macchiavello [ A Short Introduction to Quantum Computation ] -

In this lecture I will cover only the events and trends of the last fifty years that I consider most significant:

- The shift from number crunching to communication, and the advent of the internet and of the web

- The introduction of the personal computer

- The idea of and the first steps toward nano-technology and quantum computing

- The convergence of number crunching and communication: distributed computing

-

1. The Shift from Computation to Communication: The Internet and the Web

One of the most useful resources documenting the history of the internet is Michael Hauben and Ronda Hauben's Netizens Netbook: On the History and Impact of Usenet and the Internet. In his Foreword, Thomas Truscott praises the work as "a comprehensive, well documented look at the social aspects of computer networking," and goes on to say: "The authors examine the present and the turbulent future, but focus on the technical and social roots of the Net."

Michael Hauben is also the author of History of ARPANET: Behind the Net. The Untold History of the ARPANET or The 'Open' History of the ARPANET/Internet.

I am updating this page on April 30, 2003. "Ten years ago, CERN issued a statement declaring that a little known piece of software called the World Wide Web was in the public domain. That was on 30 April 1993, and it opened the floodgates to Web development around the world. By the end of the year Web browsers were de rigueur for any self-respecting computer user, and ten years on, the Web is an indispensable part of the modern communications landscape. The idea for the Web goes back to March 1989 when CERN Computer scientist Tim Berners-Lee wrote a proposal for a 'Distributed Information Management System' for the high-energy physics community. Back then, a new generation of physics experiments was just getting underway. They were performed by collaborations numbering hundreds of scientists from around the world - scientists who were ready for a new way of sharing information over the Internet. The Web was just what they needed. By Christmas 1990, Berners-Lee's idea had become the World Wide Web, with its first servers and browsers running at CERN. Through 1991, the Web spread to other particle physics laboratories around the World, and was as important as e-mail to those in the know."


[ from Ten Years Public Domain for the Original Web Software ]

A comprehensive history of the web is The World Wide Web History Project. You may also find NCSA Mosaic History quite interesting: "The NCSA Mosaic project may have ended in 1997, but the impact of this breakthrough software is still being felt today."

"The ARPANET started in 1969… The ARPANET was the experimental network connecting the mainframe computers of universities and other contractors funded and encouraged by the Advanced Research Projects Agency of the U.S. Department of Defense. The ARPANET started out as a research test bed for computer networking, communication protocols, computer and data resource sharing, etc. However, what it developed into was something surprising. The widest use of the ARPANET was for computer facilitated human-human communication using electronic mail (e-mail) and discussion lists." [ from Chapter 5 ]

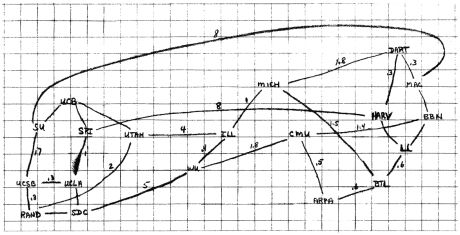

Sketch Map of the Possible Topology of ARPANET by Larry Roberts (Late 60s)

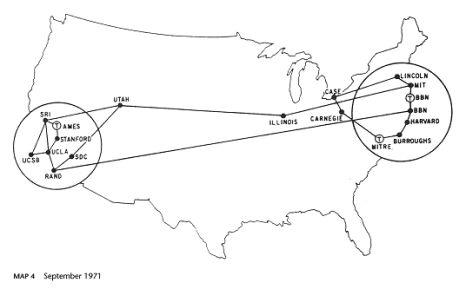

ARPANET in 1971

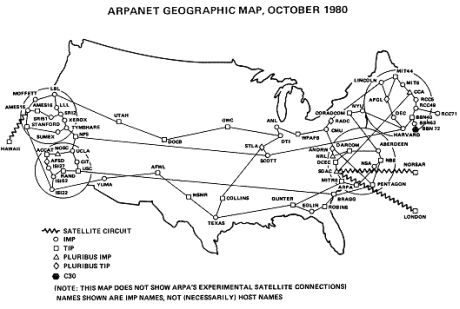

ARPANET in 1980

Given today's highly interactive computer interfaces, it may seem strange that until the 1960s "computers operated almost exclusively in batch mode. Programmers punched or had their programs punched onto cards. Then the stack of punched cards was provided to the local computer center. The computer operator assembled stacks of cards into batches to be fed to the computer for continuous processing. Often a programmer had to wait over a day in order to see the results from his or her input." Operation was essentially sequential: each job had to wait for the previous one to finish.

"Crucial to the development of today's global computer networks was the vision of researchers interested in time-sharing. These researchers began to think about social issues related to time-sharing. They observed the communities that formed from the people who used time-sharing systems and considered the social significance of these communities. Two of the pioneers involved in research in time-sharing at MIT, Fernando Corbato and Robert Fano, wrote, 'The time-sharing computer system can unite a group of investigators in a cooperative search for the solution to a common problem, or it can serve as a community pool of knowledge and skill on which anyone can draw according to his needs. Projecting the concept on a large scale, one can conceive of such a facility as an extraordinarily powerful library serving an entire community - in short, an intellectual public utility.'" [ from Chapter 5 ]

Notice that time-sharing is quite different from multitasking: the former is the concurrent use of a computer by two or more users, while the latter is a computer's ability to execute more than one program or task at the same time. Joseph Licklider was one of the first people to take a strong interest in the new time-sharing systems introduced in the early 1960s, and he saw how such systems could be shaped into new ways of communicating with the computer.

Since Licklider was the director of the Information Processing Techniques Office (IPTO), a division of the Advanced Research Projects Agency (ARPA), he was in a position to set the priorities and obtain the appropriate funding for a network, which eventually became the internet. Between 1963 and 1964 that is precisely what he did. "Both Robert Taylor and Larry Roberts, future successors of Licklider as director of IPTO, pinpoint Licklider as the originator of the vision which set ARPA's priorities and goals and basically drove ARPA to help develop the concept and practice of networking computers." [ from Chapter 5 ]

Here is a remarkable statement by Robert Taylor: "They were just talking about a network where they could have a compatibility across these systems, and at least do some load sharing, and some program sharing, data sharing, that sort of thing. Whereas, the thing that struck me about the time-sharing experience was that before there was a time-sharing system, let's say at MIT, then there were a lot of individual people who didn't know each other who were interested in computing in one way or another, and who were doing whatever they could, however they could. As soon as the time-sharing system became usable, these people began to know one another, share a lot of information, and ask of one another, 'How do I use this? Where do I find that?' It was really phenomenal to see this computer become a medium that stimulated the formation of a human community. And so, here ARPA had a number of sites by this time, each of which had its own sense of community and was digitally isolated from the other one. I saw a phrase in the Licklider memo. The phrase was in a totally different context, something that he referred to as an 'intergalactic network.' I asked him about this… in fact I said, 'Did you have a networking of the ARPANET sort in mind when you used that phrase?' He said, 'No, I was thinking about a single time-sharing system that was intergalactic.'" [ from Chapter 5 ]
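The contrast between batch mode and time-sharing can be made concrete with a toy simulation. What follows is a sketch under simplifying assumptions, not a model of CTSS or any historical system. Three hypothetical users submit jobs of 5, 2 and 1 abstract time units. The batch version runs the jobs one after another. The time-sharing version uses simple round-robin slicing with a quantum of one unit, a policy chosen here only for illustration. The function names and job sizes are invented for the example.

```python
from collections import deque


def batch(jobs):
    """Run jobs strictly one after another, in submission order.

    Returns {name: (first_response, completion)} in abstract time units."""
    t = 0
    times = {}
    for name, work in jobs:
        start = t          # the job gets no attention until its predecessors finish
        t += work
        times[name] = (start, t)
    return times


def time_shared(jobs, quantum=1):
    """Round-robin time-sharing: each job runs for at most `quantum` units,
    then goes to the back of the queue if it still has work left."""
    queue = deque(jobs)
    t = 0
    first, done = {}, {}
    while queue:
        name, remaining = queue.popleft()
        first.setdefault(name, t)  # when this user first got the machine
        run = min(quantum, remaining)
        t += run
        remaining -= run
        if remaining:
            queue.append((name, remaining))
        else:
            done[name] = t
    return {name: (first[name], done[name]) for name, _ in jobs}


jobs = [("alice", 5), ("bob", 2), ("carol", 1)]
print(batch(jobs))        # {'alice': (0, 5), 'bob': (5, 7), 'carol': (7, 8)}
print(time_shared(jobs))  # {'alice': (0, 8), 'bob': (1, 5), 'carol': (2, 3)}
```

Both schedules do the same 8 units of total work. Under time-sharing, however, every user gets a first response within a couple of units, and the short jobs finish much sooner, while the long job pays a small penalty. That kind of immediate responsiveness is what allowed the interactive communities described by Corbato, Fano and Taylor to form.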

Further information on J.C.R. Licklider is available in The Netizens Netbook (see especially chapters 5, 6, and 7). This vision, however, turned out to be more difficult to implement than anticipated. We complain today about the lack of standardization (different operating systems, different hardware, etc.), but in the early 1960s this lack was much more substantial. What was needed was a communication protocol that would be essentially independent of the actual machines connected to the network. It took some time before an effective protocol was adopted. In part, the problem was that, as ARPANET grew quite rapidly, the various protocols, while initially satisfactory, soon showed their limitations (e.g. an inability to handle larger amounts of traffic). In 1974 Vinton Cerf and Robert Kahn published the design of a new protocol, TCP (later split into TCP and IP), designed not only for the present but also for the future. The ARPANET switched over to TCP/IP on January 1, 1983, and that, essentially, is what we still use today.
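One small but concrete ingredient of machine independence is agreeing on how multi-byte numbers travel over the wire. Some processors store the most significant byte first and others store it last. The Internet protocols transmit numbers most significant byte first, in what is called network byte order. The sketch below uses Python's standard `struct` module, whose `!` prefix selects network byte order. The three fields are loosely modelled on the first eight bytes of a TCP header (source port, destination port, sequence number). The helper names are hypothetical and not part of any real networking library, and this is in no sense an implementation of TCP.

```python
import struct

# "!" = network byte order (big-endian) with standard sizes and no padding,
# so the bytes are identical whatever CPU packs them.
# H = unsigned 16-bit field, I = unsigned 32-bit field.
TOY_HEADER = struct.Struct("!HHI")


def pack_header(src_port, dst_port, seq):
    """Encode the three fields into 8 machine-independent bytes."""
    return TOY_HEADER.pack(src_port, dst_port, seq)


def unpack_header(data):
    """Decode the first 8 bytes back into (src_port, dst_port, seq)."""
    return TOY_HEADER.unpack(data[:TOY_HEADER.size])


wire = pack_header(1024, 80, 1)
print(wire.hex())            # 0400005000000001
print(unpack_header(wire))   # (1024, 80, 1)

# For contrast: little-endian layout of the same 16-bit value 1024.
print(struct.pack("<H", 1024).hex())  # 0004
```

Because both ends agree on the layout in advance, a big-endian mainframe and a little-endian minicomputer decode the same eight bytes to the same numbers.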

There is another way in which we can study the evolution of the internet, and that is through the vocabulary that developed, spontaneously, around it. The Jargon File (in its printed form, The New Hacker's Dictionary) "is a collection of slang terms used by various subcultures of computer hackers. Though some technical material is included for background and flavor, it is not a technical dictionary; what we describe here is the language hackers use among themselves for fun, social communication, and technical debate. The 'hacker culture' is actually a loosely networked collection of subcultures that is nevertheless conscious of some important shared experiences, shared roots, and shared values. It has its own myths, heroes, villains, folk epics, in-jokes, taboos, and dreams. Because hackers as a group are particularly creative people who define themselves partly by rejection of 'normal' values and working habits, it has unusually rich and conscious traditions for an intentional culture less than 50 years old. As usual with slang, the special vocabulary of hackers helps hold their culture together - it helps hackers recognize each other's places in the community and expresses shared values and experiences. Also as usual, not knowing the slang (or using it inappropriately) defines one as an outsider, a mundane, or (worst of all in hackish vocabulary) possibly even a suit. All human cultures use slang in this threefold way - as a tool of communication, and of inclusion, and of exclusion." The Jargon File provides a unique insider's view of the development of the internet. If to know a language is to know the people who speak it, then this continuously growing work gives more than a glimpse into the mindset of the people who developed Unix and the internet. Here is the definition of hacker:

"hacker n. [originally, someone who makes furniture with an axe] 1. A person who enjoys exploring the details of programmable systems and how to stretch their capabilities, as opposed to most users, who prefer to learn only the minimum necessary. 2. One who programs enthusiastically (even obsessively) or who enjoys programming rather than just theorizing about programming. 3. A person capable of appreciating hack value. 4. A person who is good at programming quickly. 5. An expert at a particular program, or one who frequently does work using it or on it; as in 'a Unix hacker.' (Definitions 1 through 5 are correlated, and people who fit them congregate.) 6. An expert or enthusiast of any kind. One might be an astronomy hacker, for example. 7. One who enjoys the intellectual challenge of creatively overcoming or circumventing limitations. 8. [deprecated] A malicious meddler who tries to discover sensitive information by poking around. Hence 'password hacker,' 'network hacker.' The correct term for this sense is cracker. The term 'hacker' also tends to connote membership in the global community defined by the net (see the network). For discussion of some of the basics of this culture, see the How To Become A Hacker FAQ. It also implies that the person described is seen to subscribe to some version of the hacker ethic (see hacker ethic). It is better to be described as a hacker by others than to describe oneself that way. Hackers consider themselves something of an elite (a meritocracy based on ability), though one to which new members are gladly welcome. There is thus a certain ego satisfaction to be had in identifying yourself as a hacker (but if you claim to be one and are not, you'll quickly be labeled bogus). See also geek, wannabee. This term seems to have been first adopted as a badge in the 1960s by the hacker culture surrounding TMRC and the MIT AI Lab. We have a report that it was used in a sense close to this entry's by teenage radio hams and electronics tinkerers in the mid-1950s."

Finally, here are some other selected resources that you may find useful for understanding the internet revolution. To get a sense of the sequence of events, browse through Hobbes' Internet Timeline, which highlights most of the key events and technologies that helped shape the Internet as we know it today.


WWW Growth (Hobbes' Internet Timeline © 2003 Robert H Zakon)

The Internet Society's A Brief History of the Internet. "In this paper, several of us involved in the development and evolution of the Internet share our views of its origins and history." PBS presents a beautiful graphical version of this period: Life on the Internet.

The Colline Report: Collective Invention and European Policies illustrates the European views on the history, nature and purpose of the net.

A great site is the Resource Center for Cyberculture Studies, "an online, not-for-profit organization whose purpose is to research, teach, support, and create diverse and dynamic elements of cyberculture."


The State of the Internet 2000 and The State of the Internet 2001, prepared by the United States Internet Council and International Technology and Trade Associates (ITTA), have become "cornerstone document[s] for understanding Internet trends." These reports provide "an overview of recent Internet trends and examine how the Internet is affecting both business and social relationships around the world. [ The 2001 ] Report has also expanded its vision to include sections covering the international development of the Internet as well as the rapid emergence of wireless Internet technologies."


[ from State of the Internet 2000 ] -

2. The Introduction of the Personal Computer

One of the best sources for the history of the PC is Ken Polsson's Chronology of Personal Computers. I have extracted (and edited) from it the following simplified chronology:

- 1956 First transistorized computer: the TX-0 at MIT

- 1958 Jack Kilby, at Texas Instruments, completes the first integrated circuit (IC)

- 1960 Digital Equipment introduces the first minicomputer, the PDP-1, the first commercial machine with keyboard and monitor

- 1963 Douglas Engelbart invents the mouse

- 1964 John Kemeny and Thomas Kurtz, at Dartmouth College, develop the BASIC (Beginner's All-purpose Symbolic Instruction Code) programming language. The American Standards Association adopts ASCII (American Standard Code for Information Interchange) as a standard code for data transfer.

- 1965 Gordon Moore, at Fairchild Semiconductor, predicts that the number of transistors on an integrated circuit will double every 12 months for the next ten years. In 1975 he revises the prediction to a doubling every two years (a figure of 18 months is also widely quoted), and the trend becomes known as Moore's Law.

- 1969 Honeywell releases the H316 'Kitchen Computer,' the first home computer

- 1972 Intel introduces the 200 kHz 8008 chip, the first commercial 8-bit microprocessor

- 1973 First portable PC: the Canadian-made MCM/70 microcomputer

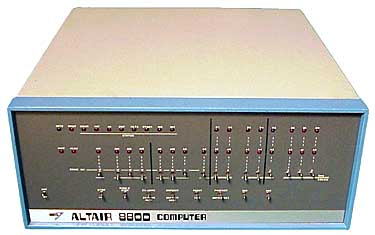

- 1974 MITS completes the first Altair 8800 microcomputer prototype

- 1975 MITS delivers the first generally-available Altair 8800.

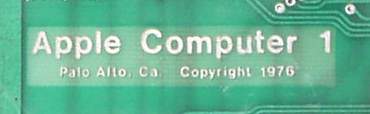

IBM unveils the IBM 5100 Portable Computer. It is a briefcase-size minicomputer with BASIC, 16kB of RAM, tape storage drive holding 204 KB per tape, keyboard, and built-in 5-inch screen - 1976 Steve Wozniak and Steve Jobs finish a computer circuit board called the Apple I computer. The term 'personal computer' first appears in the May issue of Byte magazine. The Apple I computer board is sold in kit form

- 1981 IBM's Personal Computer, the IBM 5150, is announced
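The 1965 entry in the chronology describes exponential growth. A few lines of arithmetic show how much the assumed doubling period matters. The function name is hypothetical and the model is idealised: density is assumed to double smoothly every `doubling_months` months. The sketch compares doubling periods of 12, 18 and 24 months over one decade.

```python
def growth_factor(years, doubling_months):
    """Multiplication factor after `years` years if density doubles
    every `doubling_months` months (an idealised, smooth exponential)."""
    doublings = years * 12 / doubling_months
    return 2 ** doublings


# Moore's 1965 extrapolation: yearly doubling for ten years.
print(growth_factor(10, 12))            # 1024.0
# The same decade with an 18-month and a 24-month doubling period.
print(round(growth_factor(10, 18), 1))  # 101.6
print(growth_factor(10, 24))            # 32.0
```

Over a single decade, the choice of doubling period separates a thousandfold increase from a thirtyfold one. This is why the exact period attached to Moore's Law matters.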

The MCM/70 (1972 - 1973)

Notice that it is difficult to determine which machine was actually the first personal computer. For example, York's Computer Museum is celebrating the 30th anniversary of the unveiling of the world’s first portable PC, a Canadian-made MCM/70 microcomputer, designed by André Arpin and others between 1972 and 1973. See also York University Computer Museum and Center for the History of Canadian Microcomputing Industry. Here is the abstract of a commemorative lecture by André Arpin: "Tiny by today's standards, yet the MCM computer with only 16 k bytes of address space available for the system and the program combined was used to solve business, engineering and scientific problems. Implemented on an Intel 8008 using APL, it used a 32-character display, a digital cassette tape, an object oriented file system, virtual memory, an active I/O bus and a switching power supply with battery backup. It was ahead of its time and stayed that way for a surprisingly long time. Since peripheral controllers were not readily available at the time, keyboard, tape, disk, printer, CRT controllers and the switching power supply were all designed and implemented. Then as technology evolved, micro coded processors were also designed and produced."

The first PC: MITS Altair 8800 (1975)

With the Altair 8800, to enter programs or data, one had to set the toggle switches on the front panel. There was no keyboard, video terminal or paper tape reader. All programming was in machine code (binary digits). The Altair 8800 also lacked output devices such as printers: output was signaled by the pattern of flashing lights on the front panel. The first models came with only 256 bytes of memory.
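To give a feel for what entering a program through the front-panel switches involved, the sketch below turns a tiny program into switch settings. It assumes the Altair's Intel 8080 processor and uses two real 8080 opcodes: 0x3E (`MVI A`, which loads the next byte into the accumulator) and 0x76 (`HLT`). It also assumes that a switch in the up position means 1. The three-byte program and the function name are hypothetical illustrations, not a documented Altair listing.

```python
# A hypothetical three-byte Intel 8080 program: put 5 in register A, then stop.
PROGRAM = [
    (0x3E, "MVI A,d8  load the next byte into register A"),
    (0x05, "d8 = 5"),
    (0x76, "HLT       halt the processor"),
]


def switch_pattern(byte):
    """Return the eight data switches for one byte, most significant bit first.

    '^' means switch up (assumed to be 1), 'v' means switch down (0)."""
    return "".join("^" if byte & (1 << bit) else "v" for bit in range(7, -1, -1))


# One line per memory address, in the order the operator would key it in.
for address, (byte, meaning) in enumerate(PROGRAM):
    print(f"{address}  {byte:08b}  {switch_pattern(byte)}  {meaning}")
```

Each byte had to be set on the switches and then stored in memory before the program could run. Even this three-byte program means three rounds of setting eight switches, which makes it easy to see why keyboards, terminals and BASIC interpreters followed so quickly.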

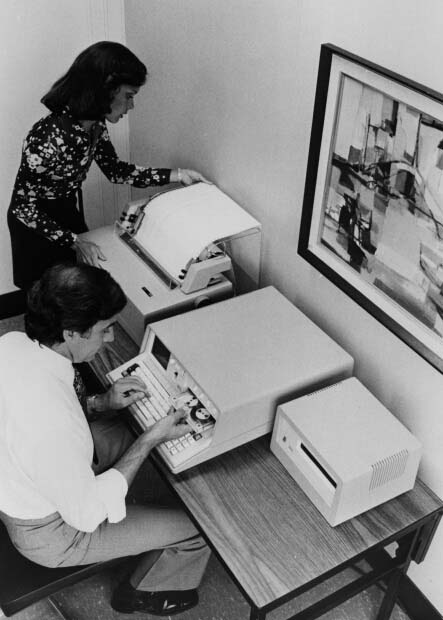

An Early Prototype: IBM 5100 Portable Computer (1975)

Apple-1 Mainboard Logo Close-Up (1976)

The 'First' PC: IBM 5150 (1981)

The IBM Personal Computer, also known as the IBM 5150, was announced on August 12, 1981. It featured a 4.77 MHz Intel 8088 (16-bit) processor, 16 kB of RAM, an 83-key keyboard, two full-height 160 kB floppy disk drives, and a TTL green phosphor screen that could display 25 rows of 80 characters; its operating system was IBM Personal Computer DOS. -

3. The First Steps Toward Nano-Technology and Quantum Computing

"The high speed modern computer sitting in front of you is fundamentally no different from its gargantuan 30 ton ancestors, which were equipped with some 18000 vacuum tubes and 500 miles of wiring! Although computers have become more compact and considerably faster in performing their task, the task remains the same: to manipulate and interpret an encoding of binary bits into a useful computational result."

[ from The Quantum Computer: An Introduction ]

It is therefore not surprising that, in recent years, scientists and engineers have started exploring different architectures and different physical phenomena in a quest for new, more powerful kinds of computers. In addition, physicists and mathematicians have been working on generalizing the work of Turing, Shannon and others, which is the foundation of the computer as we know it. If you recall [ see Lecture 19 ], Shannon's formula for the quantity of information carried by one of n possible and equally probable messages is H = log2(n). This formula bears a remarkable resemblance to the formula that expresses the amount of disorder, or entropy, in a physical system such as a gas. The resemblance turns out to be more than a coincidence, and further connections between physics and information have been discovered in quantum mechanics.
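Shannon's formula is easy to check numerically. The sketch below, with hypothetical function names, computes H = log2(n) directly. It also computes the general Shannon entropy, H = -Σ p log2 p, for messages that are not equally likely. When all n probabilities equal 1/n, the general formula reduces to log2(n). The physical counterpart mentioned in the text is usually written as Boltzmann's S = k log W, where W counts the possible microscopic arrangements. It is quoted here for comparison only and is not computed.

```python
import math


def information_bits(n):
    """Information carried by one of n equally probable messages: H = log2(n)."""
    return math.log2(n)


def entropy_bits(probabilities):
    """General Shannon entropy in bits: H = -sum(p * log2(p)).

    Terms with p == 0 contribute nothing and are skipped."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


print(information_bits(8))              # 3.0: one of 8 messages = 3 yes/no questions
print(entropy_bits([1 / 8] * 8))        # 3.0: the equiprobable case agrees
print(entropy_bits([0.5, 0.25, 0.25]))  # 1.5: uneven odds carry less than log2(3)
```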

Relationships Between Quantum Mechanics and Information Theory

It therefore seems plausible to imagine computers that rely not on classical, Newtonian physics but on quantum mechanics, the physics of the molecular, atomic, and subatomic world. Enter quantum computing. "In a quantum computer, the fundamental unit of information (called a quantum bit or qubit), is not binary but rather more quaternary in nature. This qubit property arises as a direct consequence of its adherence to the laws of quantum mechanics which differ radically from the laws of classical physics. A qubit can exist not only in a state corresponding to the logical state 0 or 1 as in a classical bit, but also in states corresponding to a blend or superposition of these classical states. In other words, a qubit can exist as a zero, a one, or simultaneously as both 0 and 1, with a numerical coefficient representing the probability for each state. This may seem counterintuitive because everyday phenomena are governed by classical physics, not quantum mechanics - which takes over at the atomic level…"

[ from Jacob West's very clear article The Quantum Computer: An Introduction ]
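A single qubit of the kind described in the quotation is easy to simulate classically. One refinement to the quoted wording: the numerical coefficients attached to 0 and 1 are (possibly complex) amplitudes, not probabilities. The probability of observing each outcome is the squared magnitude of its amplitude. The sketch below, with hypothetical function names, normalises a state, computes the two probabilities, and simulates repeated measurements of freshly prepared copies using a seeded random number generator. It illustrates the arithmetic only and does not model any particular quantum hardware.

```python
import math
import random


def qubit(alpha, beta):
    """Normalised state alpha|0> + beta|1> (alpha, beta may be complex)."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    return alpha / norm, beta / norm


def probabilities(state):
    """Probabilities of reading 0 or 1: the squared magnitudes of the amplitudes."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2


def measure(state, rng):
    """Measure a freshly prepared copy of the state: the result is one classical bit."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


plus = qubit(1, 1)    # equal superposition of 0 and 1
minus = qubit(1, -1)  # a different state (opposite sign) with the same probabilities
print(probabilities(plus), probabilities(minus))

rng = random.Random(42)
ones = sum(measure(plus, rng) for _ in range(10_000))
print(ones / 10_000)  # close to 0.5
```

The `minus` state shows why the coefficients are not themselves probabilities. It differs from `plus` only in sign, which no single measurement reveals, yet such relative signs are exactly what quantum algorithms exploit through interference.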

We are still at the very beginning of quantum computing. However, "scientists may eventually mark as a milestone the day in 2001 when Isaac Chuang and his colleagues at IBM determined that the two prime factors of the number 15 are three and five. What made their calculation remarkable, of course, wasn’t the grammar school arithmetic, but that the calculation had been performed by seven atomic nuclei in a custom-designed fluorocarbon molecule."


[ from Harnessing Quantum Bits ]

Another approach, almost at the intersection between classical and quantum computing, consists in harnessing the natural computer that life comes equipped with: DNA. "Leonard Adleman is often called the inventor of DNA computers. His article in a 1994 issue of the journal Science outlined how to use DNA to solve a well-known mathematical problem, called the directed Hamilton Path problem, also known as the 'traveling salesman' problem. The goal of the problem is to find the shortest route between a number of cities, going through each city only once. As you add more cities to the problem, the problem becomes more difficult. Adleman chose to find the shortest route between seven cities. You could probably draw this problem out on paper and come to a solution faster than Adleman did using his DNA test-tube computer. Here are the steps taken in the Adleman DNA computer experiment:

- Strands of DNA represent the seven cities. In genes, genetic coding is represented by the letters A, T, C and G. Some sequence of these four letters represented each city and possible flight path.

- These molecules are then mixed in a test tube, with some of these DNA strands sticking together. A chain of these strands represents a possible answer.

- Within a few seconds, all of the possible combinations of DNA strands, which represent answers, are created in the test tube.

- Adleman eliminates the wrong molecules through chemical reactions, which leaves behind only the flight paths that connect all seven cities."
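Adleman's procedure is a generate-and-filter algorithm: produce every candidate path at once, then discard the wrong ones. Strictly speaking, the problem he solved was the directed Hamiltonian path problem. It asks whether there is a route from a given start city to a given end city that passes through every city exactly once. That problem is related to, but not the same as, the travelling salesman problem of finding the shortest tour. The Python sketch below mimics the filtering steps on a hypothetical four-city graph, not Adleman's seven-city instance. Lists of integers stand in for DNA strands, and the exhaustive loop stands in for the massively parallel chemistry in the test tube. The function names are likewise hypothetical.

```python
def all_walks(edges, n_cities, max_len):
    """Every walk that follows the directed edges, with 1..max_len cities.

    This plays the role of the test tube, where strands join at random
    and every combination appears."""
    walks = [[c] for c in range(n_cities)]
    tube = list(walks)
    for _ in range(max_len - 1):
        walks = [w + [b] for w in walks for (a, b) in edges if a == w[-1]]
        tube.extend(walks)
    return tube


def hamiltonian_paths(edges, n_cities, start, end):
    tube = all_walks(edges, n_cities, max_len=2 * n_cities)
    # Keep only paths that begin at the start city and finish at the end city.
    tube = [w for w in tube if w[0] == start and w[-1] == end]
    # Keep only paths that visit exactly n_cities cities.
    tube = [w for w in tube if len(w) == n_cities]
    # Keep only paths that contain every city (so each appears exactly once).
    tube = [w for w in tube if set(w) == set(range(n_cities))]
    return tube


# Hypothetical one-way flights between four cities, numbered 0 to 3.
FLIGHTS = {(0, 1), (1, 2), (2, 3), (0, 2), (2, 1), (1, 3)}
print(sorted(hamiltonian_paths(FLIGHTS, 4, start=0, end=3)))
# [[0, 1, 2, 3], [0, 2, 1, 3]]
```

The exhaustive enumeration grows exponentially with the number of cities. That growth is why Adleman turned to the enormous number of DNA molecules that can react in parallel in a single test tube.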

-

4. Computing and Communication Converge: Distributed Computing

In the last few years the term convergence has become quite ubiquitous. It usually means "the combining of personal computers, telecommunication, and television into a user experience that is accessible to everyone." I will use it here in a somewhat different sense, to mean the coming together of computing and communication. As we have seen, the computer was first conceived as a computational device, a number-crunching machine. However, first the internet and later, especially, the web shifted the emphasis sharply toward communication. We are now witnessing a sort of synthesis of these two conceptions in the form of distributed computing.

Distributed computing is achieved in one of two ways. One is to connect together, via the internet, an essentially unlimited number of computers which, during their idle periods, can work on a common task. The other is to connect together, via a high-speed local or dedicated network and using specialized software, a number of machines (called a cluster) which can work together as one parallel machine ("roughly speaking, a parallel program has multiple tasks (or: processes) that cooperate to execute the program." [ from An Introduction to Parallel Computing ]).
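The idea of a parallel program whose tasks cooperate can be sketched in a few lines. In the hypothetical example below, the job of counting the primes below 10,000 is split into independent work units. The units are handed to a pool of workers, and the partial results are summed, which is essentially the pattern behind internet-based distributed computing projects. For portability, the workers are threads in a single Python process, using `concurrent.futures.ThreadPoolExecutor` from the standard library. Because of CPython's global interpreter lock, this gives no real speed-up for CPU-bound work. A cluster or volunteer project would instead ship the work units to separate machines. All function names are invented for the illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def is_prime(n):
    """Trial division: slow, but simple and obviously correct."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


def count_primes(lo, hi):
    """One independent work unit: count the primes in [lo, hi)."""
    return sum(1 for n in range(lo, hi) if is_prime(n))


def split(lo, hi, units):
    """Cut [lo, hi) into at most `units` contiguous work units."""
    if hi <= lo:
        return []
    step = -(-(hi - lo) // units)  # ceiling division
    return [(a, min(a + step, hi)) for a in range(lo, hi, step)]


def distributed_count(lo, hi, workers=4, units=16):
    """Farm the work units out to a pool of workers and combine the results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_counts = pool.map(lambda unit: count_primes(*unit), split(lo, hi, units))
        return sum(partial_counts)


print(distributed_count(0, 10_000))  # 1229, the same as count_primes(0, 10_000)
```

Moving the work units to separate processes or separate machines changes where they run, but not how the problem is decomposed or how the partial results are combined.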

A good introduction can be found at Internet-based Distributed Computing Projects: "This site is designed for non-technical people who are interested in learning about, and participating in, public, Internet-based projects which apply distributed computing science to solving real-world problems."

Here is a good definition of one form of distributed computing, the grid, from the Grid Computing Info Centre: "Grid is a type of parallel and distributed system that enables the sharing, selection, and aggregation of resources distributed across 'multiple' administrative domains based on their (resources) availability, capability, performance, cost, and users' quality-of-service requirements. If distributed resources happen to be managed by a single, global centralised scheduling system, then it is a cluster."

And here are three examples of distributed projects.

Screensaver Lifesaver.

"Anyone, anywhere with access to a personal computer, could help find a cure for cancer by giving 'screensaver time' from their computers to the world's largest ever computational project, which will screen 3.5 billion molecules for cancer-fighting potential. The project is being carried out by Oxford University's Centre for Computational Drug Discovery - a unique 'virtual centre' funded by the National Foundation for Cancer Research (NFCR), which is based in the Department of Chemistry and linked with international research groups via the worldwide web - in collaboration with United Devices, a US-based distributed computing technology company, and Intel, who are sponsoring the project."

SETI@home.

Searching for extraterrestrial intelligence. "If we assume that our alien neighbors are trying to contact us, we should be looking for them. There are currently several programs that are now looking for the evidence of life elsewhere in the cosmos. Collectively, these programs are called SETI (the Search for Extra-Terrestrial Intelligence.) SETI@home is a scientific experiment that harnesses the power of hundreds of thousands of Internet-connected computers in the Search for Extraterrestrial Intelligence (SETI). You can participate by running a free program that downloads and analyzes radio telescope data. There's a small but captivating possibility that your computer will detect the faint murmur of a civilization beyond Earth."

Genome@Home.

"The goal of Genome@home is to design new genes that can form working proteins in the cell. Genome@home uses a computer algorithm (SPA), based on the physical and biochemical rules by which genes and proteins behave, to design new proteins (and hence new genes) that have not been found in nature. By comparing these 'virtual genomes' to those found in nature, we can gain a much better understanding of how natural genomes have evolved and how natural genes and proteins work. Some important applications of the Genome@home virtual genome protein design database: engineering new proteins for medical therapy; designing new pharmaceuticals; assigning functions to the dozens of new genes being sequenced every day; understanding protein evolution."

Readings, Resources and Questions

- Read Doc Searls and David Weinberger's World of Ends: What the Internet Is and How to Stop Mistaking It for Something Else. "All we need to do is pay attention to what the Internet really is. It's not hard. The Net isn't rocket science. It isn't even 6th grade science fair, when you get right down to it. We can end the tragedy of Repetitive Mistake Syndrome in our lifetimes — and save a few trillion dollars’ worth of dumb decisions — if we can just remember one simple fact: the Net is a world of ends. You're at one end, and everybody and everything else are at the other ends." Do you agree? Browse through the discussion, and perhaps add your comments to it.

- A very interesting site is Atlas of Cyberspaces. "This is an atlas of maps and graphic representations of the geographies of the new electronic territories of the Internet, the World-Wide Web and other emerging Cyberspaces. These maps of Cyberspaces—cybermaps—help us visualise and comprehend the new digital landscapes beyond our computer screen, in the wires of the global communications networks and vast online information resources. The cybermaps, like maps of the real-world, help us navigate the new information landscapes, as well being objects of aesthetic interest. They have been created by 'cyber-explorers' of many different disciplines, and from all corners of the world." Another, equally interesting site is GVU WWW User Survey. "Since its beginning in 1994, the GVU WWW User Survey has accumulated a unique store of historical and up-to-date information on the growth and trends in Internet usage. It is valued as an independent, objective view of developing Web demographics, culture, user attitudes, and usage patterns. Recently the focus of the Survey has been expanded to include commercial uses of the Web, including advertising, electronic commerce, intranet Web usage, and business-to-business transactions."

- A useful resource on nano-computing is Nanoelectronics & Nanocomputing, where you can find many short introductory articles and links. Other good references to nanotechnology and quantum computing (the two areas overlap to some extent) are Introductions and Tutorials at qbit.org, the home of Oxford's Centre for Quantum Computation, which "provides useful information and links to all material in the field of quantum computing and information processing," and Simon Benjamin and Artur Ekert's A Short Introduction to Quantum-Scale Computing.

- And here are a few resources on distributed computing. A very informative site, with many relevant links, is Grid Computing Planet. Distributed.net: "Founded in 1997, our project has grown to encompass thousands of users around the world. distributed.net's computing power has grown to become equivalent to that of more than 160000 PII 266MHz computers working 24 hours a day, 7 days a week, 365 days a year." TeraGrid: "TeraGrid is a multi-year effort to build and deploy the world's largest, fastest, distributed infrastructure for open scientific research. When completed, the TeraGrid will include 20 teraflops of computing power distributed at five sites, facilities capable of managing and storing nearly 1 petabyte of data, high-resolution visualization environments, and toolkits for grid computing. These components will be tightly integrated and connected through a network that will operate at 40 gigabits per second—the fastest research network on the planet."

- Just to give you a taste of where we may be headed, read Kimberly Patch's article On the Backs of Ants: New Networks Mimic the Behavior of Insects and Bacteria in Technology Review.

© Copyright Luigi M Bianchi 2001, 2002, 2003

Picture Credits: Atlas of Cyberspaces · Zakon.org · IBM Canada · YUCoM

IBM · Obsolete Technology · Vintage Computer Festival · Qbit.org

Last Modification Date: 06 June 2004
