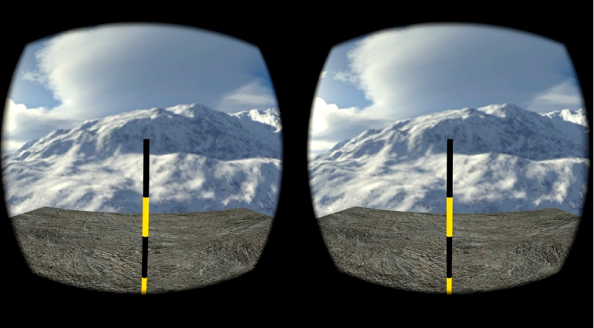

Visual motion and optic flow parsing

2024-Present

This project focuses on the perception of visual motion and optic flow, their interactions with other sensory cues (e.g. binocular disparity) and their effects on behaviours (e.g. eye movements).

Related Publications:

- Guo, H., & Allison, R. S. (2024). Binocular contributions to motion detection and motion discrimination during locomotion. PLOS ONE, 19(12), 1–21. https://doi.org/10.1371/journal.pone.0315392

- Guo, H., Schütz, A. C., & Allison, R. S. (2024). Perceptual and oculomotor dissociation of assimilation and contrast effects of optic flow. [Poster presentation]. Vision Sciences Society. St. Pete Beach, FL. https://www.visionsciences.org/presentation/?id=2430

Home-Base: finding your way home without gravity

2025-Present

This multi-year research project is taking place aboard the International Space Station in collaboration with the Canadian Space Agency (CSA). It follows on from our earlier VECTION project. Here we study astronauts' ability to keep track of their starting position during simulated travel in microgravity. Further details are available on the CSA project page.

Shared Mixed Realities

2019-Present

The goal of this project was to build a multiplayer virtual environment that allows users to interact and collaborate across mixed realities. In this environment, a user wearing a virtual reality (VR) head-mounted display (HMD) can work on joint tasks with a user wearing an augmented reality (AR) HMD and with a third user who has no headset, each through their own view of the shared scene.

The virtual environment was built to investigate the effects of distortions in shared mixed reality environments and the perceived size of complex shapes in VR. This project was conducted in partnership with Qualcomm and the Wilcox Lab.

Related Publications:

- Gunasekera, I., Abadi, R., Afolabi, F., Teng, X., Allison, R. S., & Wilcox, L. M. (2025, May 16 to 20). The contribution of binocular depth information to the perceived size of 3D shapes. [Poster session]. Vision Sciences Society 2025 Conference, St. Pete Beach, Florida, United States.

- Au, D., Allison, R. S., Gunasekera, I., & Wilcox, L. M. (2025). Misperception of the distance of virtual augmentations. In 2025 IEEE Conference Virtual Reality and 3D User Interfaces (VR) (pp. 516-525). IEEE.

- Gunasekera, I., Abadi, R., Afolabi, F., Teng, X., Allison, R. S., & Wilcox, L. M. (2025, June 17 to 19). Perceiving 3D size: The role of depth cues and object interaction. [Poster session]. CIAN-CVR conference, York University, Toronto, Canada.

- Au, D., Mohona, S. S., Wilcox, L. M., & Allison, R. S. (2024). Subjective assessment of visual fidelity: Comparison of forced‐choice methods. Journal of the Society for Information Display, 32(10), 726-740.

- Au, D., Mohona, S. S., Cutone, M., Hou, Y., Goel, J., Jacobson, N., Allison, R. S., & Wilcox, L. M. (2019). Stereoscopic Image Quality Assessment. SID Symposium Digest of Technical Papers, 50(1), 14-16.

Effects of motion gain on perception in VR

2021-2025

This project examined the consequences of non-unity motion gain in virtual reality, where the motion of the virtual viewpoint is scaled relative to the user's physical head motion. Main effects of gain have been observed on perceived slant and perceived distance, and gain has also been found to affect user comfort.

Related Publications:

- Teng, X., Wilcox, L., & Allison, R. (2024). Gain adaptation in virtual reality. Journal of Vision, 24(10), 1406-1406. https://doi.org/10.1167/jov.24.10.1406

- Teng, X., Allison, R., & Wilcox, L. (2023). Increasing motion parallax gain compresses space and 3D object shape. Journal of Vision, 23(9), 5015-5015. https://doi.org/10.1167/jov.23.9.5015

- Teng, X., Allison, R. S., & Wilcox, L. M. (2023, March). Manipulation of motion parallax gain distorts perceived distance and object depth in virtual reality. In 2023 IEEE Conference Virtual Reality and 3D User Interfaces (VR) (pp. 398-408). IEEE. https://doi.org/10.1109/VR55154.2023.00055

- Teng, X., Wilcox, L. M., & Allison, R. S. (2022). Binocular cues to depth and distance enhance tolerance to visual and kinesthetic mismatch. Journal of Vision, 22(14), 3312-3312. https://doi.org/10.1167/jov.22.14.3312

- Teng, X., Wilcox, L., & Allison, R. (2021). Interpretation of depth from scaled motion parallax in virtual reality. Journal of Vision, 21(9), 2035-2035. https://doi.org/10.1167/jov.21.9.2035

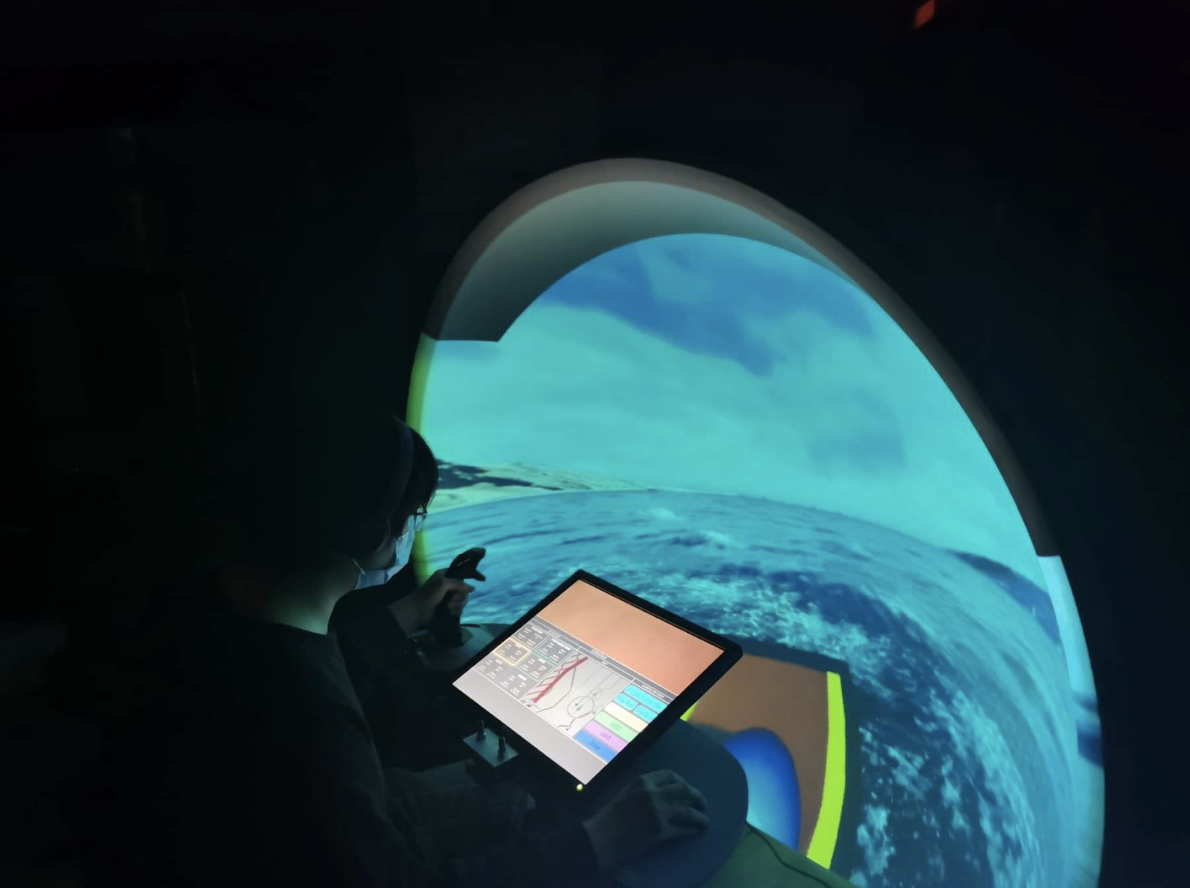

Maritime Autonomous Surface Shipping (MASS)

2021-2024

This project simulated a shore control center environment in which operators remotely monitor and operate Maritime Autonomous Surface Ship (MASS) vessels. The operator receives feeds from five vehicles: a large cargo vessel, three unmanned surface vehicles, and one drone, each with at least one camera providing information about the surrounding environment.

The simulated environment was used to better understand operators' situational awareness and trust in the context of autonomous shipping. We collaborated with members of the National Research Council of Canada’s Ocean, Coastal and River Engineering Research Centre (NRC-OCRE), who provided expert insight on specific implementations.


Related Publications:

- Kio, O. G., Afolabi, F., McGregor, A., Garvin, M., Gash, R., & Allison, R. S. (2024, September). Facilitating Safe Automated Navigation in Ice with Cooperating Autonomous Vehicles. In OCEANS 2024-Halifax (pp. 1-10). IEEE. https://doi.org/10.1109/OCEANS55160.2024.10754346

- Gregor, A., Allison, R. S., & Heffner, K. (2023). Exploring the Impact of Immersion on Situational Awareness and Trust in Remotely Monitored Maritime Autonomous Surface Ships. In IEEE OCEANS Conference (pp. 1–10). IEEE. https://doi.org/10.1109/OCEANSLimerick52467.2023.10244249

Collaborative Technology for Healthy Living

2021-2025

This internally funded project brought together 11 York University faculty members from four Faculties to collaborate and to build networks and research capacity. The project focused on immersive tele-wellness techniques for remote assessment and collaboration, supporting cognitive well-being across the lifespan, fitness maintenance, and injury recovery. Sub-projects included (1) implicit and explicit communication for effective telepresence, (2) conveying and quantifying body pose and motion, and (3) applying tele-wellness to health maintenance and restoration. Key aspects were co-design and co-production techniques and network building, which have supported continuing collaborations and the development of large-scale funding proposals.

Our previous major projects include the following; details can be found on the publications page.

- Stereoscopic cinema and 3D gaming

- Civilian uses of night-vision goggles in aviation, forestry, and security

- Vection in microgravity

- Gaze-contingent displays

- Calibration and alignment of stereoscopic displays

- Stereoscopic transparency

- Game-based vision therapy for convergence insufficiency

- VR tracking and locomotion

This page summarizes the research projects conducted at the Virtual Reality and Perception Lab. You can also check out our research partners or recent publications.