This is the first semester that I've taught 100% online. The spring 2020 lockdown and the YorkU strike in 2018 gave me a taste of what it would be like and alerted me to the need to restructure my classes to make them more favourable to learning. During the pandemic, students are struggling, but they still want to learn. Faculty should realize this and try their best to accommodate them. Here I'll describe what I did in both EECS 1011, an introductory programming class, and EECS 2021, a mid-level computer architecture class.

I don't presume to impose this approach on anyone, nor do I think that what I'm reporting here is perfect. Teaching in the pandemic is crisis teaching. It's hard, it's incomplete. But it's also an opportunity to try something different -- which I did, with the best of intentions towards the learning that my students want to do. This is a journey for me... I'm learning lots and hope to improve along the way.

The Three Sentence Summary

I got rid of exams in my online courses, focusing on assessing a combination of asynchronous, auto-graded interactive online activities and synchronous, TA-supervised lab activities. If the students complete all the assessments in the class, they get a B+. If they want to boost their grade beyond that, they do a project related to the course learning outcomes.

Spoiler alert!

The number of A's and A+'s didn't really change (nor did the failure rate).

Underlying Assumption: Online Exams are Bad

Exams online are a bad idea. There are no good ways to do exams online that (1) maintain academic integrity and (2) don't violate our students' dignity. ProctorTrack, webcams in washrooms (listen to minute 58), investigating the IP addresses of students, etc. -- these are all awful things to impose on students. It's also a battle that we, the professors, will lose -- the students have too much to gain by cheating in online tests.

I've personally spoken to students who cheated in Fall 2020 (they were caught in another prof's online exam), and they seem pretty blasé about it. Instagram, WhatsApp, text messaging... so many of the ways they normally share information and communicate with one another are just too easy to apply to exams during a pandemic. Even outside the ivory tower, which computer scientist or engineer isn't using online material (e.g. data sheets, forums) to help solve their everyday problems? Policing online exams is just tilting at windmills.

So, basically, I've been working to move my courses to a non-exam online format because it's just a better use of everyone's time and energy, and hopefully it will lead to better learning outcomes.

Potential Solution: Proficiency/Specifications-based teaching

Final exams are considered a rite-of-passage. They're held as sacrosanct by many. But they shouldn't be. The positive experience that my students and I had with alternatives, both at YorkU in Canada and at HsKa in Germany, confirmed for me that the traditional final exam is not necessary. Learning is the goal, not exams. And so we should seek out alternatives when the traditional assumptions and frameworks break down -- as they do during online learning, especially in a pandemic.

So what can we do that allows students to learn while forced to be online? Here I'll introduce one possibility that was being tried long before the pandemic forced us into making drastic changes to our courses. But first, let's talk about alternatives to the traditional exam that I looked into before COVID hit.

In the past I've used two-stage exams in my face-to-face classes, as have a few other faculty members in the Lassonde School, inspired by Dr. Tamara Kelly's excellent advice (YorkU Biology). In face-to-face assessments, two-stage exams are a fantastic modification to traditional testing because they combine both formative ("learning" stage) and summative ("final testing" stage) assessment.

But two-stage exams don't work in the online world for the same reasons that other traditional exams don't. Enter "specifications grading" (or proficiency grading). Jeff Harris and I had some preliminary discussions about Dr. Linda Nilson's / Dr. Robert Talbert's suggestions for using this approach. I took some liberties with the original ideas and this blog post is the result.

The Grade Breakdown

First, let's get grades out of the way. The grade should reflect the assumption that if the students do the work then they have shown a degree of proficiency in the material. To that end, there are two parts to the grading of these courses:

- the required components that add up to a B+ grade, and

- the optional components to aim for A or A+

"Do all the work and get a B+"

The assessment approach here is inspired by "Specifications" or "Proficiency" grading. Basically, it boils down to this: if a student completes all the work in the course, they'll get a B+. No quizzes, no midterm, no final exam. The B+ portion of the class is made up of three (EECS 2021) or four (EECS 1011) main components. Each is worth an equal portion of the student's grade, up to a total of 79.99% (the threshold between B+ and A):

| Grade "bin" (up to a B+) | EECS 1011 | EECS 2021 |
|---|---|---|
| Labs | 20% | 26.7% |
| Class readings & videos | 20% | 26.7% |
| Online interactive activities | 20% | 26.7% |
| Minor project | 20% | n/a |

This results in three primary grade bins in EECS 2021 and four in EECS 1011. Each sub-component within the main components is weighted identically (a weight of 1 mark) unless stated otherwise. In both classes a lab report is worth 1 mark. Likewise, an interactive class video is 1 mark. In EECS 1011, a subcomponent of a minor project is worth 1 mark. Each single mark gets placed in a bin, as described above.
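The bin structure can be sketched in a few lines of code. This is a minimal illustration, not the actual eClass gradebook setup: it assumes each item is stored as an (earned, possible) pair, that every item in a bin has the same weight of 1 mark, and that work not submitted is recorded as 0 earned. The function name `bplus_portion` is hypothetical.

```python
B_PLUS_CAP = 79.99  # the "up to a B+" portion of the final grade, in percent


def bplus_portion(bins: dict[str, list[tuple[float, float]]]) -> float:
    """Return the points a student earns toward the 79.99% B+ cap.

    `bins` maps a bin name (e.g. "Labs") to one (earned, possible) score per
    item in that bin. Each bin gets an equal share of the cap, and each item
    gets an equal share of its bin (the "weight of 1 mark" rule).
    """
    bin_share = B_PLUS_CAP / len(bins)
    total = 0.0
    for items in bins.values():
        item_share = bin_share / len(items)
        for earned, possible in items:
            total += item_share * (earned / possible)
    return total


# A student who completes everything with full marks reaches the B+ cap.
full = {
    "Labs": [(15, 15)] * 5,
    "Class readings & videos": [(1, 1)] * 10,
    "Online interactive activities": [(1, 1)] * 20,
}
print(round(bplus_portion(full), 2))  # 79.99
```

Because each bin's share is divided among its items, adding more items to a bin makes each individual item count for less, which is what keeps any single assessment low-stakes.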

So how does a single assessment contribute to the overall grade (up to the B+)? Here is an example, in the context of lab activities. With three main "bins" forming the "up to B+" portion of the course, the labs are worth a total of 26.7%. Let's assume that there are five labs in the semester, each with a demonstration, and that each lab demo is worth an equal portion of the Lab "bin". If a particular lab demo is graded out of 15 and the student receives 7 out of 15, that demo contributes about 2.49 points to the 79.99% that makes up the student's potential B+ (equation: ((79.99/3)/5)*(7/15)).
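The lab example above can also be written as a general formula. The symbol names are ours, not the course outline's: B = 79.99 is the B+ cap, k is the number of main bins, n is the number of items in the bin, and e/p is the earned/possible score on the item.

```latex
\text{contribution} = \frac{B}{k}\cdot\frac{1}{n}\cdot\frac{e}{p}
  = \frac{79.99}{3}\cdot\frac{1}{5}\cdot\frac{7}{15}
  \approx 26.663 \cdot 0.2 \cdot 0.4667
  \approx 2.49
```

With full marks on that demo (e/p = 1), the same lab would contribute about 5.33 points, so missing any one lab costs a student only a small slice of the B+ portion.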

Major Project: Aim for an A or A+

For some students, simply doing the required work and learning from that work is going to be sufficient or all that they can accomplish in a semester. That's fine. For others, possibly a minority, the major project is a way for them to apply and extend the learning they did beyond the regular class activities.

The Major Project is an optional additional component (the fourth in EECS 2021 and the fifth in EECS 1011), worth 20% of the final grade in both courses. Completing it puts the student in a position to possibly achieve an A or A+. The major projects will be graded relative to one another, based on the skill and originality demonstrated in the submission. Students submitting major projects will be expected to make themselves available for a video conference interview to describe and discuss their project.

The submission of a major project is not a guarantee of an A or A+. For example, not completing the main components of the course or submitting a trivial, relatively unskilled and/or unoriginal major project will be considered grounds for not assigning an A or A+. A student can either:

- Meet expectations for an "A" grade:

  - the student does something "solid" from a technical perspective; or

- Exceed expectations for an "A+" grade:

  - the student does something technically excellent, or combines a solid technical performance with a holistic "extra".
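One way to picture how the optional major project sits on top of the B+ portion is the sketch below. It is a hypothetical illustration, not the official grading rule: the letter cutoffs are assumed York-style cutoffs (only the 80% boundary between B+ and A comes from this post), the relative grading of major projects is not modelled, and capping the total at the B+ ceiling when the required work is incomplete is our reading of "grounds for not assigning an A or A+".

```python
B_PLUS_CAP = 79.99  # the "up to a B+" portion, from the post
MAJOR_CAP = 20.0    # the optional major project, from the post

# Assumed York-style letter cutoffs, for illustration only; only the
# B+/A boundary at 80% comes from the post.
LETTER_CUTOFFS = [
    (90, "A+"), (80, "A"), (75, "B+"), (70, "B"), (65, "C+"),
    (60, "C"), (55, "D+"), (50, "D"), (40, "E"), (0, "F"),
]


def letter(percent: float) -> str:
    """Map a percentage to a letter grade using the assumed cutoffs."""
    for cutoff, grade in LETTER_CUTOFFS:
        if percent >= cutoff:
            return grade
    return "F"  # only reached for negative input


def final_grade(bplus_points: float, major_points: float,
                required_done: bool) -> tuple[float, str]:
    """Combine the B+ portion with the optional major project.

    Hypothetical policy: if the required work is incomplete, the total is
    capped at the B+ ceiling, so the major project cannot lift it to an A.
    """
    total = min(bplus_points, B_PLUS_CAP) + min(major_points, MAJOR_CAP)
    if not required_done:
        total = min(total, B_PLUS_CAP)
    return total, letter(total)


print(final_grade(79.99, 0, True)[1])   # B+ (all required work, no project)
print(final_grade(79.99, 15, True)[1])  # A+ (strong major project)
print(final_grade(70, 15, False)[1])    # B+ (project can't replace missing work)
```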

What do Interactive Learning Activities Look Like?

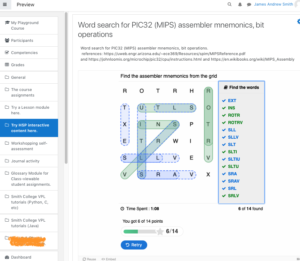

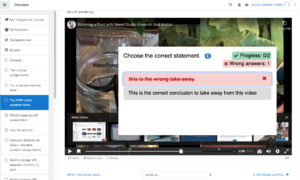

There were dozens of low-stakes interactive activities on eClass for both EECS 1011 and EECS 2021. They relied on the H5P plugins for Moodle and included multiple-choice questions, word searches, group sharing, drag-the-words, and interactive videos. Video content was hosted on YouTube, with interactive questions overlaid using H5P.

All of the "lecture" content is now posted on YouTube and imported into eClass with interactive questions superimposed. That means that all of the key learning content can be viewed over and over, both on eClass and on a lowest-common-denominator video platform outside of eClass. It's active learning and has a contemporary look-and-feel that I think students appreciate.

All of the scheduled class times have now been made into optional synchronous sessions on Zoom. They've effectively become public office hours for the students to ask me (and each other) questions. I also still hold all of my office hours on Zoom, but keep those sessions one-on-one.

Reduce Deadlines and Repeat Activities

For the most part, the interactive activities have no deadlines (except the obvious one: the last day of class). The exceptions were the lab activities, since the students were required to demonstrate their work to the teaching assistants over Zoom. I modified the deliverables in these lab activities to be more manageable for both the students and the TAs. Inspired by my undergrad instructor, Prof. Fred Vermuelen, a big advocate of repetition in teaching, I repeated certain lab activities across multiple weeks (e.g. create an 8-bit system in the first week, then create the same thing in 16 bits in the second week) as a way of reinforcing material while also reducing workload. I felt that this was a workable and fair balance that still let us achieve the learning outcomes of the course.

What is the workload like?

The workload issue is a perpetual one within Engineering and Computer Science programs. I get the impression that it has gotten worse during the pandemic. I've never received so many messages from students regarding their mental health, their need to help family members with health or personal issues, etc.

My hope was to address the workload issue in part by making as much of the content asynchronous as possible, and by making each individual assessment worth a small portion of the overall grade so that, if a student decided not to do it, it would have a minimal impact.

My desire to move my course to be primarily asynchronous is based on my conversations in 2012-14 with staff in the Continuing Education school at Ryerson, Nada Savicevic and Nadia Desai. For me, one of the surprising takeaways from learning about online learning in the continuing education context was how much learners value asynchronous, flexible learning, and that the ability to self-direct their learning and self-manage their time is key. I saw this firsthand while observing my wife take online classes through ETFO and YorkU.

I think that my approach to reducing the impact of workload by removing most deadlines was helpful, but I'm waiting to receive the course surveys after the semester is done to see if my students truly felt the same way.

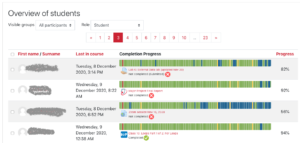

The Completion Tracker Tool for Managing Time & Activities Online

Based on what I'd heard and seen about continuing ed / distance ed in pre-pandemic times, I knew that keeping track of things on the LMS is a challenge for students who don't have regular in-person interactions with faculty, staff, TAs and fellow students. The completion tracker is an add-on for eClass that allows students and faculty to see how much work each student has done over the course of the semester. While it would be helpful during face-to-face teaching, I think that it's even more important in remote learning, where the feeling of disconnectedness is so pervasive.

I was worried that some of my students had disengaged over the course of the semester, as few (~10%) were attending the synchronous Q&A sessions each week. So, near the end of the semester, I checked in on students in the 2nd-year course. I used the convenient messaging system associated with the completion tracker to send out a message of concern. Based on the replies from these students, reaching out helped get at least some of them back on track.

(If you want to enable the Completion Tracker, turn editing on in eClass, and then scroll all the way to the bottom of the main page of your course, and look for the "Add Block" link on the bottom-right)
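The check-in described above amounts to finding students whose completion falls below some level. The sketch below shows that selection step under loud assumptions: the data layout (a student id mapped to completed/total activity counts), the default 50% threshold, and the function name `needs_check_in` are all hypothetical, and none of this is the eClass/Moodle completion tracker's own export format or API.

```python
def needs_check_in(progress: dict[str, tuple[int, int]],
                   threshold: float = 0.5) -> list[str]:
    """Return ids of students below `threshold` completion, least complete first.

    `progress` maps a (hypothetical) student id to a pair of
    (activities completed, activities in the course).
    """
    fractions = {
        sid: done / total
        for sid, (done, total) in progress.items()
        if total > 0  # skip malformed rows rather than divide by zero
    }
    flagged = [sid for sid, frac in fractions.items() if frac < threshold]
    return sorted(flagged, key=fractions.__getitem__)


progress = {"s1": (40, 40), "s2": (10, 40), "s3": (25, 40), "s4": (5, 40)}
print(needs_check_in(progress))        # ['s4', 's2']
print(needs_check_in(progress, 0.75))  # ['s4', 's2', 's3']
```

Sorting the flagged list from least to most complete puts the students who are furthest behind at the top of the message list.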

Low-Cost @Home Hands-on Labs

To help engage students, especially in Engineering and Science, I think that it's key for students to work with real equipment as often as possible, in both formal lab settings and outside them. To this end, I have advocated for low-cost student lab equipment for many years, both at York and at my previous institution. In 2019 I received funding from the Lassonde Dean's Office to explore allowing students to purchase lab kits for use both inside and outside the lab environment. When COVID hit I simply modified and accelerated the process.

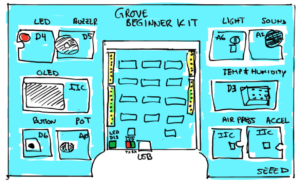

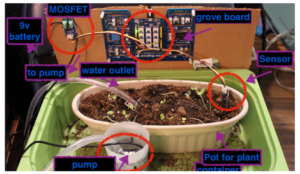

This kit was intentionally chosen because it would work both on-campus and off, can be used in typical labs, but also in projects like the minor and major projects in EECS 1011 (procedural programming / Matlab) or 1021 (object-oriented programming / Java). The minor project centres around monitoring soil conditions and watering plants, as shown here.
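To give a feel for the kind of logic a soil-monitoring project involves, here is a hypothetical sketch written in Python rather than the Matlab used in EECS 1011. The moisture scale (0 = dry, 1 = saturated), both thresholds, and the function name are assumptions, and a real kit would read a sensor and switch a pump instead of processing a list of numbers. Using two thresholds (hysteresis) is one common choice, not necessarily what the course project requires.

```python
DRY = 0.30  # start watering below this moisture level (assumed)
WET = 0.50  # stop watering above this moisture level (assumed)


def pump_commands(readings: list[float]) -> list[bool]:
    """Return the pump state (True = on) after each moisture reading.

    Separate on and off thresholds keep the pump from toggling rapidly
    when the moisture level hovers around a single set point.
    """
    pump_on = False
    states = []
    for moisture in readings:
        if not pump_on and moisture < DRY:
            pump_on = True
        elif pump_on and moisture > WET:
            pump_on = False
        states.append(pump_on)
    return states


print(pump_commands([0.6, 0.4, 0.29, 0.35, 0.45, 0.51, 0.4]))
# [False, False, True, True, True, False, False]
```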

In EECS 2021 the students used the inexpensive ATmega328PB Xplained Mini, a sub-$20 embedded system board related to the Arduino, but which contains a hardware debugger rather than the typical Arduino bootloader.

Unlike some other engineering or science programs that have chosen to switch to "virtual labs" during the pandemic, with no hands-on content and canned data sets, my students are working on real hardware, with the full breadth of learning that goes along with that. I understand that this isn't possible for all courses, but when we have the ability and resources to allow students to continue to learn with real scientific and engineering equipment I think that it's the better way to go.

Grading Results [updated Jan 2021]

Now that the semester is done I can look at the grading results.

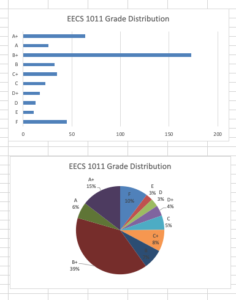

In EECS 1011

The average for the class was 68.4%, with a high standard deviation of 20.4%. The average is about the same as normal, but the standard deviation is higher. Based on the final grade calculations, we see that this semester

- 21% of the class got an A or A+.

- 39% of the class got a B+

- 7% of the class got a B

- 8% got a C+

- 5% got a C

- 7% got a D or D+

- 13% got an E or F

How does this compare with previous offerings of EECS 1011? On average, from 2016 to 2020, EECS 1011 saw 26% A or A+, 37% B or B+, and 5% E or F. So this semester the share of A or A+ students dropped slightly in EECS 1011 (21% vs 26% previously) and the failure rate rose (13% vs 5% previously were E or F). The moderate grades this semester skewed heavily towards B or B+: 46% of the class received either a B or B+, which is much higher than normal. As for the work completion rate, based on the completion tracker for the course:

- # of students who completed 90% or more of the activities in the course: 292 (66.4%)

- # of students who completed 75% to 89.9% of the activities in the course: 82 (18.6%)

- # of students who completed between 50% and 74.9% of the activities in the course: 51 (11.6%)

- # of students who completed under 50% of the activities in the course: 15 (3.4%)

- Major Project submissions: 28% (126/440).
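The percentages in the EECS 1011 list can be reproduced from the raw counts reported by the completion tracker. This short check only re-does the arithmetic on numbers already given in the post; the bucket labels and the `shares` helper are ours.

```python
def shares(counts: dict[str, int]) -> dict[str, float]:
    """Convert a count per bucket into a percentage of the class (one decimal)."""
    total = sum(counts.values())
    return {bucket: round(100 * n / total, 1) for bucket, n in counts.items()}


# Completion-tracker counts for EECS 1011, copied from the list above.
EECS1011_COMPLETION = {">=90%": 292, "75-89.9%": 82, "50-74.9%": 51, "<50%": 15}

print(sum(EECS1011_COMPLETION.values()))  # 440 students
print(shares(EECS1011_COMPLETION))
# {'>=90%': 66.4, '75-89.9%': 18.6, '50-74.9%': 11.6, '<50%': 3.4}
print(round(100 * 126 / 440, 1))  # 28.6 (% of students submitting a major project)
```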

In EECS 2021

Based on the final grade calculations, we see that this semester

- 8% of the class got an A or A+.

- 53% of the class got a B+

- 20% of the class got a B

- 9% got a C+

- 3% got a C

- 4% got a D or D+

- 3% got an E or F

How does this compare with previous offerings of EECS 2021? On average, from 2016 to 2020, we saw 20% A or A+, 26% B or B+, and 12% E or F. So this semester, in EECS 2021, the share of A or A+ students dropped (8% vs 20% previously) and so did the failure rate (3% vs 12% previously were E or F). The moderate grades this semester skewed heavily towards B or B+ (73% of the class, vs 26% previously). From the completion tracker on the LMS we see the following:

- # of students who completed 90% or more of the activities in the course: 140 (84%)

- # of students who completed 75% to 89.9% of the activities in the course: 15 (9%)

- # of students who completed between 50% and 74.9% of the activities in the course: 9 (5%)

- # of students who completed under 50% of the activities in the course: 3 (2%)

- # of Major Project submissions: 8% (13/167).

So, in this second year class most students appeared to have the discipline to aim for and obtain the B+ (or B).
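To read the two courses side by side, the sketch below computes the percentage-point change between Fall 2020 and the 2016-2020 averages, using only the percentages reported above (B or B+ this semester is the B+ share plus the B share). The `change` helper and the category labels are ours.

```python
def change(now: dict[str, int], before: dict[str, int]) -> dict[str, int]:
    """Percentage-point change per grade category (positive = more students)."""
    return {k: now[k] - before[k] for k in before}


# Percent of class per category, from the post.
EECS1011_F2020 = {"A/A+": 21, "B/B+": 39 + 7, "E/F": 13}
EECS1011_AVG = {"A/A+": 26, "B/B+": 37, "E/F": 5}
EECS2021_F2020 = {"A/A+": 8, "B/B+": 53 + 20, "E/F": 3}
EECS2021_AVG = {"A/A+": 20, "B/B+": 26, "E/F": 12}

print(change(EECS1011_F2020, EECS1011_AVG))  # {'A/A+': -5, 'B/B+': 9, 'E/F': 8}
print(change(EECS2021_F2020, EECS2021_AVG))  # {'A/A+': -12, 'B/B+': 47, 'E/F': -9}
```

In both courses, the largest shift is the growth of the B/B+ band, which is where the "do all the work" pathway lands.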

There is no "Easy A" on this learning journey

So, is this a pathway to an "easy A"? No, it does not appear to be. The number of students who attempted the major project, the only route to an A or A+, was no more than a third of the class (28% in EECS 1011 and 8% in EECS 2021). A clear majority of the students in both classes did not attempt to boost their grade, but most of them (66% in EECS 1011 and 84% in EECS 2021) completed 90% or more of the learning tasks in the course.

What's next? Retention of Learning.

So, did this actually change anything about how and what the students learned? Will they retain what they learned, and will it affect learning in future courses? I don't know. I'm looking forward to following up with the students to see. Courses like EECS 2030 will be worth examining, starting in Winter 2021 and Fall 2021.

Reaction of Colleagues to this approach and the results

Since the Spring of 2020 I've witnessed a number of internal discussions about what to do about online teaching. Much of the discussion has, in my mind, been wasted on tweaking and tuning online testing. While initially there was much support for technology like ProctorTrack, enthusiasm for it dropped off by the Summer of 2020 as reports came back that it was terribly invasive and did not appear to be effective. But most of my colleagues insisted that online exams had to continue during the pandemic.

The discussion, by the end of the Fall 2020 semester, appears to have shifted to "oh, yeah, students cheated on final exams because they have access to Discord, WhatsApp, etc. and we can't do anything about it."

I'm still firmly of the opinion that many professors are barking up the wrong tree. Generally speaking, in-person exams make sense, but online exams don't. We simply shouldn't be putting much effort or faith into exams right now. That said, proposals like the one in this blog are viewed with scepticism. The initial results are, in my mind, promising, but many of my colleagues are doubtful. I hope to compare student results during in-person teaching in 2021/22/23 to see whether students who took alternative pathways do better or worse at demonstrating learning outcomes.

Conclusions

I'm really happy with the reactions that students had to the approach taken in EECS 1011 and 2021. The grades don't appear to be wildly different from what they were pre-pandemic. When we go back to face-to-face teaching I'm very likely to keep using the asynchronous and interactive content, as well as the inexpensive take-home lab equipment and the completion tracker. I'm hearing that other "commuter schools" like UBC are looking at doing the same to augment and enhance learning in face-to-face classes. Face-to-face exams? Sure, there is value in them, so I'll probably continue to use relatively low-stakes two-stage testing, but only when we're able to do them safely, with dignity, and with a real shot at maintaining academic integrity.

Support Files [updates May 2021 & Nov 2021]

Now that we've had a chance to breathe after two full, regular semesters of pandemic teaching, I've received a few requests for more information on my approach to pandemic teaching. Here are some files related to the courses:

Fall 2020 & Winter 2021

- EECS 1011 (Intro to Matlab / Procedural Programming)

- EECS 1021 (Intro to Java / Object Oriented Programming)

  - Course Outline (W2021 for EECS 1021)

Fall 2021

- EECS 1011 (Matlab / Procedural)

  - Course Outline (Fall 2021)

- EECS 2021 (Computer Organization / Architecture)

  - Course Outline (Fall 2021)

Acknowledgements

This list won't be complete. Apologies if I missed you... here we go...

The work behind much of what is described here was inspired by a Teaching Commons course with Robin Sutherland-Harris ("Taking it Online!") and YorkU's Teaching Commons. A bunch of us did a break-out series of meetings after the course to work on implementing the things we learned with Robin. This includes Profs. Meg Luxton (LAPS School of Gender, Sexuality and Women's Studies), Sabine Dreher (Glendon Campus, International Studies Dept), Josée Rivest (Glendon Campus, Centre for Cognitive Health), and Shirley Roburn (LAPS, Dept. of Communication Studies).

I also need to thank Patrick Thibeaudeau and Lisa Caines at UIT, Muhammad Javeed in the Lassonde School, our fantastic tech team in the EECS Department (Ulya, Jason, Paul, Seela, Nam, Jaspal, Gayan, Eric...) and others. The changes to computing hardware and software on campus, your willingness to get on board with all the crazy ideas -- you're all fantastic!

Thanks to Prof. Iris Epstein and Prof. Melanie Baljko and the other members of the SMART Toolbox project for giving some helpful structure to the student video submissions.

Thank you to everyone in the YorkU Bookstore for helping put together our lab kits and getting them out to students.

I also need to give a shout-out to Nada and Nadia from the Chang School at Ryerson for working with me so many years ago on a course and the paper.

And the icons? They're from The Noun Project. I'm a subscriber.

Update March 2021

And then we have stories like this one, apparently about a student in Myanmar not receiving accommodations for a political situation that makes taking online tests remotely difficult, if not impossible. Let's imagine, for a moment, that the instructor had provided a different assessment path for students that had, in its very design, permitted flexibility without the need for an interaction like the one that was posted to Reddit. Now, imagine if both the upper admin and the department in question had been more proactive in advocating for more progressive assessment pathways.

Clearly, I'm not the only one investigating and implementing alternatives to exams, timed or otherwise. This August 2020 article in The Conversation is going to be an interesting rabbit hole to explore.

The original version of this post appeared in December 2020 at https://drsmith.blog.yorku.ca/2020/12/pedagogy-in-a-pa…ng-without-exams/

James Andrew Smith is an associate professor in the Electrical Engineering and Computer Science Department in York University's Lassonde School. He lived in Strasbourg, France and taught at the INSA Strasbourg and Hochschule Karlsruhe while on sabbatical in 2018-19 with his wife and kids. Some of his other blog posts discuss the family's sabbatical year, from both personal and professional perspectives.