About Artificial Intelligence (AI) Technology

Since the launch of ChatGPT in late 2022, generative artificial intelligence (GenAI) has received considerable attention in the media and within educational institutions. GenAI tools can mimic, and at times exceed, human abilities to research, write, solve problems, create art, produce videos, and even “learn” and evolve. Since ChatGPT’s release, many other GenAI tools have been developed, and existing tools are continually updated, producing increasingly coherent and human-like responses. As a result, there is concern that GenAI tools can be used to help students engage in academic misconduct.

This page has been created to help instructors understand the capabilities of GenAI technology and to provide strategies that help students avoid using it to engage in academic misconduct.

As an instructor, anticipate that students will be curious about these tools and will need clear direction about whether they are permitted to use them for academic work in your course. Instructors are therefore encouraged to engage students in an open discussion about GenAI use and how such use intersects with academic integrity.

Institutional Expectations

When talking to students, let them know about institutional expectations:

- Any unauthorized use of GenAI for assessments is considered to be a breach of academic honesty.

- Remind them of York’s Senate Policy on Academic Honesty and give examples of how unauthorized use of this technology can constitute:

  - cheating, if they use an AI tool to gain an improper advantage on an academic evaluation without their instructor’s authorization (Section 2.1.1), or

  - plagiarism, if they use images created by another, e.g., through DALL-E or another image-generating tool, without their instructor’s authorization and without attributing the creator (Section 2.1.3).

- Be clear about your expectations and explicit in your assignment instructions.

- To reduce confusion, communicate these expectations in several ways: include them in eClass, your course syllabus, and assignment guidelines, and repeat them in class.

- Explain that instructors may have different expectations for GenAI use: if one instructor permits it, that does not mean others will.

- Encourage students to ask you questions about GenAI.

Note: The Academic Integrity and Generative Artificial Intelligence (AI) Technology document distributed by the ASCP committee in February 2023 draws on York's Senate Policy on Academic Honesty to provide clarity on the use of this technology for academic work.

Consider co-creating class guidelines or a charter with your students on the use of GenAI in your courses, guided by questions such as:

- Should GenAI be used in this course?

- If so, what are some guidelines pertaining to its use to ensure that learning outcomes are met?

Related Student Discussion Topics

Hold discussions with students to learn their perspectives on, and concerns about, this technology. Guiding discussion questions might include:

- When do you think it’s acceptable to use GenAI for your academic work?

- When do you think it should be considered cheating?

- In what ways can you ethically use GenAI to support your learning in this course? In your discipline?

Alternatively, you can use the Survey Questions for Students about GenAI to stimulate conversation, or administer them as an anonymous survey to better understand your students’ thinking.

Finally, consider adding a GenAI discussion thread to your course forum, where students can connect, share information, ask questions, and post articles.

GenAI technology has created a need to revise assessment practices, and at the same time it offers some new possibilities for in-class learning. Assessments can be redesigned so that students are not submitting work that AI apps can easily produce. Some ideas for redesigning assessments are listed below.

Expand/Replace Assessments

Consider expanding or replacing written assessments to:

- focus more on the process of the writing assignment rather than on the final product

- have students demonstrate original thought and critical thinking, since AI tools have been shown to be weak at these higher-order skills

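- ask students to use current sources, such as those published after the training-data cutoff of widely used GenAI tools (September 2021 for the original release of ChatGPT)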

- ask students to apply personal experience or personal knowledge to course topics

- create assessment questions that are based on the context of classroom discussion

- or, replace a written assessment with a multimodal one

You may also want to update your grading criteria or rubrics to emphasize assessment of deeper discipline-specific skills such as argumentation, use of evidence, or interpretive analysis, rather than the mechanics of writing and essay organization. This can help re-weight your assessments in favour of student learning and away from skills easily performed by GenAI tools.

Integrate Generative AI Technology

Many educators are experimenting with integrating GenAI technology into their assessment design. If you decide to incorporate such tools into assessments, keep the limitations of GenAI in mind. Some ways that students can use tools like ChatGPT to apply higher-order skills are to:

- generate a ChatGPT response to a particular question, and then write an analysis of that response’s strengths and weaknesses

- fact-check the responses that ChatGPT provides to identify incorrect information

- generate a paper from ChatGPT and evaluate its logic, consistency, accuracy and bias, including any stereotypes it may reinforce

- use ChatGPT to create an outline that students can then use to develop an essay

Note: If using the above strategies, be mindful of potential accessibility and equity issues that may arise when shifting assessment modalities.

Communicating Course Expectations through Syllabus Statements

The Sample Syllabus Statements Regarding the Use of GenAI document contains options that you can include in your course syllabi or post on eClass to clarify your expectations about GenAI use.

To select the appropriate statements for your courses, consider whether and how you want students to use GenAI in their assessments:

- No use permitted

- Use permitted for some assessments

- Use permitted with some parameters

You can also use these statements to prompt discussion with students throughout the term.

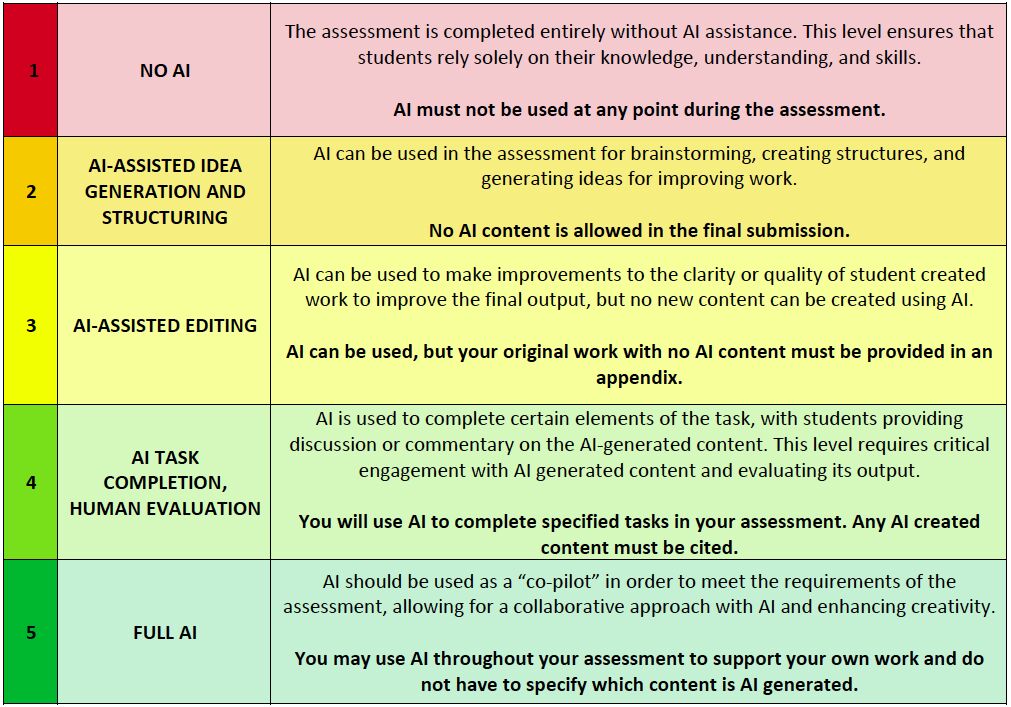

Communicating Assessment Expectations through the AI Assessment Scale (AIAS)

To promote clarity and the ethical use of GenAI, the AIAS framework was developed to support educators and students who may have a limited understanding of these tools. The AIAS consists of five levels, ranging from “no AI” to “full AI,” and can be used across disciplines. A pilot study at one institution reported a decrease in academic misconduct cases involving GenAI after the scale was introduced (Furze et al., 2024).

Beyond formal assessments, GenAI tools can also be used in ungraded or low-stakes learning activities during class time. Bringing these tools into lectures or discussions can help students understand how and when to use AI technology effectively and ethically, in ways that align with the norms and standards of your discipline. Some learning activities you might consider include:

- Using GenAI-generated text as the starting point for a class discussion on a particular topic:

  - What does it get right?

  - What is it missing?

  - How would it need to be revised to meet the scholarly standards of your field?

- Having small teams of students experiment with using GenAI to create text about a given subject, and then comparing the results (what grade would they assign the response using a course rubric?) and/or the process (what prompts and revisions were needed to generate the text?).

- Holding a class debate against GenAI, using the tool to generate counterarguments that help students explore other perspectives and strengthen their own arguments.

- Asking your students! Gather anonymous feedback about whether they are using the tool, what value it provides them, and how they think it should be used in your disciplinary or teaching context.

Many AI detection tools are now available, yet none has been shown to reliably identify AI-generated content. To date, detectors have proven unreliable, producing both false positives and false negatives. Many have not been rigorously tested and cannot keep pace with the evolving nature of GenAI. Detectors also raise privacy concerns, since most require you to copy and paste into them the student work you suspect was generated by AI.

To identify possible AI-generated content without a detection tool, keep in mind the known limitations of GenAI tools, such as misinformation, fabricated references, and a lack of in-depth information.

If the unauthorized use of generative AI is suspected, instructors should follow the process outlined in the Senate Policy on Academic Honesty.

Resources

Sample Syllabus Statements Regarding the Use of GenAI

- This document contains options that you can include in your course syllabi or post on eClass to clarify your expectations about GenAI use. To select the appropriate statements for your courses, consider whether and how you want students to use GenAI in their assessments (no use permitted, use permitted for some assessments, or use permitted with some parameters).

Survey Questions for Students about GenAI

- These questions could be used to stimulate conversation with your students about GenAI, or you could use them in an anonymous survey to better understand their thinking.

Artificial Intelligence Assessment Scale (AIAS)

- To promote clarity and the ethical use of GenAI, the AIAS framework was developed to support educators and students who may have a limited understanding of these tools. The AIAS consists of five levels, ranging from “no AI” to “full AI,” and can be used across disciplines.

- For more information on the AIAS:

Furze, L., Perkins, M., Roe, J., & MacVaugh, J. (2024). The AI Assessment Scale (AIAS) in action: A pilot implementation of GenAI supported assessment. arXiv preprint arXiv:2403.14692. https://arxiv.org/pdf/2403.14692v2.pdf

For support on GenAI in pedagogy and assessment, you can:

- Reach out to your educational developer liaison

- Reach out to your liaison librarian

- Reach out to the Academic Integrity Specialist: academicintegrity@yorku.ca